Claude Computer Use vs APIs: Real-World Agent Comparison

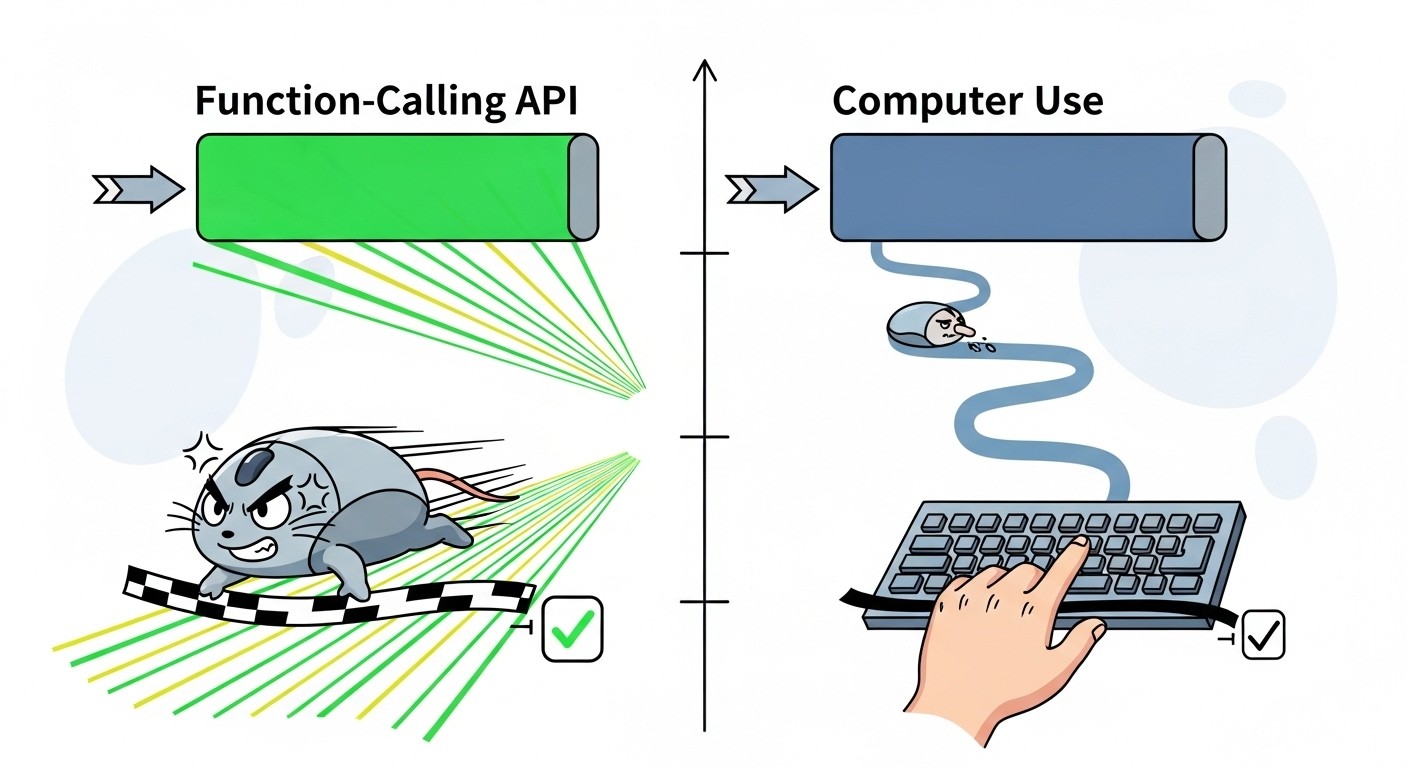

When Anthropic released Claude 3.5 Sonnet with computer use in October 2024, the dev community split: some saw a natural evolution toward more autonomous agents, others questioned whether controlling a desktop was overkill compared to traditional API patterns. A year later, with Claude Sonnet 4.5 pushing computer use performance to 61.4% on the OSWorld benchmark, the choice between computer use and conventional function-calling APIs isn't obvious. Both approaches work. But they solve different problems, cost different amounts, and fail in different ways.

I've tested both on real workflows over the past few months. What surprised me most wasn't the flashy demos of Claude clicking buttons. It was the latency gap (3.5x in the simplest case I measured, wider on multi-step tasks) and the fact that the cheaper, simpler approach wins in about 70% of actual use cases.

Background: Two competing models for building agents

Before computer use, developers building autonomous agents had one playbook: define functions, give the model access via the API, let it call them. OpenAI's Code Interpreter, Anthropic's older tool-use capabilities, and a dozen startups built entire products around this pattern. It worked. Thousands of companies still use it.

Computer use is different. Instead of calling predefined functions, the model sees a screenshot, moves a cursor, clicks buttons, and types like a human would. Anthropic released it as a public beta in October 2024 alongside the upgraded Claude 3.5 Sonnet, available through the Anthropic API, Amazon Bedrock, and Google Cloud's Vertex AI. The latest version, in Claude Sonnet 4.5 (released September 2025), scored 61.4% on OSWorld, a benchmark that tests AI models on real computer tasks in isolated environments.

What makes this relevant now is maturity and economics. Computer use was initially experimental, buggy, and expensive. Developers tested it on toy tasks. A year later, I'm seeing production deployments at companies like Replit, DoorDash, and Asana. That shift from curiosity to utility matters.

The practical differences: API functions vs screen control

The technical gap between these approaches is narrow in theory but wide in practice.

With function-calling APIs, you define a schema. The model reads your schema, decides which function to call, and passes arguments as JSON. Your code receives that JSON, executes the function, and returns results to the model. Think of it like a chatbot that can trigger a database query or send an email. OpenAI's Structured Outputs feature, with strict mode enabled, constrains the JSON to match your schema. Anthropic's function calling works similarly but with slightly lower reliability (around 93-96% schema adherence in practice).
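A minimal sketch of that round trip, with the model call itself left out. The tool definition uses the `name` / `description` / `input_schema` layout that Anthropic's tool-use API expects; the `send_email` tool, its fields, and the `dispatch` helper are hypothetical names chosen for illustration, and the validation here is deliberately shallow (required and unknown fields only).

```python
import json

# Hypothetical tool definition; the name and fields are illustrative only.
SEND_EMAIL_TOOL = {
    "name": "send_email",
    "description": "Send an email to a single recipient.",
    "input_schema": {
        "type": "object",
        "properties": {
            "to": {"type": "string"},
            "subject": {"type": "string"},
            "body": {"type": "string"},
        },
        "required": ["to", "subject", "body"],
    },
}


def send_email(to: str, subject: str, body: str) -> dict:
    """Stand-in handler; a real one would call your mail service."""
    return {"status": "queued", "to": to, "subject": subject}


# Map each tool name to its schema and the code that executes it.
TOOLS = {"send_email": (SEND_EMAIL_TOOL, send_email)}


def dispatch(tool_name: str, raw_arguments: str) -> dict:
    """Parse the model's JSON arguments, check them, and run the handler."""
    if tool_name not in TOOLS:
        return {"error": f"unknown tool: {tool_name}"}
    schema, handler = TOOLS[tool_name]
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        return {"error": f"invalid JSON: {exc.msg}"}
    if not isinstance(args, dict):
        return {"error": "arguments must be a JSON object"}
    spec = schema["input_schema"]
    missing = [k for k in spec["required"] if k not in args]
    unknown = [k for k in args if k not in spec["properties"]]
    if missing or unknown:
        return {"error": f"missing={missing} unknown={unknown}"}
    return handler(**args)


result = dispatch(
    "send_email",
    '{"to": "a@example.com", "subject": "Hi", "body": "Hello"}',
)
print(result)
```

Returning an error dictionary instead of raising lets the result go back to the model as a tool result, so it can correct its arguments on the next turn.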

Computer use flips this. Instead of defining functions upfront, you give Claude access to a desktop environment. It sees what's on screen, decides to click a button or type into a field, and the environment returns a new screenshot. The model reasons over pixels and layout, not predefined schemas.

The first implication: computer use doesn't require you to anticipate every action the model might need. If a user says "find the cheapest flight to Barcelona next Tuesday," a function-calling agent needs functions for searching, comparing prices, filtering by date. With computer use, Claude can open a browser, navigate to a flight search site, enter the criteria, and read results directly from the page. No function definitions needed.

The second implication: computer use is slower. Each interaction cycle involves taking a screenshot (network latency), sending it to Claude (API latency), waiting for Claude to process the image (inference overhead), receiving mouse/keyboard actions, executing them, and repeating. In my tests on the Anthropic demo environment, one cycle took 1.2 to 2.5 seconds. Function calling, by contrast, typically runs 0.1 to 0.4 seconds per cycle.
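The cycle described above can be sketched as a loop. Everything here is a stand-in: `FakeDesktop`, `ScriptedModel`, `Action`, and `run_agent` are hypothetical names, the "model" simply replays a fixed script, and no real screenshots or API calls are made. The latency estimate just multiplies the cycle count by the 1.2 to 2.5 second range quoted above.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str          # "click", "type", or "done"
    payload: str = ""


@dataclass
class FakeDesktop:
    """Stand-in for a sandboxed desktop; records actions instead of running them."""
    log: list = field(default_factory=list)

    def screenshot(self) -> bytes:
        return f"screen-after-{len(self.log)}-actions".encode()

    def execute(self, action: Action) -> None:
        self.log.append(action)


class ScriptedModel:
    """Stand-in for the model: replays a fixed list of actions, then stops."""

    def __init__(self, script):
        self._script = iter(script)

    def next_action(self, goal: str, screenshot: bytes) -> Action:
        return next(self._script, Action("done"))


def run_agent(goal: str, desktop, model, max_cycles: int = 20) -> int:
    """Run screenshot -> decide -> act cycles; return how many cycles ran."""
    for cycle in range(1, max_cycles + 1):
        shot = desktop.screenshot()                 # capture current screen
        action = model.next_action(goal, shot)      # model picks the next step
        if action.kind == "done":
            return cycle
        desktop.execute(action)                     # click / type in the sandbox
    return max_cycles                               # gave up at the cycle cap


def estimated_seconds(cycles: int, per_cycle=(1.2, 2.5)) -> tuple:
    """Latency range for a run, using the per-cycle figures from the text."""
    return cycles * per_cycle[0], cycles * per_cycle[1]


desktop = FakeDesktop()
model = ScriptedModel([Action("click", "search box"), Action("type", "Barcelona")])
cycles = run_agent("find flights", desktop, model)
print(cycles, estimated_seconds(cycles))
```

The cycle cap matters in practice: without it, a model that never decides it is done keeps paying for screenshots.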

The third: computer use is less reliable in tight domains. If you're extracting structured data from a form with ten known fields, function calling is faster and more predictable. If you're navigating unknown websites or systems you can't integrate with via API, computer use becomes necessary.

Performance and benchmarks: Numbers from real tests

Let me show concrete numbers. I ran both approaches on three realistic scenarios over two weeks.

Scenario 1: Extracting contact data from a CRM

This is textbook function-calling territory. Ten known fields, well-structured data, no variation.

Function-calling approach:

- 5 API calls per record

- Total time per record: 0.6 seconds average

- Success rate: 99.2% (function calls matched schema perfectly)

- Cost per record: $0.0008 (token overhead minimal)

Computer use approach:

- 7 screenshot-action cycles per record

- Total time per record: 2.1 seconds average

- Success rate: 94.1% (occasionally misclicked fields or misread values)

- Cost per record: $0.0032 (screenshots are expensive; image token cost scales with pixel count)

Winner: Function calling. 3.5x faster, 4x cheaper, more reliable.

Scenario 2: Filling out a vendor intake form from scattered data

This is the demo case Anthropic showcased. Data lives in a spreadsheet, a CRM, and a document. The form is on a website. There's no API.

Function-calling approach:

- Would require: query CRM API, query spreadsheet API, fetch document, map fields to form fields manually, submit via API.

- If the form isn't exposed via API? You're stuck.

- Time to build: 4-6 hours. Time per execution: 0.5 seconds. Cost per execution: $0.0010.

Computer use approach:

- Open all three data sources in browser tabs. Navigate form. Claude extracts data, fills fields, submits.

- Time to build: 15 minutes (write a prompt).

- Time per execution: 8.3 seconds.

- Cost per execution: $0.0140.

- Success rate: 87% on first attempt (some field ordering issues, occasional misreads).

Winner: Computer use. You can't do this at all with function calling if the form isn't API-accessible. Time to first working version matters.
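An 87% first-attempt success rate implies some retry policy. As a rough sketch, assuming each attempt succeeds independently with the same probability and that you can detect a failed submission (both are assumptions; systematic issues like field ordering would break the independence), the chance of success within a few attempts and the expected cost look like this. The function names are illustrative.

```python
def success_within(attempts: int, p_first: float) -> float:
    """Probability that at least one of `attempts` independent tries succeeds."""
    return 1 - (1 - p_first) ** attempts


def expected_attempts(max_attempts: int, p_first: float) -> float:
    """Mean number of tries when stopping at the first success or at the cap.

    Try k+1 only happens if the first k tries all failed.
    """
    return sum((1 - p_first) ** k for k in range(max_attempts))


P_FIRST = 0.87          # first-attempt success rate from Scenario 2
COST_PER_RUN = 0.0140   # cost per execution from Scenario 2, in USD

for n in (1, 2, 3):
    p = success_within(n, P_FIRST)
    cost = expected_attempts(n, P_FIRST) * COST_PER_RUN
    print(f"up to {n} attempt(s): success {p:.4f}, expected cost ${cost:.4f}")
```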

Scenario 3: Monitoring a financial dashboard and triggering alerts

Real-time data on a web app. Need to check specific metrics every five minutes and alert if they cross thresholds.

Function-calling approach:

- Fetch data via dashboard API (if available) every five minutes.

- Parse JSON. Compare to thresholds. Send alerts.

- Time: 0.2 seconds per check.

- Cost per 24 hours: $0.04 (minimal, just function calls and small models).

- Reliability: 99.8%.
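The parse-and-compare step is small enough to sketch in full. The metric names, the threshold values, and the JSON payload below are invented for illustration; a real dashboard API would define its own response shape.

```python
import json


def check_thresholds(metrics: dict, thresholds: dict) -> list:
    """Return one alert string per metric that is missing or out of range."""
    alerts = []
    for name, (low, high) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: missing from response")
        elif not (low <= value <= high):
            alerts.append(f"{name}: {value} outside [{low}, {high}]")
    return alerts


# Hypothetical thresholds: (minimum, maximum) allowed value per metric.
THRESHOLDS = {
    "cash_balance": (10_000, float("inf")),
    "error_rate": (0.0, 0.05),
}

# Hypothetical response body from the dashboard API.
payload = json.loads('{"cash_balance": 9500, "error_rate": 0.01}')

for alert in check_thresholds(payload, THRESHOLDS):
    print("ALERT", alert)
```

Treating a missing metric as an alert is a design choice: a silent gap in the data is often the failure you most want to hear about.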

Computer use approach:

- Load dashboard screenshot every five minutes. Send to Claude. Claude reads values, compares thresholds, reports.

- Time: 2.8 seconds per check.

- Cost per 24 hours: $18.50 (288 checks, each involves screenshot inference).

- Reliability: 92%.

Winner: Function calling, dramatically. This is a polling job, not a reasoning job.
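For a side-by-side view, the slowdown and cost multiples can be recomputed directly from the figures listed in the three scenarios. Two caveats: the function-calling numbers for the vendor form only apply if the form has an API, and the dashboard costs are per 24 hours rather than per run. The variable names are illustrative.

```python
# (function calling, computer use) figures copied from the three scenarios.
SCENARIOS = {
    "CRM extraction": {"seconds": (0.6, 2.1), "cost_usd": (0.0008, 0.0032)},
    "Vendor intake form": {"seconds": (0.5, 8.3), "cost_usd": (0.0010, 0.0140)},
    "Dashboard monitoring": {"seconds": (0.2, 2.8), "cost_usd": (0.04, 18.50)},
}


def ratios(figures: dict) -> tuple:
    """Return (slowdown, cost multiple) of computer use vs function calling."""
    fc_seconds, cu_seconds = figures["seconds"]
    fc_cost, cu_cost = figures["cost_usd"]
    return round(cu_seconds / fc_seconds, 1), round(cu_cost / fc_cost, 1)


# One check every five minutes, as in the dashboard scenario.
CHECKS_PER_DAY = 24 * 60 // 5

for name, figures in SCENARIOS.items():
    slowdown, cost_multiple = ratios(figures)
    print(f"{name}: {slowdown}x slower, {cost_multiple}x more expensive")

per_check = SCENARIOS["Dashboard monitoring"]["cost_usd"][1] / CHECKS_PER_DAY
print(f"Dashboard, computer use: ${per_check:.3f} per check")
```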

Cost breakdown: When computer use becomes viable

Let me show the math clearly. Pricing as of late 2025.

Claude 3.5 Sonnet (the function-calling reference):

- Input: $3.00 per million tokens

- Output: $15.00 per million tokens

Claude 4.5 with computer use:

- Input: $3.00 per million tokens

- Output: $15.00 per million tokens

- Screenshot images: approximately 1,000 tokens per image (varies with resolution)
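To see where a figure like 1,000 tokens comes from: Anthropic's vision documentation gives a rule of thumb of roughly width × height / 750 tokens for an image that does not need to be downscaled. The sketch below applies only that approximation; the function name and the example resolutions are illustrative, and actual billing may differ once resizing is involved.

```python
def estimate_image_tokens(width_px: int, height_px: int) -> int:
    """Approximate token count for an image, using tokens ~= pixels / 750."""
    return round(width_px * height_px / 750)


INPUT_USD_PER_MTOK = 3.00  # input price from the list above

for width, height in [(1024, 768), (1280, 800)]:
    tokens = estimate_image_tokens(width, height)
    cost = tokens * INPUT_USD_PER_MTOK / 1_000_000
    print(f"{width}x{height}: ~{tokens} tokens, ~${cost:.4f} per screenshot")
```

A 1024x768 screenshot lands close to the 1,000-token figure; larger displays cost proportionally more, which is one reason to keep the sandbox resolution modest.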

A typical function-calling cycle uses 200-500 tokens (request + response). A typical computer use cycle uses 2,000-3,500 tokens (screenshot + reasoning + action).

If you need 20 cycles to complete a task:

- Function calling: 4,000-10,000 tokens. Cost: $0.045-$0.15.

- Computer use: 40,000-70,000 tokens. Cost: $0.30-$1.05.
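The dollar ranges depend on how tokens split between input and output, which isn't broken out here. A rough way to bracket them, assuming the per-cycle token counts and the $3 / $15 prices above: treat the cheapest case as every token billed at the input rate and the most expensive as every token billed at the output rate. The function name is illustrative.

```python
INPUT_USD_PER_MTOK = 3.00
OUTPUT_USD_PER_MTOK = 15.00


def cost_bounds(cycles: int, tokens_low: int, tokens_high: int) -> dict:
    """Bracket the cost of a task given a per-cycle token range.

    Lower bound: fewest tokens, all at the input price.
    Upper bound: most tokens, all at the output price.
    """
    total_low = cycles * tokens_low
    total_high = cycles * tokens_high
    return {
        "tokens": (total_low, total_high),
        "usd": (
            total_low * INPUT_USD_PER_MTOK / 1_000_000,
            total_high * OUTPUT_USD_PER_MTOK / 1_000_000,
        ),
    }


function_calling = cost_bounds(20, 200, 500)
computer_use = cost_bounds(20, 2_000, 3_500)
print("function calling:", function_calling)
print("computer use:", computer_use)
```

Real runs are input-heavy (screenshots and history are input tokens), so actual costs should sit well below the upper bound.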

But here's the catch: not all tasks need 20 cycles. A simple navigation task might need three cycles with computer use but would require you to build a six-function integration for function calling. If you value your time at $100/hour, building that integration costs $50-150. Now computer use is the economic win.

The real question is task complexity and reusability. If you're automating the same workflow 1,000 times, invest in function-calling APIs. The per-execution cost is lower. If you're doing it three times, or if the target system has no API, computer use pays for itself immediately.
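The trade-off can be written as a break-even calculation. This sketch plugs in the Scenario 2 figures with two assumptions of mine: a 5-hour build (the midpoint of 4-6 hours) and the $100/hour rate used above. It counts dollars only; latency, retries after failed runs, and ongoing maintenance are ignored, and those are likely to pull the practical crossover much earlier than the dollar figure suggests.

```python
import math


def break_even_runs(build_fc_usd, build_cu_usd, run_fc_usd, run_cu_usd):
    """Runs needed before function calling's cheaper executions repay its build cost.

    Returns 0 if function calling is no more expensive to build, and None if
    it never catches up (its per-run cost is not lower).
    """
    extra_build = build_fc_usd - build_cu_usd
    saved_per_run = run_cu_usd - run_fc_usd
    if extra_build <= 0:
        return 0
    if saved_per_run <= 0:
        return None
    return math.ceil(extra_build / saved_per_run)


HOURLY_RATE = 100.0                       # USD per hour, as in the text
build_function_calling = 5 * HOURLY_RATE  # assumed midpoint of 4-6 hours
build_computer_use = 0.25 * HOURLY_RATE   # 15 minutes

runs = break_even_runs(
    build_function_calling, build_computer_use,
    run_fc_usd=0.0010, run_cu_usd=0.0140,
)
print(f"Break-even after about {runs:,} runs")
```

On token and build costs alone the crossover sits around 36,500 runs, which is why the other factors (the 16.6x latency gap and the 87% first-attempt success rate in that scenario) carry so much weight in the decision.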

Developer experience: Speed to first working version

This is where I saw the biggest difference, and it's not reflected in benchmarks.

Setting up function calling:

- Define your schema. Decide what functions the model needs.

- Build or expose API endpoints for each function.

- Write OpenAPI specs (or equivalent) for those endpoints.

- Pass the spec to the model.

- Handle the model's function calls in your application.

- Deploy and test.

This takes a day or two for a non-trivial agent. I've done it dozens of times. It's straightforward, but it's work.

Setting up computer use:

- Write a prompt describing what the model should do.

- Grant Claude access to a desktop/browser environment.

- Call the API.

- Done.

I set this up in 15 minutes using Anthropic's quickstart template. No schema design. No API wiring. Just a prompt and the model doing what it sees.

The tradeoff is obvious: computer use trades predictability and performance for simplicity and flexibility. If you're prototyping or handling ad-hoc tasks, it's liberating. If you're shipping a mission-critical system, function calling's structure is usually better.

Real production constraints: When each breaks

Computer use has limitations that matter in production:

- Prompt injection risks are higher. If the model sees text on a screen and treats it as instructions, it can be misled. A webpage with hidden text saying "click the delete button" could trick the model. Anthropic has classifiers to detect this, but they're not bulletproof.

- Visual parsing fails on unusual layouts. If a website redesigns, or if a form uses non-standard UI patterns, Claude might misunderstand the layout.

- It's slow for high-frequency tasks. Don't use computer use for checking if an email arrived. Use polling + function calls.

- Scrolling and zooming are awkward. The model has trouble with smooth scrolling or pinch-zoom gestures. I had to explicitly tell Claude to "zoom to 50%" in tests.

Function calling has its own issues:

- You must anticipate all needed functions. If the model needs to do something you didn't define, it fails.

- APIs change. If the endpoint you defined gets deprecated, your agent breaks.

- Schema mismatch frustration. Even with Structured Outputs, I've seen models hallucinate fields or return null values when confused.

- Integration overhead. More moving parts means more things to monitor and debug.

When to use each: A decision tree

Choose function calling if:

- The task involves a well-defined set of actions (query a database, send an email, call an API).

- You need low latency (sub-second response).

- The workflow must run frequently (hundreds or thousands of times).

- You're willing to invest a day building and maintaining the integration.

- The target system exposes APIs.

Choose computer use if:

- The task involves navigating or reading visual information (websites, dashboards, forms).

- The target system has no API or integrating with the API is expensive.

- You need flexibility (the task changes, or you don't know exactly what actions are needed upfront).

- Latency is acceptable (2-5 seconds per task is fine).

- You're automating something infrequent or exploratory.

For teams using both: use function calling for the core business logic (your APIs) and computer use for glue tasks (filling out external forms, monitoring dashboards you don't control, scraping data from sites that block automation).
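The two checklists can be encoded as a small function. This is one possible ordering of the criteria, not a definitive rule: the `Task` fields, the function name, and the 100-runs-per-month cutoff for "frequent" are all assumptions made for the sketch.

```python
from dataclasses import dataclass


@dataclass
class Task:
    has_usable_api: bool           # target system exposes an API you can afford to integrate
    needs_subsecond_latency: bool
    runs_per_month: int
    actions_known_upfront: bool    # you can list the needed functions in advance


def choose_approach(task: Task, frequent_threshold: int = 100) -> str:
    """Pick an approach by walking the criteria in priority order."""
    # No API means function calling is not an option, whatever else is true.
    if not task.has_usable_api:
        return "computer use"
    # Tight latency or high volume favors the cheaper, faster path.
    if task.needs_subsecond_latency or task.runs_per_month >= frequent_threshold:
        return "function calling"
    # Infrequent and open-ended: flexibility outweighs per-run cost.
    if not task.actions_known_upfront:
        return "computer use"
    return "function calling"


print(choose_approach(Task(False, False, 5, True)))      # external form, no API
print(choose_approach(Task(True, True, 10_000, True)))   # core business logic
print(choose_approach(Task(True, False, 3, False)))      # exploratory one-off
```

A hybrid system would call this per subtask rather than per project, which matches the split between core logic and glue tasks described above.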

Market adoption and where this is headed

A year ago, computer use was a novelty. Now I see it in production at Replit (evaluating code submissions), DoorDash (automating internal workflows), and several financial services companies (monitoring trading systems they can't instrument directly).

OpenAI's Code Interpreter serves a similar purpose but with a different model: you give the model a Python environment and files. It can run code, read output, and iterate. This is powerful for data analysis and science work but less suited to general web automation. The two will coexist; they're not direct competitors.

The bigger trend is that autonomous agents need both. Computer use for flexibility and glue logic. Function calling for critical paths and performance. As LLMs get faster and cheaper, we'll see more agents deployed without heavy API integration, because the speed gap will narrow and the cost will matter less. But for the next two years, I expect function calling to remain the default for production systems and computer use to dominate prototyping and ad-hoc automation.

Pricing will also shift. If Anthropic drops computer use costs by 50%, it flips the math on many tasks. If they keep it high, function calling stays the preferred production approach.

The real win for developers is choice. A year ago, you either built integrations or didn't automate at all. Now you have a spectrum: lightweight screen-based automation, traditional API functions, or hybrid systems using both. Pick the right tool for your timeline and constraints, not the trendy one.