Complete Deepfake Detection Guide: AI Tools Setup

The digital landscape changed when a Hong Kong company lost $25 million to deepfake fraudsters in early 2024. An employee transferred the money after a video call in which the company's chief financial officer and other colleagues were AI-generated fakes. Their voices, facial expressions, and mannerisms were convincing enough to pass as the real executives. This incident wasn't isolated. As deepfake technology becomes more sophisticated, the need for reliable detection tools has never been more critical.

Research announced in August 2025 describes new "universal" AI detectors that reportedly reach 98% accuracy across different platforms and content types. Earlier tools worked only on specific deepfake types. These new systems are designed to flag both synthetic speech and facial manipulations.

This comprehensive guide will walk you through setting up and using these cutting-edge deepfake detection tools, from basic installation to advanced configuration and real-world implementation.

Understanding the 98% Accuracy Breakthrough

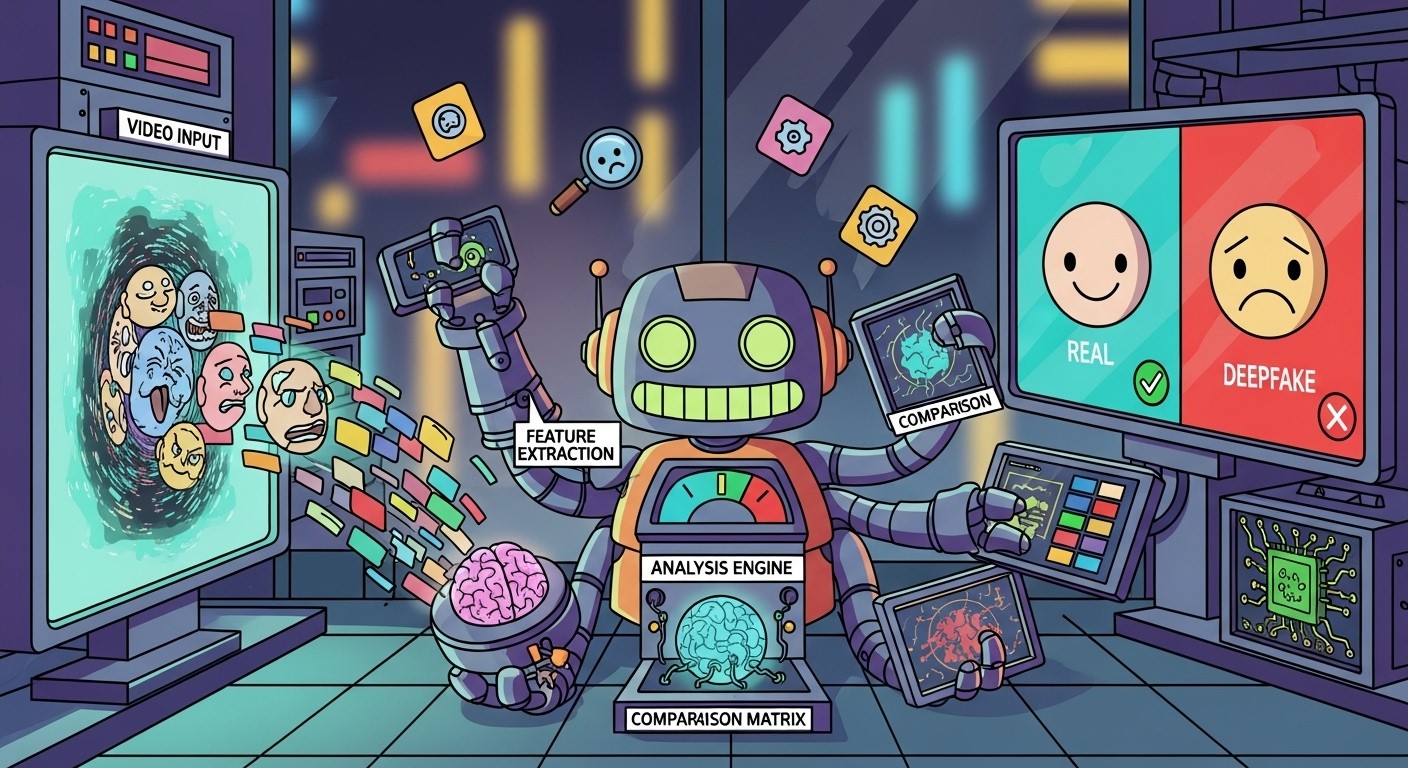

The latest generation of deepfake detectors represents a major leap in detection technology. Traditional tools struggled with cross-platform compatibility and often failed when deepfakes were created with different AI models or techniques. The new universal detectors aim to address this by analyzing multiple aspects of video and audio content simultaneously.
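One simple way to combine several signals into a single verdict is late fusion. In this approach, each analyzer (face, lip sync, voice) outputs its own probability that the content is synthetic, and the scores are merged with a weighted average. The sketch below is illustrative only. The modality names and weights are assumptions, and the universal detectors described here may combine signals very differently, for example inside a single network.

```python
def fuse_scores(modality_scores, weights):
    """Weighted late fusion of per-modality fake probabilities in [0, 1].

    Modalities that produced no score (None), such as voice on a silent clip,
    are skipped and the remaining weights are renormalized.
    """
    available = {m: s for m, s in modality_scores.items() if s is not None}
    if not available:
        raise ValueError("no modality produced a score")
    total_weight = sum(weights[m] for m in available)
    return sum(weights[m] * s for m, s in available.items()) / total_weight


# Hypothetical outputs from three separate analyzers for one clip
scores = {'face': 0.92, 'lip_sync': 0.81, 'voice': None}
weights = {'face': 0.5, 'lip_sync': 0.3, 'voice': 0.2}
print(f"Fused score: {fuse_scores(scores, weights):.3f}")
```

Missing modalities are skipped rather than treated as 0. This keeps a silent video from being pulled toward "authentic" just because it has no audio to analyze.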

These systems examine micro-expressions that are nearly impossible for AI to replicate perfectly. Even the most sophisticated deepfakes often miss subtle details like natural eye movement patterns or the way skin texture changes across facial expressions. The detectors also analyze audio-visual synchronization. They look for mismatches between lip movements and speech, since genuine speakers keep the two tightly aligned.
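The audio-visual synchronization check can be illustrated with a classic heuristic. In genuine speech, mouth opening and audio loudness rise and fall together, so their correlation should peak near zero lag. This sketch assumes you have already extracted two per-frame signals: mouth openness (for example from facial landmarks) and audio energy resampled to the video frame rate. Both inputs and the function names are hypothetical. Learned models typically replace a hand-coded rule like this in real detectors.

```python
import math


def _pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def av_sync_offset(mouth_openness, audio_energy, max_lag=5):
    """Return (best_lag, correlation) between two per-frame signals.

    A positive lag means the audio trails the mouth movement by that many frames.
    """
    n = min(len(mouth_openness), len(audio_energy))
    best_lag, best_corr = 0, -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            # Pair mouth[i] with audio[i + lag]
            xs, ys = mouth_openness[:n - lag], audio_energy[lag:n]
        else:
            # Pair mouth[i + |lag|] with audio[i]
            xs, ys = mouth_openness[-lag:n], audio_energy[:n + lag]
        if len(xs) < 2:
            continue
        corr = _pearson(xs, ys)
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag, best_corr
```

A low peak correlation, or a peak several frames away from zero, is a hint worth investigating rather than proof of manipulation. Poor recordings and dubbed audio can produce the same pattern.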

The breakthrough comes from combining convolutional neural networks with advanced pattern recognition algorithms trained on massive datasets containing both authentic and synthetic content. This training lets the systems identify artifacts that earlier generations of deepfakes commonly produced, while also generalizing to content made with newer generation techniques.

Setting Up Your Detection Environment

Before diving into specific tools, you'll need to prepare your development environment. Most modern deepfake detection tools require Python 3.8 or higher, along with specific machine learning libraries. The versions pinned below (TensorFlow 2.13, PyTorch 2.0.1) support Python 3.8 through 3.11.
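A quick interpreter check you can run before creating the environment. The accepted range is an assumption tied to the pinned TensorFlow 2.13 and PyTorch 2.0.1 releases. Widen it if you change those pins.

```python
import sys

# Assumed range for the pinned TensorFlow 2.13 / PyTorch 2.0.1 wheels
SUPPORTED_MIN = (3, 8)
SUPPORTED_MAX = (3, 11)


def python_version_supported(version_info=sys.version_info):
    """True if the interpreter's major.minor version falls in the supported range."""
    major_minor = tuple(version_info[:2])
    return SUPPORTED_MIN <= major_minor <= SUPPORTED_MAX


if __name__ == '__main__':
    if python_version_supported():
        print("Python version OK")
    else:
        print(f"Warning: Python {sys.version.split()[0]} is outside the 3.8 to 3.11 range")
```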

Start by creating a dedicated virtual environment for your deepfake detection setup:

```bash
python -m venv deepfake_detection
source deepfake_detection/bin/activate  # On Windows: deepfake_detection\Scripts\activate
```

Install the essential dependencies that most detection tools require:

```bash
pip install opencv-python==4.8.1.78
pip install tensorflow==2.13.0
pip install torch==2.0.1
pip install torchvision==0.15.2
pip install numpy==1.24.3
pip install scikit-learn==1.3.0
pip install pillow==10.0.0
```

For GPU acceleration, which significantly improves processing speed, install the CUDA-enabled versions:

```bash
pip install torch==2.0.1 torchvision==0.15.2 --index-url https://download.pytorch.org/whl/cu118
```

Verify your installation by checking GPU availability:

```python
import torch

print(f"CUDA available: {torch.cuda.is_available()}")
print(f"GPU count: {torch.cuda.device_count()}")

if torch.cuda.is_available():
    print(f"GPU name: {torch.cuda.get_device_name(0)}")
```

Installing and Configuring Detection Tools
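Before adding any of the tools below, it can help to confirm that the pinned dependencies from the environment setup actually resolved to those versions. A later `pip install -r requirements.txt` may upgrade or downgrade them. The sketch below uses the standard library's `importlib.metadata`. `check_pins` is a hypothetical helper, and the dictionary simply mirrors the pins above.

```python
from importlib.metadata import PackageNotFoundError, version

# Pins from the environment setup step (pip distribution names)
PINNED = {
    'opencv-python': '4.8.1.78',
    'tensorflow': '2.13.0',
    'torch': '2.0.1',
    'torchvision': '0.15.2',
    'numpy': '1.24.3',
    'scikit-learn': '1.3.0',
    'pillow': '10.0.0',
}


def check_pins(pins, get_version=version):
    """Return {package: problem} for packages that are missing or not at the pinned version."""
    problems = {}
    for package, expected in pins.items():
        try:
            installed = get_version(package)
        except PackageNotFoundError:
            problems[package] = 'not installed'
            continue
        # CUDA builds of PyTorch report a local suffix such as '2.0.1+cu118'
        if installed.split('+')[0] != expected:
            problems[package] = f'installed {installed}, expected {expected}'
    return problems


if __name__ == '__main__':
    for package, problem in check_pins(PINNED).items():
        print(f"{package}: {problem}")
```

Run it inside the activated virtual environment. Empty output means every pin matched.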

Sentinel Universal Detector

Sentinel is one of the more advanced universal deepfake detectors available and claims 98% accuracy across platforms. Clone its GitHub repository and install the requirements:

```bash
git clone https://github.com/sentinel-ai/deepfake-detector.git
cd deepfake-detector
pip install -r requirements.txt
```

Configure Sentinel by creating a configuration file, config.yaml:

```yaml
model:
  path: "models/sentinel_universal_v2.1.pth"
  threshold: 0.85
  batch_size: 16

detection:
  enable_audio: true
  enable_video: true
  min_face_size: 64
  confidence_threshold: 0.90

output:
  save_results: true
  output_dir: "detection_results"
  generate_heatmaps: true
```

Download the pre-trained model weights:

```bash
wget https://releases.sentinel-ai.com/models/sentinel_universal_v2.1.pth -P models/
```

HyperVerge Detection Suite

HyperVerge offers a comprehensive suite of detection tools with specialized modules for different types of synthetic content. Install its Python package with pip:

```bash
pip install hyperverge-detect==3.2.1
```

Initialize the HyperVerge environment:

```python
import os

from hyperverge_detect import DeepfakeDetector

# Set up API credentials
os.environ['HYPERVERGE_API_KEY'] = 'your_api_key_here'
os.environ['HYPERVERGE_API_SECRET'] = 'your_api_secret_here'

# Initialize detector
detector = DeepfakeDetector(
    model_version='universal_v3',
    detection_threshold=0.88,
    enable_preprocessing=True
)
```
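Hard-coding keys in the script is convenient for a first test, but it puts secrets into source files. A common alternative is to export `HYPERVERGE_API_KEY` and `HYPERVERGE_API_SECRET` in your shell or CI secret store and check for them at startup. The `require_env` helper below is a hypothetical sketch. It assumes, as the snippet above implies, that the HyperVerge client reads these variables from the environment.

```python
import os


def require_env(*names, environ=os.environ):
    """Return the values of the given environment variables, failing fast if any are unset or empty."""
    missing = [name for name in names if not environ.get(name)]
    if missing:
        raise RuntimeError(f"Missing required environment variables: {', '.join(missing)}")
    return tuple(environ[name] for name in names)


# Set these in your shell or CI secrets instead of committing them, e.g.:
#   export HYPERVERGE_API_KEY=<your key>
#   export HYPERVERGE_API_SECRET=<your secret>
if __name__ == '__main__':
    try:
        api_key, api_secret = require_env('HYPERVERGE_API_KEY', 'HYPERVERGE_API_SECRET')
        print("HyperVerge credentials found")
    except RuntimeError as err:
        print(err)
```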

MIT's Detect DeepFakes Tool

MIT's Media Lab has released an open-source tool that focuses on pixel-level inconsistency analysis. Clone and set up the repository:

```bash
git clone https://github.com/mit-media-lab/detect-deepfakes.git
cd detect-deepfakes
pip install -e .
```

The MIT tool requires additional setup for its specialized analysis modules:

```bash
# Download pre-trained weights for pixel and lighting analysis
python scripts/download_models.py --model pixel_inconsistency_v2
python scripts/download_models.py --model lighting_analysis_v1

# Set up the analysis environment
export DETECT_DEEPFAKES_HOME=$(pwd)
export PYTHONPATH="${PYTHONPATH}:${DETECT_DEEPFAKES_HOME}"
```

Implementing Basic Detection Workflows
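The scripts in this section call the Sentinel detector's `analyze_frame()` and read `is_deepfake`, `confidence`, and `detection_type` from each result. If you may later switch to HyperVerge or the MIT tool, whose interfaces may differ, one option is to code frame loops against a small interface and wrap each tool in an adapter. The sketch below uses `typing.Protocol` (Python 3.8+). `FrameDetector`, `ScoreFunctionAdapter`, and `flagged_frames` are hypothetical names, not part of any of the tools.

```python
from typing import Any, Callable, Dict, List, Protocol


class FrameDetector(Protocol):
    """Anything with the analyze_frame() shape the scripts in this guide rely on."""

    def analyze_frame(self, frame: Any) -> Dict[str, Any]:
        ...


class ScoreFunctionAdapter:
    """Hypothetical adapter: wraps a callable that returns a fake probability in [0, 1]."""

    def __init__(self, score_fn: Callable[[Any], float], threshold: float = 0.85,
                 detection_type: str = 'unknown'):
        self.score_fn = score_fn
        self.threshold = threshold
        self.detection_type = detection_type

    def analyze_frame(self, frame: Any) -> Dict[str, Any]:
        score = float(self.score_fn(frame))
        # Same keys that detect_single.py reads from SentinelDetector results
        return {
            'is_deepfake': score >= self.threshold,
            'confidence': score,
            'detection_type': self.detection_type,
        }


def flagged_frames(detector: FrameDetector, frames) -> List[int]:
    """Indices of the frames a detector flags, whichever tool backs it."""
    return [i for i, frame in enumerate(frames) if detector.analyze_frame(frame)['is_deepfake']]
```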

Processing Single Video Files

Start with a basic detection script that processes individual video files. Create detect_single.py:

```python
import argparse
import json

import cv2
import numpy as np

from sentinel_detector import SentinelDetector


def detect_deepfake(video_path, output_path):
    # Initialize detector
    detector = SentinelDetector(
        model_path='models/sentinel_universal_v2.1.pth',
        threshold=0.85
    )

    # Open video file
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise OSError(f"Could not open video: {video_path}")

    frame_count = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    # Keep FPS as a float (e.g. 29.97) so timestamps stay accurate;
    # fall back to 30 if the container does not report it (returns 0)
    fps = cap.get(cv2.CAP_PROP_FPS) or 30.0

    results = {
        'filename': video_path,
        'total_frames': frame_count,
        'fps': fps,
        'detections': []
    }

    frame_idx = 0
    while True:
        ret, frame = cap.read()
        if not ret:
            break

        # Process every 30th frame for efficiency
        if frame_idx % 30 == 0:
            detection_result = detector.analyze_frame(frame)
            if detection_result['is_deepfake']:
                results['detections'].append({
                    'frame': frame_idx,
                    'timestamp': frame_idx / fps,
                    # float() keeps the value JSON-serializable if it is a NumPy scalar
                    'confidence': float(detection_result['confidence']),
                    'type': detection_result['detection_type']
                })

        frame_idx += 1

    cap.release()

    # Calculate overall assessment: any flagged frame makes the video at least SUSPICIOUS
    if results['detections']:
        avg_confidence = np.mean([d['confidence'] for d in results['detections']])
        results['overall_assessment'] = 'DEEPFAKE' if avg_confidence > 0.85 else 'SUSPICIOUS'
    else:
        results['overall_assessment'] = 'AUTHENTIC'

    # Save results
    with open(output_path, 'w') as f:
        json.dump(results, f, indent=2)

    return results


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--input', required=True, help='Input video file')
    parser.add_argument('--output', required=True, help='Output JSON file')
    args = parser.parse_args()

    results = detect_deepfake(args.input, args.output)
    print(f"Analysis complete. Results saved to {args.output}")
```

Run the detection script:

```bash
python detect_single.py --input suspicious_video.mp4 --output results.json
```

Batch Processing Multiple Files

For processing multiple videos, create a batch processing script batch_detect.py:

```python
import argparse
import glob
import json
import os
from concurrent.futures import ThreadPoolExecutor

from detect_single import detect_deepfake


def process_directory(input_dir, output_dir, max_workers=4):
    # Find all video files, including upper-case extensions
    video_extensions = ['*.mp4', '*.avi', '*.mov', '*.mkv', '*.webm']
    video_files = []
    for ext in video_extensions:
        video_files.extend(glob.glob(os.path.join(input_dir, ext)))
        video_files.extend(glob.glob(os.path.join(input_dir, ext.upper())))
    # On case-insensitive filesystems both patterns can match the same file
    video_files = sorted(set(video_files))

    print(f"Found {len(video_files)} video files to process")

    # Ensure output directory exists
    os.makedirs(output_dir, exist_ok=True)

    def process_single_file(video_path):
        filename = os.path.basename(video_path)
        output_filename = os.path.splitext(filename)[0] + '_detection.json'
        output_path = os.path.join(output_dir, output_filename)
        try:
            results = detect_deepfake(video_path, output_path)
            print(f"✓ Processed {filename}: {results['overall_assessment']}")
            return results
        except Exception as e:
            print(f"✗ Error processing {filename}: {e}")
            return None

    # Process files in parallel. detect_deepfake() loads its own model,
    # so up to max_workers model copies can be in memory at once.
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        results = list(executor.map(process_single_file, video_files))

    # Generate summary report
    generate_summary_report(results, output_dir)


def generate_summary_report(results, output_dir):
    valid_results = [r for r in results if r is not None]
    summary = {
        'total_files': len(valid_results),
        'failed': len(results) - len(valid_results),
        'authentic': len([r for r in valid_results if r['overall_assessment'] == 'AUTHENTIC']),
        'suspicious': len([r for r in valid_results if r['overall_assessment'] == 'SUSPICIOUS']),
        'deepfake': len([r for r in valid_results if r['overall_assessment'] == 'DEEPFAKE'])
    }

    with open(os.path.join(output_dir, 'summary_report.json'), 'w') as f:
        json.dump(summary, f, indent=2)

    print("\n=== DETECTION SUMMARY ===")
    print(f"Total files processed: {summary['total_files']}")
    print(f"Failed files: {summary['failed']}")
    print(f"Authentic videos: {summary['authentic']}")
    print(f"Suspicious videos: {summary['suspicious']}")
    print(f"Detected deepfakes: {summary['deepfake']}")


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--input-dir', required=True, help='Directory containing videos')
    parser.add_argument('--output-dir', required=True, help='Directory for JSON results')
    parser.add_argument('--workers', type=int, default=4, help='Parallel workers')
    args = parser.parse_args()
    process_directory(args.input_dir, args.output_dir, args.workers)
```

Advanced Configuration and Optimization
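Threshold choices are easier to reason about with numbers from your own data. Given detector scores for a small set of clips you have labeled yourself, the sketch below computes the true positive rate (deepfakes caught) and the false positive rate (authentic clips flagged) at each candidate threshold. The scores and labels are made up for illustration, and `rates_at_threshold` is a hypothetical helper.

```python
def rates_at_threshold(scores, labels, threshold):
    """Return (true_positive_rate, false_positive_rate) at one threshold.

    labels: 1 for a known deepfake, 0 for authentic content.
    A clip counts as flagged when its score is >= threshold.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must be the same length")
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need both deepfake (1) and authentic (0) examples")
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / positives, fp / negatives


# Made-up validation scores; replace with detector output on your own labeled clips
scores = [0.95, 0.88, 0.76, 0.91, 0.40, 0.72, 0.15, 0.86]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
for threshold in (0.70, 0.85, 0.90):
    tpr, fpr = rates_at_threshold(scores, labels, threshold)
    print(f"threshold {threshold:.2f}: TPR {tpr:.2f}, FPR {fpr:.2f}")
```

Lowering the threshold never lowers either rate. That is the trade-off between the security, media, and social media configurations that follow. A reasonable starting point is the lowest threshold whose false positive rate you can afford to review.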

Fine-tuning Detection Thresholds

Different use cases require different sensitivity levels. For high-security applications, you might want to lower the threshold to catch more potential deepfakes, accepting higher false positive rates:

```python
# High security configuration
security_config = {
    'threshold': 0.70,  # Lower threshold = more sensitive
    'multi_model_voting': True,
    'require_consensus': 2,  # Require 2 out of 3 models to agree
    'enable_temporal_analysis': True
}

# Media verification configuration
media_config = {
    'threshold': 0.85,  # Balanced threshold
    'enable_metadata_check': True,
    'check_compression_artifacts': True,
    'analyze_editing_patterns': True
}

# Social media monitoring configuration
social_config = {
    'threshold': 0.90,  # Higher threshold = fewer false positives
    'fast_processing': True,
    'skip_audio_analysis': False,
    'batch_size': 32
}
```

Implementing Real-time Processing

For real-time applications like video conferencing or live streams, implement a streaming detection pipeline:

```python
import queue
import threading

from sentinel_detector import SentinelDetector


class RealTimeDetector:
    def __init__(self, model_path, buffer_size=30):
        self.detector = SentinelDetector(model_path)
        self.frame_buffer = queue.Queue(maxsize=buffer_size)
        self.results_queue = queue.Queue()
        self.processing = False
        self.worker_thread = None

    def start_processing(self):
        self.processing = True
        self.worker_thread = threading.Thread(target=self._process_frames, daemon=True)
        self.worker_thread.start()

    def stop_processing(self):
        self.processing = False
        if self.worker_thread is not None:
            self.worker_thread.join()

    def _process_frames(self):
        while self.processing:
            # Block briefly instead of polling empty(), so the worker
            # does not spin a CPU core while no frames are queued
            try:
                frame_data = self.frame_buffer.get(timeout=0.1)
            except queue.Empty:
                continue
            result = self.detector.analyze_frame(frame_data['frame'])
            self.results_queue.put({
                'timestamp': frame_data['timestamp'],
                'result': result
            })

    def add_frame(self, frame, timestamp):
        # Drop the frame when the buffer is full so a slow detector
        # never stalls the capture loop
        try:
            self.frame_buffer.put_nowait({'frame': frame, 'timestamp': timestamp})
        except queue.Full:
            pass

    def get_latest_result(self):
        # Drain the queue and return only the newest result (None if empty)
        latest = None
        while True:
            try:
                latest = self.results_queue.get_nowait()
            except queue.Empty:
                return latest
```

Performance Optimization Strategies

Modern deepfake detection can be computationally intensive. Several strategies can significantly improve performance:

Enable GPU acceleration for supported models:

```python
import torch
import torch.nn as nn

# Check for GPU availability and configure
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(f"Using device: {device}")

# For multi-GPU setups. nn.DataParallel wraps a torch.nn.Module, so `model`
# stands for the detector's underlying PyTorch network, loaded elsewhere.
if torch.cuda.device_count() > 1:
    print(f"Using {torch.cuda.device_count()} GPUs")
    # Enable multi-GPU processing
    model = nn.DataParallel(model)

model = model.to(device)
```

Implement intelligent frame sampling to reduce processing load:

```python
import cv2
import numpy as np


def smart_frame_sampling(video_path, target_frames=100, edge_frames=30):
    """Return sorted frame indices, sampled more densely at the start and end."""
    cap = cv2.VideoCapture(video_path)
    total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    cap.release()  # only the frame count is needed here

    if total_frames <= target_frames:
        # Process all frames for short videos
        return list(range(total_frames))

    # Smart sampling: more frames from beginning and end.
    # Cap the edge windows so they never use more than two thirds of the budget.
    edge = min(edge_frames, target_frames // 3)
    start_frames = list(range(0, edge))
    end_frames = list(range(total_frames - edge, total_frames))

    # Spread the remaining budget evenly across the middle of the video
    n_middle = target_frames - 2 * edge
    middle_frames = np.linspace(edge, total_frames - edge - 1, n_middle).astype(int).tolist()

    return sorted(set(start_frames + middle_frames + end_frames))
```

Troubleshooting Common Issues

Memory Management Problems

Large video files can cause memory overflow. Implement chunked processing:

```python
import gc

import cv2


def process_large_video(video_path, detector, chunk_size=1000):
    cap = cv2.VideoCapture(video_path)
    total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    results = []

    for start_frame in range(0, total_frames, chunk_size):
        end_frame = min(start_frame + chunk_size, total_frames)

        # Process chunk. Frames are read sequentially, so no seek is needed.
        chunk_results = []
        for _ in range(start_frame, end_frame):
            ret, frame = cap.read()
            if not ret:
                break
            chunk_results.append(detector.analyze_frame(frame))

        results.extend(chunk_results)

        # Trigger garbage collection between chunks
        del chunk_results
        gc.collect()

        if not ret:
            break  # stream ended early; frame-count metadata can be inaccurate

    cap.release()
    return results
```

Model Loading Errors

Common model loading issues and solutions:

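```python
import os
import pickle

import torch

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')


def safe_model_loading(model_path, fallback_path='models/default_model.pth'):
    if not os.path.exists(model_path):
        print(f"Model file not found: {model_path}")
        print("Downloading default model...")
        download_default_model()  # placeholder helper: fetches weights to fallback_path
        model_path = fallback_path

    try:
        # map_location lets checkpoints saved on a GPU load on CPU-only machines.
        # weights_only=True only unpickles tensors and plain containers, which is
        # the safer choice for files downloaded from the internet.
        return torch.load(model_path, map_location=device, weights_only=True)
    except pickle.UnpicklingError:
        # The checkpoint pickles full Python objects (e.g. a whole nn.Module).
        # Only load it without restrictions if you trust its source.
        return torch.load(model_path, map_location=device, weights_only=False)
```

Performance Bottlenecks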

Monitor and optimize performance with profiling:

```python
import time

import psutil

from detect_single import detect_deepfake


def profile_detection(video_path):
    process = psutil.Process()
    start_time = time.perf_counter()
    start_memory = process.memory_info().rss

    # Run detection
    results = detect_deepfake(video_path, 'temp_results.json')

    elapsed = time.perf_counter() - start_time
    end_memory = process.memory_info().rss

    performance_stats = {
        'processing_time': elapsed,
        # Change in resident memory, not peak usage
        'memory_used': (end_memory - start_memory) / 1024 / 1024,  # MB
        # Video frames covered per second (only every 30th frame is analyzed)
        'frames_per_second': results['total_frames'] / elapsed
    }

    print("Performance Stats:")
    print(f"  Processing time: {performance_stats['processing_time']:.2f}s")
    print(f"  Memory used: {performance_stats['memory_used']:.2f} MB")
    print(f"  FPS: {performance_stats['frames_per_second']:.2f}")

    return performance_stats
```

Integration with Existing Workflows

API Development

Create a REST API for deepfake detection services:

```python
import os
import tempfile

from flask import Flask, jsonify, request

from detect_single import detect_deepfake
from sentinel_detector import SentinelDetector

app = Flask(__name__)
# Loaded once at startup and reported by /health; detect_deepfake()
# currently loads its own detector instance on each call
detector = SentinelDetector('models/sentinel_universal_v2.1.pth')


@app.route('/detect', methods=['POST'])
def detect_deepfake_api():
    if 'video' not in request.files:
        return jsonify({'error': 'No video file provided'}), 400

    video_file = request.files['video']

    # Save the upload to a temporary file, closed before analysis
    # so it can be reopened on every platform
    with tempfile.NamedTemporaryFile(delete=False, suffix='.mp4') as temp_file:
        video_file.save(temp_file)
    # Per-request results path, so concurrent requests don't overwrite each other
    results_path = temp_file.name + '.json'

    try:
        results = detect_deepfake(temp_file.name, results_path)
        return jsonify(results)
    finally:
        os.unlink(temp_file.name)
        if os.path.exists(results_path):
            os.unlink(results_path)


@app.route('/health', methods=['GET'])
def health_check():
    return jsonify({'status': 'healthy', 'model_loaded': detector.is_loaded()})


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=5000, debug=False)
```

Automated Monitoring Systems

The 98% accuracy breakthrough makes automated content monitoring more viable for large-scale platforms. However, implementation requires careful consideration of false positives. A 2% error rate means about 20,000 misclassified videos for every million processed. Since most uploads are authentic, most of those errors would be legitimate videos. On platforms processing millions of videos daily, tens of thousands of content creators could be incorrectly flagged every day.
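The false positive concern becomes concrete with a base-rate calculation. This sketch assumes, purely for illustration, 1,000,000 videos a day, a prevalence of 1 deepfake per 1,000 videos, and a detector that is 98% accurate on both fakes and authentic videos. A single accuracy figure does not guarantee that split.

```python
def expected_flags(total_videos, prevalence, sensitivity, specificity):
    """Expected true positives, false positives, and precision for one screening run."""
    fakes = total_videos * prevalence
    authentic = total_videos - fakes
    true_positives = fakes * sensitivity            # deepfakes correctly flagged
    false_positives = authentic * (1 - specificity)  # authentic videos wrongly flagged
    precision = true_positives / (true_positives + false_positives)
    return true_positives, false_positives, precision


tp, fp, precision = expected_flags(1_000_000, prevalence=0.001,
                                   sensitivity=0.98, specificity=0.98)
print(f"true positives: {tp:,.0f}, false positives: {fp:,.0f}, precision: {precision:.1%}")
```

Under these assumptions the detector catches about 980 of the 1,000 deepfakes but also flags 19,980 authentic videos. That means fewer than 1 in 20 flags (about 4.7%) is a real deepfake, and the ratio gets worse the rarer deepfakes are in the stream.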

This detection capability aligns well with broader AI automation workflows that many organizations are implementing to streamline content moderation and security processes.
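In a moderation pipeline, one way to respect the sensitivity trade-off is to avoid acting on a single cut-off. Scores above a high threshold are actioned automatically, an intermediate band goes to human reviewers, and the rest pass. The thresholds and action names in this sketch are hypothetical placeholders, not values recommended by any of the tools above.

```python
def route(score, review_threshold=0.70, block_threshold=0.95):
    """Map a fake probability in [0, 1] to a moderation action.

    Scores in the band between the two thresholds are sent to a human
    reviewer instead of being actioned automatically.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if score >= block_threshold:
        return 'block'
    if score >= review_threshold:
        return 'human_review'
    return 'allow'


# Hypothetical scores for three uploads
uploads = {'clip_a.mp4': 0.12, 'clip_b.mp4': 0.81, 'clip_c.mp4': 0.97}
for name, score in uploads.items():
    print(f"{name}: {route(score)}")
```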

The technology represents a significant advancement in combating digital deception, but successful deployment requires balancing detection sensitivity with practical considerations of scale, accuracy, and user experience. As these tools continue to evolve, they promise to become essential components in maintaining digital trust and authenticity across platforms and applications.