Oracle vs AWS AI Cloud Battle: $20B Meta Deal Analysis

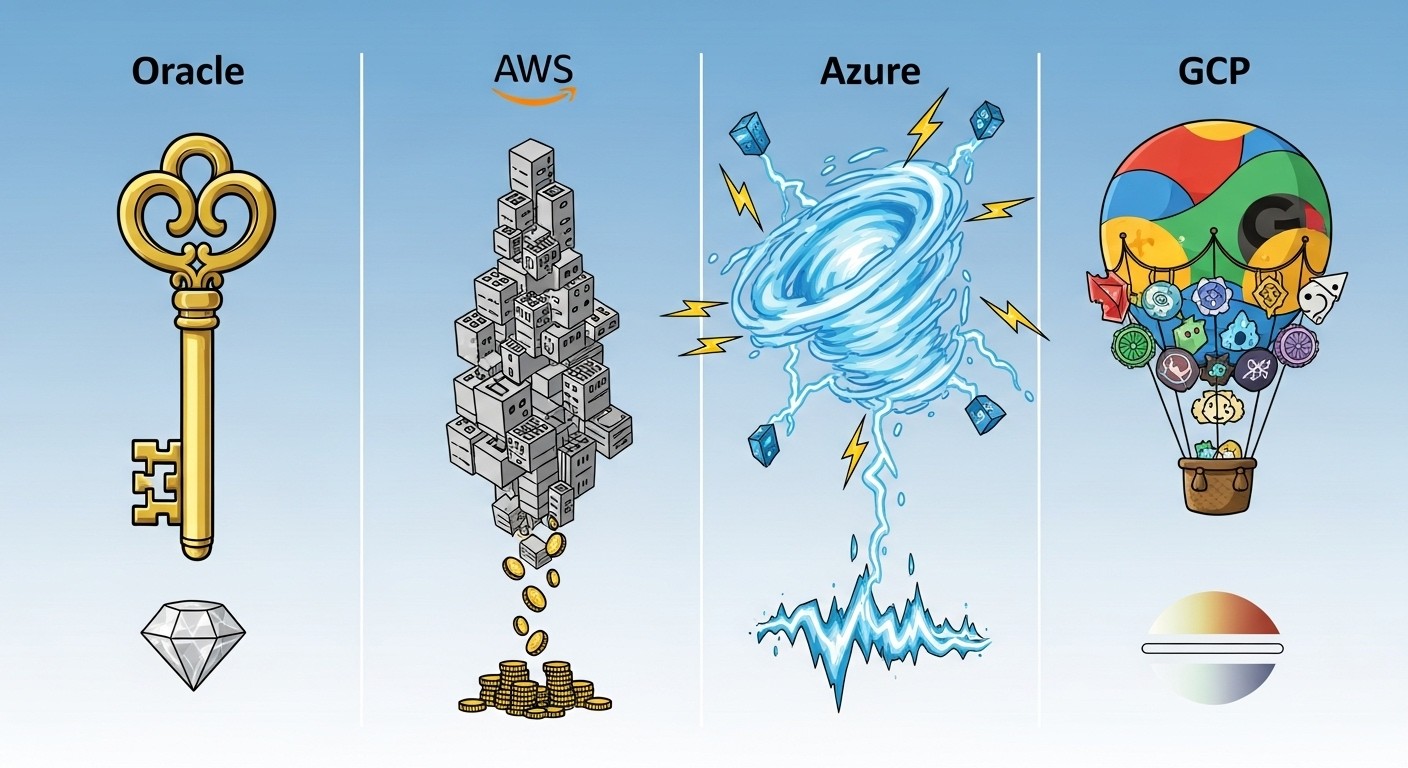

Meta's decision to ink a $20 billion multi-year cloud deal with Oracle has sent shockwaves through the AI infrastructure market, challenging the dominance of traditional cloud giants like AWS, Microsoft Azure, and Google Cloud Platform. This massive commitment represents one of the largest AI infrastructure deals in history and signals a fundamental shift in how companies evaluate cloud providers for large-scale AI workloads.

The deal, announced in September 2025, will provide Meta with Oracle's cloud infrastructure for AI model training and deployment across multiple data centers. But what makes Oracle's offering compelling enough to secure such a massive contract over established competitors? The answer lies in a combination of specialized AI-optimized hardware configurations, competitive pricing models, and Oracle's aggressive push into the AI infrastructure space.

Oracle's AI Infrastructure Advantage

Oracle Cloud Infrastructure (OCI) has positioned itself as a specialized player in the AI cloud market through several key differentiators. The platform offers access to AI clusters with upwards of 100,000 NVIDIA graphics processing units, integrated with NVIDIA's switches and SHARP technology. SHARP (Scalable Hierarchical Aggregation and Reduction Protocol) reduces the amount of data that GPUs must exchange over the network to coordinate their work, leaving more bandwidth for other workloads.
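
To make the bandwidth argument concrete, the sketch below compares the bytes each GPU sends during one gradient all-reduce under two simplified traffic models: a classic ring all-reduce, where each GPU sends 2(N-1)/N times the buffer size, and an idealized in-network reduction in the spirit of SHARP, where each GPU sends its buffer to the switch once. The function names and the 1 GB / 1,024-GPU example are illustrative assumptions, not NVIDIA's implementation or measured figures.

```python
def ring_allreduce_bytes_sent(message_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in a ring all-reduce: 2 * (N - 1) / N * S."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return 2 * (num_gpus - 1) / num_gpus * message_bytes


def in_network_bytes_sent(message_bytes: float) -> float:
    """Idealized in-network reduction: each GPU sends its buffer once and
    the switch fabric aggregates it (simplified model, assumption)."""
    return message_bytes


# Hypothetical example: a 1 GB gradient buffer across 1,024 GPUs.
gradient_bytes = 1e9
gpus = 1024
ring = ring_allreduce_bytes_sent(gradient_bytes, gpus)
offloaded = in_network_bytes_sent(gradient_bytes)
print(f"ring all-reduce: {ring / 1e9:.3f} GB sent per GPU")
print(f"in-network:      {offloaded / 1e9:.3f} GB sent per GPU")
print(f"reduction:       {1 - offloaded / ring:.1%}")
```

Under this model the in-network approach roughly halves the data each GPU injects into the network at large GPU counts. Real-world gains depend on topology, message size, and switch capabilities.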

Oracle's approach to AI infrastructure centers on bare metal instances and dedicated tenancy models that eliminate the "noisy neighbor" problem common in shared cloud environments. Its BM.GPU4.8 shape provides direct access to 8 NVIDIA A100 GPUs with 40GB of memory each, while the BM.GPU.H100.8 shape provides 8 H100 GPUs with 80GB each, connected via NVLink for high-bandwidth inter-GPU communication. The cluster network uses RDMA over Converged Ethernet (RoCE) v2 with 200 Gbps of bandwidth per GPU on the A100 shape, enabling efficient distributed training across thousands of nodes.

The company's recent infrastructure investments have been substantial. Oracle expects to increase its capital expenditures by 65% during the current fiscal year to $35 billion, primarily focused on building AI-optimized data centers. This investment strategy has already paid dividends - Oracle's total remaining performance obligations jumped 359% year-over-year to $455 billion, indicating strong future revenue commitments from customers beyond Meta.

AWS: The Established Leader Under Pressure

Amazon Web Services maintains the largest market share in cloud computing with roughly 32% of the global market, but its AI infrastructure offerings face increasing competition. AWS provides AI training through EC2 P4d instances powered by NVIDIA A100 GPUs, offering 8 GPUs per instance with 320GB of GPU memory in total (40GB per GPU). The P4d instances provide 400 Gbps of network bandwidth per instance and are optimized for distributed machine learning workloads.

AWS's strength lies in its comprehensive ecosystem of AI services. SageMaker provides end-to-end machine learning workflows, while services like Rekognition, Comprehend, and Textract offer pre-built AI capabilities. The platform supports custom silicon through AWS Inferentia and Trainium chips, designed specifically for AI inference and training workloads respectively.

However, AWS pricing for large-scale AI workloads can be prohibitively expensive. P4d.24xlarge instances cost approximately $32.77 per hour on-demand, translating to roughly $23,900 per month, or about $287,000 per year, for a single instance running continuously. Reserved instances can reduce costs by up to 60%, but still require significant upfront commitments. For Meta's scale of operations, which spans thousands of such instances, these costs can quickly escalate into hundreds of millions annually.
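
The hourly-to-monthly conversion is easy to check with a few lines of Python. This is a minimal sketch that assumes 730 hours in an average month and uses the $32.77 rate and 60% discount quoted above; `continuous_cost` is a hypothetical helper, not part of any AWS SDK.

```python
HOURS_PER_YEAR = 8_760
HOURS_PER_MONTH = HOURS_PER_YEAR / 12  # 730 hours in an average month


def continuous_cost(hourly_rate: float, discount: float = 0.0) -> dict:
    """Cost of running one instance non-stop at a given hourly rate.

    `discount` is a fraction in [0, 1), e.g. 0.60 for a 60% commitment discount.
    """
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    effective = hourly_rate * (1.0 - discount)
    return {
        "hourly": effective,
        "monthly": effective * HOURS_PER_MONTH,
        "annual": effective * HOURS_PER_YEAR,
    }


on_demand = continuous_cost(32.77)
reserved = continuous_cost(32.77, discount=0.60)
print(f"on-demand: ${on_demand['monthly']:,.0f}/month, ${on_demand['annual']:,.0f}/year")
print(f"60% off:   ${reserved['monthly']:,.0f}/month, ${reserved['annual']:,.0f}/year")
```

For a single instance, the on-demand rate works out to roughly $23,900 per month and $287,000 per year, so the hundreds-of-millions figure only arises across fleets of thousands of instances.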

Microsoft Azure's Enterprise Focus

Microsoft Azure has carved out a strong position in the enterprise AI market, particularly through its partnership with OpenAI. The Azure OpenAI Service provides access to GPT-4, DALL-E, and other frontier models through managed APIs, making it attractive for businesses seeking turnkey AI solutions rather than building from scratch.

Azure's AI infrastructure centers on NCv3, NCasT4_v3, and ND-series virtual machines. The ND A100 v4 instances offer 8 NVIDIA A100 GPUs, each with a dedicated 200 Gbps InfiniBand link, and are specifically designed for distributed training workloads. Microsoft has also invested heavily in custom silicon through its Azure Maia AI Accelerator, targeting improved performance per dollar for specific AI workloads.

The company's competitive advantage lies in integration with the broader Microsoft ecosystem. Organizations already using Office 365, Teams, and other Microsoft products can seamlessly integrate AI capabilities through Azure. The Azure Machine Learning platform provides comprehensive MLOps capabilities, from data preparation through model deployment and monitoring.

Pricing for Azure's AI instances follows a premium model similar to that of AWS. ND96asr_A100_v4 instances cost approximately $27.20 per hour, making them slightly more affordable than comparable AWS offerings but still significantly more expensive than Oracle's aggressively priced equivalents.

Google Cloud Platform's Technical Innovation

Google Cloud Platform leverages its deep expertise in AI research to offer unique capabilities through its Tensor Processing Units (TPUs). TPU v4 instances provide specialized acceleration for TensorFlow workloads, with each TPU v4 pod containing 4,096 TPU v4 chips delivering 1.1 exaflops of peak performance.
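
As a rough sanity check on the pod figures, dividing the quoted peak by the chip count gives the implied per-chip throughput. This is simple arithmetic on the numbers above, not a benchmark, and the variable names are illustrative.

```python
POD_PEAK_FLOPS = 1.1e18   # 1.1 exaflops, as quoted for a full TPU v4 pod
CHIPS_PER_POD = 4096

# Implied peak throughput of a single chip, in teraflops.
per_chip_tflops = POD_PEAK_FLOPS / CHIPS_PER_POD / 1e12
print(f"implied peak per chip: {per_chip_tflops:.1f} TFLOPS")
```

The result, a little under 270 TFLOPS per chip, is a peak figure; sustained throughput on real models will be lower.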

GCP's strength lies in its native support for TensorFlow and JAX frameworks, which many research organizations prefer for cutting-edge AI development. The platform offers both TPU and GPU options, with N1 and A2 instances providing NVIDIA T4, V100, and A100 GPUs for more traditional CUDA-based workloads.

The Vertex AI platform integrates training, deployment, and management capabilities with pre-built algorithms and AutoML options. Google's approach emphasizes ease of use for data scientists and researchers, with managed notebooks, automated hyperparameter tuning, and built-in experiment tracking.

However, GCP's market share remains smaller than that of AWS or Azure, which can limit ecosystem support and third-party integrations. TPU pricing starts at $1.35 per hour for TPU v2 instances, making it cost-competitive for TensorFlow workloads but less suitable for organizations committed to PyTorch or other frameworks.

The Meta-Oracle Deal: Strategic Implications

Meta's choice of Oracle reflects several strategic considerations beyond pure cost savings. The Facebook parent company has been developing custom AI chips, including the MTIA (Meta Training and Inference Accelerator), which is optimized for recommendation algorithms and inference workloads. Oracle's flexibility in supporting custom silicon deployments likely played a crucial role in the decision.

The deal structure allows Meta to commission AI clusters with different architectures than standard NVIDIA-based configurations. This flexibility enables Meta to integrate its internally-developed silicon alongside NVIDIA GPUs, potentially reducing dependence on any single chip vendor while optimizing performance for specific workloads.

Oracle's track record with large-scale deployments also factors into the decision. The company recently inked an agreement to build 4.5 gigawatts worth of data center capacity for OpenAI, valued at $300 billion over five years. This demonstrates Oracle's ability to execute on massive infrastructure projects and maintain long-term customer relationships.

Performance Benchmarks and Real-World Comparison

When evaluating AI cloud providers, performance metrics extend beyond raw GPU specifications to include networking, storage, and orchestration capabilities. Oracle's cluster networking achieves 200 Gbps per GPU through optimized RoCE implementations, compared with 400 Gbps total per 8-GPU node (50 Gbps per GPU) on AWS P4d instances. Azure's ND A100 v4 instances provide a dedicated 200 Gbps InfiniBand link per GPU, which puts them level with Oracle on this particular metric.
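
Normalizing node-level bandwidth to a per-GPU figure is what makes these numbers comparable. The sketch below shows that conversion, plus an idealized estimate of how long a fixed-size transfer takes at line rate. The helper names and the 10 GB payload are illustrative assumptions, and real transfers will be slower because of protocol overhead and congestion.

```python
def per_gpu_gbps(node_gbps: float, gpus_per_node: int) -> float:
    """Split a node's total network bandwidth evenly across its GPUs."""
    if gpus_per_node <= 0:
        raise ValueError("gpus_per_node must be positive")
    return node_gbps / gpus_per_node


def transfer_seconds(gigabytes: float, gbps: float) -> float:
    """Idealized time to move `gigabytes` of data at `gbps` (gigabits/s)."""
    if gbps <= 0:
        raise ValueError("gbps must be positive")
    return gigabytes * 8 / gbps


# AWS P4d figure from the text: 400 Gbps shared by 8 GPUs.
p4d_per_gpu = per_gpu_gbps(400, 8)
print(f"P4d per-GPU bandwidth: {p4d_per_gpu:.0f} Gbps")

# Hypothetical 10 GB gradient exchange at 50 Gbps vs 200 Gbps per GPU.
for rate in (p4d_per_gpu, 200.0):
    print(f"{rate:>5.0f} Gbps -> {transfer_seconds(10, rate):.2f} s")
```

At line rate, the same payload takes four times as long at 50 Gbps as at 200 Gbps, which is why per-GPU bandwidth matters more than the headline node figure for communication-heavy training.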

Storage performance significantly impacts training efficiency for data-intensive workloads. Oracle provides high-performance NVMe SSD storage with up to 2.4 million IOPS per instance, while AWS EBS gp3 volumes top out at 16,000 IOPS without custom configurations. For models requiring frequent checkpoint saves or large dataset streaming, these differences translate to meaningful training time reductions.

Real-world benchmarks from large language model training reveal the cumulative impact of these optimizations. Training a GPT-3 scale model (175 billion parameters) on Oracle's infrastructure reportedly completes 15-20% faster than on equivalent AWS configurations, primarily due to reduced network bottlenecks and better GPU utilization.

Cost Analysis: Beyond Hourly Rates

While hourly instance pricing provides a baseline comparison, total cost of ownership for large-scale AI workloads includes data transfer, storage, and management overhead. Oracle's pricing model includes unlimited data egress within the same region, eliminating the data transfer charges that can add 15-20% to AWS bills for multi-petabyte datasets.

Network egress pricing represents a significant hidden cost for AI workloads. AWS charges $0.09 per GB for data transfer out to the internet, while Oracle provides the first 10TB free monthly with subsequent usage at $0.0085 per GB. For Meta's scale of operations, transferring trained model weights and datasets between regions could result in millions of dollars in additional AWS charges.
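
The gap between the two egress rates can be illustrated with a small cost model. This is a sketch under stated assumptions: it uses the per-GB rates and 10TB free tier quoted above, treats the AWS rate as flat (real pricing is tiered and falls at higher volumes), and uses a hypothetical 1 PB monthly transfer. `egress_cost` is an illustrative helper, not a provider API.

```python
GB_PER_TB = 1_000  # decimal units, assumed for billing purposes


def egress_cost(gb: float, price_per_gb: float, free_gb: float = 0.0) -> float:
    """Monthly egress bill: usage above the free allowance times a flat rate."""
    billable_gb = max(0.0, gb - free_gb)
    return billable_gb * price_per_gb


monthly_gb = 1_000 * GB_PER_TB  # hypothetical 1 PB transferred out per month

aws_cost = egress_cost(monthly_gb, 0.09)
oci_cost = egress_cost(monthly_gb, 0.0085, free_gb=10 * GB_PER_TB)

print(f"AWS (flat $0.09/GB):         ${aws_cost:,.0f}")
print(f"Oracle (10TB free, $0.0085): ${oci_cost:,.0f}")
```

Under these assumptions, 1 PB of monthly egress costs about $90,000 at the AWS rate and about $8,400 at the Oracle rate, a difference of roughly an order of magnitude.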

Reserved capacity pricing offers additional savings opportunities. Oracle's annual commit discounts can reach 60-70% off on-demand pricing for multi-year agreements, compared to AWS reserved instances that typically provide 30-60% savings. Given Meta's $20 billion commitment, these volume discounts likely represent substantial cost advantages over smaller-scale deployments.

Technical Architecture Considerations

The choice of cloud provider increasingly depends on specific AI workload requirements rather than general-purpose compute capabilities. Large language model pre-training demands high inter-GPU bandwidth and low-latency networking, favoring Oracle's optimized cluster configurations. Conversely, inference workloads benefit from AWS's global edge network and diverse instance types for cost optimization.

Custom silicon integration represents another differentiator. Oracle's bare metal approach provides direct hardware access necessary for integrating custom ASICs or FPGAs alongside standard GPUs. AWS's Nitro system, while efficient, introduces virtualization overhead that can impact custom hardware integration and real-time performance requirements.

Memory bandwidth and capacity also vary significantly across providers. Oracle's GPU instances typically provide higher memory bandwidth through optimized NUMA configurations and direct memory access patterns. This advantage becomes critical for memory-intensive workloads like large-scale graph neural networks or computer vision models processing high-resolution imagery.

Ecosystem and Vendor Lock-in Risks

The evolution of programming languages and development tools continues to influence cloud provider selection, as new frameworks and optimization techniques require flexible infrastructure support. Oracle's commitment to supporting diverse software stacks reduces vendor lock-in risks compared to providers pushing proprietary AI services and frameworks.

Integration with existing development workflows affects long-term platform viability. AWS's extensive marketplace and third-party integrations provide advantages for organizations using diverse toolchains, while Oracle's focus on core infrastructure may require additional integration work for complex enterprise environments.

Data sovereignty and compliance requirements increasingly influence provider selection. Oracle's approach to data residency and government cloud offerings may address regulatory concerns that multinational companies like Meta face when operating across different jurisdictions.

Future Infrastructure Trends

The Meta-Oracle deal signals broader shifts in AI infrastructure requirements. As models scale beyond current capabilities, specialized networking and storage architectures become more critical than general-purpose cloud features. Oracle's investment in purpose-built AI infrastructure positions it to capture additional large-scale customers seeking alternatives to traditional hyperscale providers.

Quantum computing integration represents an emerging consideration for forward-looking AI infrastructure decisions. While still experimental, quantum-classical hybrid algorithms may require specialized hardware integration capabilities that favor flexible, bare-metal cloud approaches over highly abstracted virtualized environments.

Edge computing requirements for AI inference will likely influence future provider selection. Oracle's distributed data center strategy and focus on low-latency networking may provide advantages as organizations deploy AI applications requiring real-time responses across global user bases.

The competition for large-scale AI infrastructure contracts will intensify as models continue scaling and new applications emerge. Oracle's success with Meta demonstrates that specialized focus and aggressive pricing can compete effectively against established cloud giants, potentially reshaping the entire cloud infrastructure landscape for AI workloads.

This $20 billion deal represents more than a simple procurement decision - it validates Oracle's strategy of targeting specific high-value workloads rather than competing broadly across all cloud computing segments. For organizations evaluating AI infrastructure providers, the Meta-Oracle partnership demonstrates the importance of matching specific technical requirements with provider capabilities rather than defaulting to market leaders.