Apple Metal 4 Brings Native AI to Mac Graphics Revolution

Apple's unveiling of Metal 4 at WWDC 2025 represents more than just another API update. It marks a fundamental shift in how developers can harness artificial intelligence on Mac platforms, bringing AI computation directly into the graphics pipeline without requiring cloud connectivity or external dependencies. This advancement positions Apple's development ecosystem at the forefront of local AI processing, challenging the industry's assumption that sophisticated AI requires cloud infrastructure.

The implications extend far beyond graphics rendering. Metal 4's native AI integration enables developers to build applications that process machine learning workloads locally, offering improved privacy, reduced latency, and enhanced performance for AI-driven features. This technological leap comes at a time when the industry is grappling with the computational demands of AI applications and the growing need for edge computing solutions.

Understanding Metal 4: Building on a Decade of Graphics Innovation

Metal 4 is the fourth major iteration of Apple's low-level graphics and compute API, which debuted on iOS in 2014 as a lower-overhead alternative to OpenGL ES and has since replaced OpenGL across Apple's platforms. Unlike higher-level graphics frameworks, Metal gives developers direct access to GPU hardware, enabling fine-grained control over graphics rendering and computational tasks.

The API has powered multiple generations of demanding applications, from AAA games like Cyberpunk 2077 to professional creative software used in film production and architectural visualization. Metal's evolution has consistently focused on extracting maximum performance from Apple's silicon while maintaining developer accessibility.

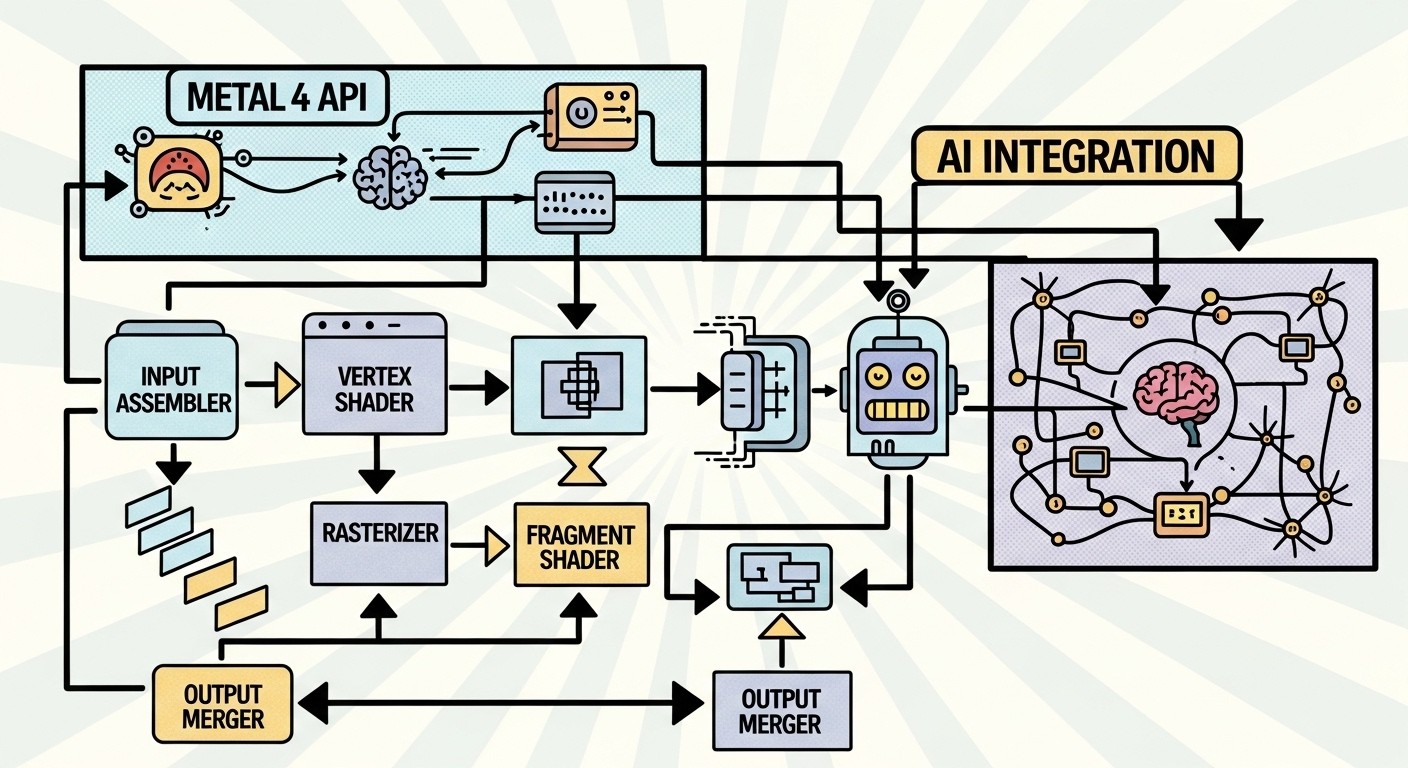

What distinguishes Metal 4 from its predecessors is its explicit design for AI workloads. Previous versions could handle machine learning tasks through general compute shaders, but Metal 4 integrates AI as a first-class citizen in the graphics pipeline. This integration allows developers to seamlessly blend traditional graphics operations with AI computations, opening new possibilities for real-time visual effects, intelligent content creation, and adaptive user interfaces.

The API supports devices equipped with Apple M1 processors and later, as well as A14 Bionic chips and newer generations. This compatibility ensures that developers can target a substantial installed base while taking advantage of Apple's unified memory architecture, which allows CPU and GPU to share the same memory pool without costly data transfers.

The AI Integration: Native Machine Learning Without Compromise

Metal 4's AI capabilities stem from tight integration with the GPU compute units in Apple silicon. Unlike traditional approaches that treat AI as a separate computational pipeline, Metal 4 allows developers to embed machine learning operations directly within graphics rendering passes. This architectural decision reduces the overhead typically associated with switching between graphics and compute work.

The API provides specialized data types and operations optimized for common AI workflows. Developers can perform tensor operations, matrix multiplications, and neural network inference using the same command buffers and resource management systems they use for graphics. This unified approach simplifies development while ensuring optimal hardware utilization.
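To make the kind of work involved concrete, here is a minimal sketch in plain Python (not Metal code) of the arithmetic behind one dense neural-network layer: a matrix-vector multiply, a bias add, and a ReLU. The function names and the sample weights are assumptions made up for illustration; in a Metal 4 application this arithmetic would run on the GPU rather than in a Python loop.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(weights: Matrix, x: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    if any(len(row) != len(x) for row in weights):
        raise ValueError("each weight row must match the input length")
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def dense_relu(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """One dense layer: ReLU(W @ x + b)."""
    pre_activation = [y + b for y, b in zip(matvec(weights, x), bias)]
    return [max(0.0, y) for y in pre_activation]


# Illustrative 2x3 weights and bias; these numbers are made up for the example.
W = [[1.0, 0.0, -1.0],
     [0.5, 0.5, 0.5]]
b = [0.0, -1.0]
print(dense_relu(W, b, [2.0, 1.0, 3.0]))
```

The first output is clamped to zero by the ReLU because its pre-activation value is negative; the second passes through unchanged.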

One significant advantage is that AI features no longer depend on the cloud. Applications can perform complex image recognition, natural language processing, and real-time video analysis entirely on-device. This capability is particularly valuable for applications handling sensitive data, operating in bandwidth-constrained environments, or requiring very low response times.

The integration extends to popular machine learning frameworks. Metal 4 works most directly with Core ML, Apple's machine learning framework, while models from other ecosystems such as TensorFlow Lite and ONNX generally need to pass through a conversion pipeline first. This compatibility lets developers reuse existing model architectures without significant modification.

Performance optimizations include automatic batching of AI operations, intelligent memory management for large models, and hardware-specific optimizations for different Apple silicon variants. Metal itself targets the GPU; when a model is run through Core ML instead, that framework can distribute work across the CPU, GPU, and Neural Engine based on the requirements of each operation.

Why Metal 4 Exists: Market Forces and Strategic Positioning

The development of Metal 4 responds to several converging market trends that have fundamentally altered the software development landscape. The exponential growth in AI applications has created unprecedented demand for computational resources, with many developers struggling to balance performance requirements against cost and privacy constraints.

Traditional cloud-based AI solutions, while powerful, introduce latency that can be prohibitive for real-time applications. Video editing software that applies AI-powered effects, augmented reality applications that perform object recognition, and creative tools that generate content in response to user input all require response times measured in milliseconds rather than seconds.
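The latency argument comes down to simple arithmetic, sketched below in Python. The timings used (5 ms of inference, a 50 ms network round trip) are illustrative assumptions, not measurements, and the function names are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_in_frame(inference_ms: float, round_trip_ms: float, fps: float) -> bool:
    """True if inference plus any network round trip fits within one frame."""
    return inference_ms + round_trip_ms <= frame_budget_ms(fps)


# Assumed, illustrative timings: 5 ms of inference, 50 ms network round trip.
print(round(frame_budget_ms(60), 2))   # per-frame budget at 60 fps
print(fits_in_frame(5.0, 0.0, 60))     # on-device: no network hop
print(fits_in_frame(5.0, 50.0, 60))    # cloud: round trip added on top
```

At 60 frames per second the budget is under 17 ms, so under these assumptions a network round trip alone would exceed it before any inference is done.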

Privacy concerns have also driven demand for local AI processing. High-profile data breaches and increasing regulatory scrutiny have made both developers and users wary of sending sensitive information to remote servers. Metal 4 addresses this concern by enabling sophisticated AI features that never leave the user's device.

The competitive landscape has intensified pressure on Apple to differentiate its development platform. NVIDIA's CUDA ecosystem has long dominated AI development, particularly in professional and research contexts. Google's TensorFlow and Meta's PyTorch have established themselves as the de facto standards for machine learning frameworks. By integrating AI directly into Metal, Apple creates a compelling alternative that leverages the unique advantages of its hardware ecosystem.

Apple's unified memory architecture provides a significant advantage for AI workloads. Unlike traditional computer architectures that require costly data transfers between CPU and GPU memory, Apple silicon allows both processing units to access the same memory pool directly. Metal 4 exploits this architecture to minimize memory overhead and maximize throughput for AI operations.

The timing of Metal 4's release coincides with the maturation of Apple's silicon strategy. The transition from Intel processors to Apple-designed chips has given the company unprecedented control over hardware-software optimization. Metal 4 represents the culmination of this integration, providing developers with tools specifically designed for Apple's silicon architecture.

How Developers Can Leverage Metal 4

Getting started with Metal 4 requires understanding its new command structure and resource management model. The API makes memory management more explicit, changing how resources are allocated and tracked compared with previous versions. Developers familiar with Metal 3 will need to adapt to these changes, though the core concepts remain consistent.

The new MTL4CommandAllocator provides direct control over command buffer memory, allowing developers to optimize for their specific use cases. This level of control is particularly valuable for AI applications that may have irregular memory access patterns or require precise timing control.

Resource management in Metal 4 centers around the new MTL4ArgumentTable type, which stores binding points for the resources applications need. This system is designed to handle the significantly larger number of buffers and textures that modern applications, particularly those with AI components, typically require.
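The idea of a table of binding points can be illustrated with a small conceptual model. The Python class below is a hypothetical stand-in, not the Metal type: `ToyArgumentTable`, its methods, and the addresses are all invented for illustration. It only shows the pattern of binding resources to numbered slots that shaders later read by index.

```python
from typing import Dict


class ToyArgumentTable:
    """Conceptual stand-in for a table of binding slots (not the Metal type)."""

    def __init__(self, max_buffer_bindings: int) -> None:
        if max_buffer_bindings <= 0:
            raise ValueError("table needs at least one slot")
        self.max_buffer_bindings = max_buffer_bindings
        self._slots: Dict[int, int] = {}  # slot index -> resource address

    def set_address(self, address: int, index: int) -> None:
        """Bind a resource address to a slot, replacing any previous binding."""
        if not 0 <= index < self.max_buffer_bindings:
            raise IndexError(f"slot {index} is outside the table")
        self._slots[index] = address

    def address_at(self, index: int) -> int:
        """Look up what a shader reading this slot would see."""
        return self._slots[index]


table = ToyArgumentTable(max_buffer_bindings=4)
table.set_address(0x1000, index=0)   # e.g. vertex data (made-up address)
table.set_address(0x2000, index=1)   # e.g. model weights (made-up address)
table.set_address(0x3000, index=1)   # rebinding a slot replaces the old address
print(hex(table.address_at(1)))
```

Sizing the table up front and updating individual slots is what lets one table serve many draws or dispatches without rebinding everything each time.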

For AI-specific functionality, developers can use the integrated machine learning operations alongside traditional graphics commands. A typical workflow might involve loading a pre-trained model, configuring input tensors with image data, executing inference operations, and using the results to influence rendering parameters or generate visual effects.
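The workflow described above can be sketched end to end in plain Python. Everything here is an assumption for illustration: the "model" is a two-parameter logistic function with made-up weights, the "image" is a short list of 8-bit luminance values, and the rendering parameter is a hypothetical exposure gain. None of it is Metal API.

```python
import math
from typing import Dict, List


def load_model() -> Dict[str, float]:
    """Stand-in for loading a pre-trained model; the weights are made up."""
    return {"weight": -10.0, "bias": 4.0}


def make_input_tensor(pixels: List[int]) -> List[float]:
    """Normalize 8-bit luminance values to the range [0, 1]."""
    return [p / 255.0 for p in pixels]


def infer_darkness(model: Dict[str, float], tensor: List[float]) -> float:
    """Logistic score in (0, 1); higher means the image looks darker."""
    mean = sum(tensor) / len(tensor)
    return 1.0 / (1.0 + math.exp(-(model["weight"] * mean + model["bias"])))


def exposure_gain(darkness: float) -> float:
    """Map the model output to a rendering parameter between 1x and 2x."""
    return 1.0 + darkness


model = load_model()
samples = {"dark": [10, 20, 30, 40], "bright": [200, 220, 240, 250]}
for name, pixels in samples.items():
    score = infer_darkness(model, make_input_tensor(pixels))
    print(name, round(exposure_gain(score), 2))
```

The dark sample receives a gain close to 2x and the bright sample a gain close to 1x, which is the sense in which an inference result steers a rendering parameter.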

The API supports several common AI use cases out of the box. Image classification operations can be embedded directly in rendering pipelines, allowing applications to adjust visual parameters based on content analysis. Style transfer operations can be applied in real-time to video streams. Natural language processing models can analyze text inputs and generate appropriate visual responses.

Shader compilation has been significantly improved in Metal 4, with dedicated compilation contexts that provide explicit control over when compilation occurs. This improvement is crucial for AI applications that may dynamically load different models or require just-in-time compilation of custom operations.

The flexible render pipeline states in Metal 4 allow applications to build common shader code once and specialize it for different use cases. This capability is particularly valuable for AI applications that may need to handle various input formats or model architectures within a single application.
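The "build once, specialize many times" pattern can be modeled in a few lines of Python. This is a conceptual sketch, not Metal code: `compile_common_code` stands in for an expensive shared compilation step, and the class, its method, and the scale parameter are hypothetical names chosen for the example. The pixel-format strings are used only as labels.

```python
from typing import Callable, Dict, Tuple

compile_count = 0  # counts how often the expensive shared step runs


def compile_common_code() -> Callable[[float, float], float]:
    """Stand-in for the expensive, shared compilation step."""
    global compile_count
    compile_count += 1
    return lambda value, scale: value * scale


class SpecializedPipelines:
    """Builds shared code once, then derives cheap per-variant pipelines."""

    def __init__(self) -> None:
        self._common = compile_common_code()
        self._variants: Dict[Tuple[str, float], Callable[[float], float]] = {}

    def pipeline(self, pixel_format: str, scale: float) -> Callable[[float], float]:
        """Return the cached variant for this key, creating it on first use."""
        key = (pixel_format, scale)
        if key not in self._variants:
            common = self._common
            self._variants[key] = lambda value: common(value, scale)
        return self._variants[key]


pipelines = SpecializedPipelines()
half = pipelines.pipeline("rgba16Float", 0.5)
double = pipelines.pipeline("bgra8Unorm", 2.0)
print(half(8.0), double(8.0), compile_count)
```

Two variants are produced, but the shared step runs only once, and that is the saving the feature is aiming for.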

Industry Implications and Competitive Response

Metal 4's AI integration positions Apple to compete directly with NVIDIA's dominance in AI development. While NVIDIA's CUDA ecosystem remains the gold standard for training large AI models, Metal 4 targets the growing market for AI inference and edge computing applications.

The local processing capabilities enabled by Metal 4 could accelerate the adoption of AI features in consumer applications. Developers who previously avoided AI integration due to cloud infrastructure costs or privacy concerns may now find it feasible to include sophisticated machine learning capabilities in their applications.

This shift toward local AI processing aligns with broader industry trends. Edge computing has gained momentum as organizations seek to reduce bandwidth usage, improve response times, and enhance data security. Metal 4 provides the tools necessary to build applications that can perform complex AI operations at the edge without sacrificing performance or user experience.

The impact on autonomous AI systems could be particularly significant. Applications that rely on AI agents to make decisions or take actions can benefit from the reduced latency and improved reliability that local processing provides. This capability is crucial for applications in industries like healthcare, finance, and manufacturing where real-time decision-making is essential.

Creative industries stand to benefit substantially from Metal 4's capabilities. Video editing applications can apply AI-powered effects in real-time, graphic design tools can generate content based on user input, and music production software can analyze and enhance audio using machine learning algorithms. The integration of AI directly into the graphics pipeline enables these applications to provide sophisticated features without the complexity of managing separate AI infrastructure.

The educational sector may also see significant adoption, as Metal 4 makes it easier for students and researchers to experiment with AI applications without requiring expensive cloud computing resources. This accessibility could accelerate AI literacy and innovation in academic settings.

Technical Challenges and Limitations

Despite its advantages, Metal 4 faces several technical challenges that developers must navigate. The most significant limitation is hardware compatibility. Metal 4's AI features require Apple silicon, limiting the potential audience for applications that rely heavily on these capabilities. Developers targeting broader audiences may need to maintain separate code paths or fall back to cloud-based processing for older hardware.
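Maintaining separate code paths usually starts with a capability check. The Python sketch below is a simplified illustration that uses the hardware floor stated earlier in this article (M1 or later, A14 or later). Parsing chip names is an assumption made to keep the example self-contained; a real application would query the device's capabilities through Metal instead. The function names are hypothetical.

```python
import re


def supports_metal4(chip: str) -> bool:
    """True for M1-or-later and A14-or-later chip names, per the article."""
    match = re.fullmatch(r"([AM])(\d+)(?: .*)?", chip.strip())
    if match is None:
        return False  # unknown or non-Apple-silicon hardware
    family, generation = match.group(1), int(match.group(2))
    minimum = {"M": 1, "A": 14}[family]
    return generation >= minimum


def choose_ai_path(chip: str) -> str:
    """Pick the on-device path when available, otherwise the fallback."""
    return "on-device" if supports_metal4(chip) else "fallback"


for chip in ["M1", "M3 Max", "A14 Bionic", "A13 Bionic", "Intel Core i7"]:
    print(chip, "->", choose_ai_path(chip))
```

Keeping the decision in one function means the rest of the application only has to handle two cases, regardless of how the check itself is implemented.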

Memory management becomes more complex with AI workloads. Machine learning models, particularly large language models or high-resolution image processing networks, can consume substantial amounts of memory. Developers must carefully balance model complexity against available system resources, especially on devices with limited RAM.
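A back-of-the-envelope estimate shows why precision matters for this balance. The Python sketch below computes the memory needed for a model's weights alone; the 7-billion-parameter model and the 6 GB budget are hypothetical figures chosen for illustration, and activations and other runtime buffers are deliberately left out.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, budget_gb: float) -> bool:
    """True if the weights fit in the memory the app is willing to spend."""
    return weight_footprint_gb(num_params, precision) <= budget_gb


# Hypothetical 7-billion-parameter model and a 6 GB budget on an 8 GB device.
for precision in ("fp16", "int8", "int4"):
    size = weight_footprint_gb(7e9, precision)
    print(precision, size, fits(7e9, precision, budget_gb=6.0))
```

Under these assumptions only the 4-bit variant fits, and because the estimate ignores activations it is a lower bound on the real footprint.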

The placement sparse resources feature in Metal 4 provides fine-grained control over memory allocation, but it also requires developers to understand the memory characteristics of their AI models. Incorrect memory management can lead to performance degradation or application crashes, particularly when switching between different models or processing varying input sizes.

Debugging AI operations within the graphics pipeline presents unique challenges. Traditional graphics debugging tools may not provide adequate visibility into AI computations, requiring developers to use specialized profiling and analysis tools. Apple has enhanced its debugging infrastructure to support Metal 4's AI features, but the learning curve remains steep for developers new to AI development.

Performance optimization requires understanding both graphics and AI optimization techniques. Developers must balance traditional graphics performance considerations like bandwidth utilization and cache efficiency with AI-specific concerns like numerical precision and model parallelization. This dual expertise requirement may slow adoption among developers primarily focused on graphics or AI individually.

Model compatibility and conversion can present obstacles. Although models from frameworks such as Core ML and TensorFlow Lite can be brought to Metal 4, some require conversion, modification, or optimization to perform well. Developers may need to experiment with different model architectures or precision levels to find the best balance between accuracy and performance for their specific applications.
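The accuracy side of the precision trade-off can be seen in a small experiment. The Python sketch below applies symmetric 8-bit quantization to a handful of made-up weights and measures the round-trip error. It illustrates one common scheme under simple assumptions and is not a description of how any particular conversion tool works; the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric int8 quantization: map [-max_abs, max_abs] to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    return [round(v / scale) for v in values], scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the int8 codes."""
    return [q * scale for q in quantized]


weights = [0.6, -1.0, 0.25, 0.9]  # made-up example weights
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("scale:", scale)
print("max round-trip error:", max_error)
```

Each weight lands within half a quantization step of its original value, so the error is bounded by the scale. Whether that error is acceptable depends on the model and has to be checked against accuracy on real data.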

Future Outlook and Evolution

Metal 4 represents the beginning rather than the culmination of Apple's AI integration strategy. Future iterations will likely expand AI capabilities based on developer feedback and advances in Apple's silicon design. The company has historically followed a pattern of introducing foundational capabilities and then building upon them with increasingly sophisticated features.

The integration between Metal and Apple's other development frameworks will likely deepen over time. Closer collaboration with Core ML, Create ML, and other Apple machine learning tools could simplify the development process and enable more sophisticated applications. SwiftUI integration might allow developers to declaratively specify AI-powered user interface elements without directly managing Metal resources.

Hardware evolution will continue to drive software capabilities. Apple's next-generation silicon will likely include enhanced AI processing units, larger unified memory pools, and improved interconnects between processing elements. Metal 4's architecture is designed to scale with these hardware improvements, ensuring that applications can take advantage of new capabilities without requiring major code changes.

The developer ecosystem around Metal 4 will play a crucial role in its success. Third-party tools, libraries, and educational resources will determine how quickly developers can adopt the new capabilities. Apple's developer relations efforts, including documentation, sample code, and technical sessions, will influence the pace of adoption.

Industry collaboration may accelerate Metal 4's evolution. As other technology companies develop their own local AI processing solutions, standardization efforts might emerge to ensure compatibility between different platforms. Apple's participation in these efforts could influence Metal 4's future direction.

The success of Metal 4 will ultimately depend on the applications developers build with it. Revolutionary applications that showcase the unique capabilities of local AI processing could drive widespread adoption and influence the broader industry's approach to AI development. Conversely, if developers struggle to realize Metal 4's potential or encounter significant technical barriers, adoption may remain limited to specialized use cases.

Metal 4's AI integration represents a significant step toward a future where sophisticated artificial intelligence is seamlessly integrated into everyday applications. By providing developers with the tools to build AI-powered experiences that run entirely on local hardware, Apple is challenging the industry's assumptions about how AI should be deployed and consumed. The success of this approach will depend on both technical execution and developer adoption, but the potential impact on the software development landscape is substantial.