Distributed Database Wars: 2025's Top Solutions Compared

The distributed database landscape has reached a tipping point in 2025. Traditional monolithic databases are crumbling under the weight of cloud-scale applications, forcing businesses to embrace distributed architectures that can handle massive data volumes across multiple regions while maintaining strong consistency guarantees. This isn't just about handling more data – it's about building systems that can survive datacenter failures, comply with global data regulations, and deliver millisecond response times to users anywhere in the world.

The stakes couldn't be higher. Netflix has reported its members streaming more than 1 billion hours of content per week, while financial institutions need to maintain ACID compliance across continents for regulatory requirements. The wrong database choice can mean the difference between seamless scaling and catastrophic failure when traffic spikes hit.

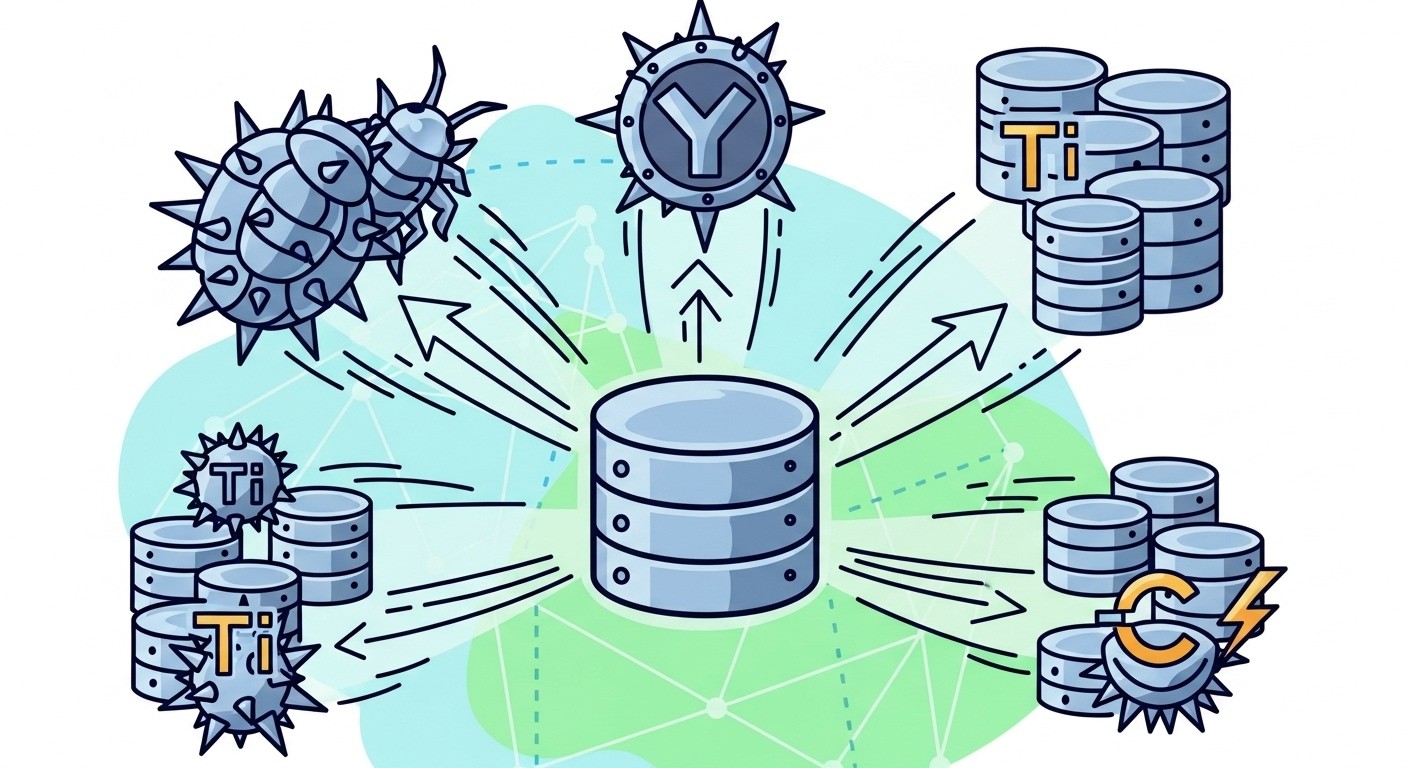

This comprehensive analysis examines the four dominant distributed database solutions that will define 2025: CockroachDB's distributed SQL approach, YugabyteDB's PostgreSQL-compatible scaling, TiDB's hybrid transactional and analytical processing, and Apache Cassandra's battle-tested wide-column architecture. We'll dive deep into performance benchmarks, real deployment scenarios, and the specific technical trade-offs that matter when your application needs to scale beyond a single machine.

The Distributed Database Revolution

The shift toward distributed databases isn't just a trend – it's a fundamental architectural requirement for modern applications. Traditional databases like Oracle and MySQL were designed when applications ran on single servers with predictable workloads. Today's applications face entirely different challenges: elastic scaling, global distribution, and the need to process both transactional and analytical workloads simultaneously.

Distributed databases solve these problems by spreading data across multiple nodes, typically using techniques like sharding, replication, and consensus algorithms to maintain consistency. However, each solution makes different trade-offs under the CAP theorem: network partitions cannot be ruled out, so when one occurs a system must sacrifice either consistency or availability, and architects have to choose their battles carefully.

The complexity of these systems means that choosing the wrong distributed database can lock your organization into years of technical debt. A database that performs well with 10,000 users might collapse at 1 million users, or one that works perfectly in a single region might introduce unacceptable latency when deployed globally.

CockroachDB: Distributed SQL for the Cloud Era

CockroachDB has emerged as the flagship distributed SQL database, designed from the ground up for cloud-native deployments. Unlike traditional databases that were retrofitted for distributed scenarios, CockroachDB's architecture assumes failure and network partitions as normal operating conditions.

The database uses a distributed consensus algorithm based on Raft to ensure strong consistency across all nodes. This means that when you write data to a CockroachDB cluster, you can be certain that all subsequent reads will see that data, regardless of which node handles the request. This level of consistency is crucial for financial applications, e-commerce platforms, and any system where data integrity cannot be compromised.
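The majority rule behind Raft-style replication can be sketched in a few lines of Python. This is a minimal illustration of the quorum arithmetic only, not CockroachDB's implementation, and the function names are hypothetical.

```python
def majority(replicas: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """Replicas that can be lost while a majority can still be formed."""
    return replicas - majority(replicas)


def is_committed(acks: int, replicas: int) -> bool:
    """A write counts as committed once a majority (leader included) has acknowledged it."""
    return acks >= majority(replicas)


for n in (3, 5, 7):
    print(f"replicas={n} majority={majority(n)} tolerated_failures={tolerated_failures(n)}")
```

With three replicas a write needs two acknowledgements and the group survives one failure; five replicas survive two. This is why replication factors are usually odd: a fourth replica raises the majority to three without tolerating any additional failure.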

CockroachDB's automatic sharding capability sets it apart from solutions that require manual database partitioning. The system automatically splits data into ranges and distributes them across nodes based on access patterns and data volume. When a range grows beyond a size threshold, CockroachDB automatically splits it and rebalances the resulting ranges across nodes without requiring any application changes or downtime.
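The idea of threshold-based range splitting can be illustrated with a toy key map. This is a sketch under simplifying assumptions: the `RangeMap` class and `MAX_KEYS_PER_RANGE` are hypothetical, the threshold counts keys rather than bytes, and no rebalancing across nodes is modeled.

```python
import bisect

MAX_KEYS_PER_RANGE = 4  # hypothetical threshold; real systems split on data size


class RangeMap:
    """Ordered key space divided into contiguous ranges that split when they grow too large."""

    def __init__(self):
        self.ranges = [[]]  # each range is a sorted list of keys; ranges are ordered by key

    def _find(self, key):
        # Start keys of every range except the first; the first range has no lower bound.
        starts = [r[0] for r in self.ranges[1:]]
        return bisect.bisect_right(starts, key)

    def insert(self, key):
        i = self._find(key)
        current = self.ranges[i]
        bisect.insort(current, key)
        if len(current) > MAX_KEYS_PER_RANGE:
            mid = len(current) // 2
            # Replace the oversized range with its two halves.
            self.ranges[i:i + 1] = [current[:mid], current[mid:]]


rm = RangeMap()
for k in range(1, 11):
    rm.insert(k)
print(rm.ranges)
```

The caller only ever calls `insert`; splits happen as a side effect, which mirrors the point that applications do not need to change as data grows.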

The geo-partitioning feature allows organizations to store data close to their users while maintaining global consistency. For example, a multinational e-commerce platform can ensure that European customer data stays within EU borders for GDPR compliance while still allowing global inventory management across all regions. This is achieved through replica placement rules that pin each partition's replicas to nodes in specific regions, while transactions that span partitions keep the same consistency guarantees.
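A residency rule of this kind boils down to constraining where a partition's replicas may live. The sketch below shows that check in Python; the region names, node names, and helper functions are all hypothetical, and it does not use any CockroachDB API.

```python
# Hypothetical mapping from a partition value to the regions allowed
# to hold its replicas. Region names are illustrative only.
ALLOWED_REGIONS = {
    "eu": {"eu-west", "eu-central"},
    "us": {"us-east", "us-west"},
}


def placement_is_compliant(partition: str, replica_regions: list[str]) -> bool:
    """True when every replica of the partition sits in an allowed region."""
    return set(replica_regions) <= ALLOWED_REGIONS[partition]


def choose_replicas(partition: str, nodes: dict[str, str], count: int) -> list[str]:
    """Pick `count` nodes (name -> region) whose region is allowed for the partition."""
    eligible = sorted(
        name for name, region in nodes.items()
        if region in ALLOWED_REGIONS[partition]
    )
    if len(eligible) < count:
        raise ValueError("not enough nodes in the allowed regions")
    return eligible[:count]


nodes = {"n1": "eu-west", "n2": "eu-central", "n3": "us-east", "n4": "eu-west"}
print(choose_replicas("eu", nodes, 3))
print(placement_is_compliant("eu", ["eu-west", "us-east"]))
```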

Performance benchmarks show CockroachDB handling over 1 million transactions per second across a 100-node cluster, with read latencies averaging under 10 milliseconds and write latencies under 50 milliseconds. These numbers make it suitable for demanding applications like real-time trading platforms and high-frequency IoT data ingestion.

The SQL compatibility means existing applications can migrate to CockroachDB with minimal code changes. The database supports most PostgreSQL features, including complex joins, transactions, and stored procedures. This compatibility extends to popular ORMs like Django, Rails ActiveRecord, and Hibernate, reducing migration complexity significantly.

YugabyteDB: PostgreSQL Compatibility at Scale

YugabyteDB takes a different approach by building distributed capabilities on top of proven PostgreSQL foundations. This strategy provides the best of both worlds: the familiar PostgreSQL API that millions of developers know, combined with distributed scaling that can handle massive workloads.

The architecture splits into two layers: a query layer that exposes a PostgreSQL-compatible API (YSQL) and a Cassandra-inspired API (YCQL), and a distributed document store (DocDB) underneath. This dual-API approach allows applications to choose between SQL and NoSQL interfaces depending on their needs, all while using the same underlying distributed storage engine.

YugabyteDB's consensus replication uses a modified Raft algorithm that can maintain strong consistency across multiple availability zones and regions. The system automatically handles leader election and failover, ensuring that database operations continue seamlessly even when entire datacenters become unavailable. This resilience has made it popular with financial institutions and government agencies that cannot tolerate any data loss or extended downtime.

One of YugabyteDB's standout features is its native vector search capabilities, making it particularly relevant for AI applications in 2025. Unlike traditional databases that require separate vector databases for similarity search, YugabyteDB can store and query high-dimensional vector embeddings alongside relational data. This unified approach simplifies architectures for recommendation engines, semantic search applications, and retrieval-augmented generation systems.
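What "vector embeddings alongside relational data" buys you is a single query that filters on ordinary columns and ranks by similarity. The pure-Python sketch below shows that combination on an in-memory list; the product rows, field names, and `similar_products` function are made up for illustration and do not reflect YugabyteDB's query syntax or indexing.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical rows: relational attributes plus an embedding column.
products = [
    {"id": 1, "category": "shoes", "in_stock": True, "embedding": [1.0, 0.0]},
    {"id": 2, "category": "shoes", "in_stock": True, "embedding": [0.8, 0.6]},
    {"id": 3, "category": "hats", "in_stock": True, "embedding": [1.0, 0.0]},
    {"id": 4, "category": "shoes", "in_stock": False, "embedding": [1.0, 0.0]},
]


def similar_products(query, category, k):
    """Apply the relational filter first, then rank the survivors by similarity."""
    candidates = [p for p in products if p["category"] == category and p["in_stock"]]
    ranked = sorted(candidates, key=lambda p: cosine(query, p["embedding"]), reverse=True)
    return [p["id"] for p in ranked[:k]]


print(similar_products([0.6, 0.8], "shoes", 2))
```

A production system would replace the full scan with an approximate nearest-neighbor index, but the shape of the query (filter, then rank by distance) stays the same.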

The database supports horizontal read scaling through read replicas that can be deployed in different regions. Write operations go to the primary cluster, but read queries can be served from local replicas, reducing latency for global applications. This approach works particularly well for applications with read-heavy workloads, such as content management systems and analytics dashboards.
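The routing rule described here is simple enough to sketch directly. The region names and the `route` function below are hypothetical; the sketch only shows the decision, not a real driver or connection pool.

```python
PRIMARY_REGION = "us-east"                       # hypothetical primary cluster location
READ_REPLICA_REGIONS = {"eu-west", "ap-south"}   # hypothetical read replica locations


def route(operation: str, client_region: str) -> str:
    """Return the region that should serve the request."""
    if operation == "write":
        # All writes go to the primary cluster.
        return PRIMARY_REGION
    if operation == "read":
        # Serve reads locally when a replica exists, otherwise fall back to the primary.
        if client_region in READ_REPLICA_REGIONS:
            return client_region
        return PRIMARY_REGION
    raise ValueError(f"unknown operation: {operation}")


print(route("read", "eu-west"))
print(route("write", "eu-west"))
```

One design consequence worth keeping in mind: replicas of this kind are typically updated asynchronously, so a local read may lag slightly behind the primary. Reads that must observe the latest write should be routed to the primary as well.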

Performance testing shows YugabyteDB achieving 90% of single-node PostgreSQL performance while scaling horizontally. In distributed deployments, the system can handle over 2 million queries per second across a 50-node cluster, with the ability to add more nodes linearly to increase throughput.

TiDB: Hybrid Transactional and Analytical Processing

TiDB addresses one of the most challenging problems in database architecture: supporting both online transactional processing (OLTP) and online analytical processing (OLAP) workloads on the same data without compromising performance on either side.

The system uses a unique architecture that separates storage from compute, allowing it to scale each layer independently. The storage layer (TiKV) is a distributed key-value store that handles data persistence and replication, while the compute layer (TiDB servers) processes SQL queries and can be scaled horizontally based on workload demands.

TiDB's columnar storage engine (TiFlash) automatically creates column-oriented replicas of transactional data, enabling fast analytical queries without impacting operational workloads. This hybrid approach eliminates the need for complex ETL pipelines that traditionally move data from transactional systems to analytical data warehouses.
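The difference between the row-oriented copy and a column-oriented replica can be shown with plain Python structures. This is a conceptual sketch only; the order rows and the `to_columns` helper are hypothetical and say nothing about how TiFlash stores or synchronizes data.

```python
# Row-oriented layout: convenient for reading or updating one whole order.
rows = [
    {"order_id": 1, "region": "eu", "amount_cents": 3000},
    {"order_id": 2, "region": "us", "amount_cents": 4500},
    {"order_id": 3, "region": "eu", "amount_cents": 1250},
]


def to_columns(rows):
    """Build a column-oriented copy: one list per column, aligned by position."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)

# An aggregate touches only the columns it needs instead of every full row.
total_eu = sum(
    amount
    for region, amount in zip(columns["region"], columns["amount_cents"])
    if region == "eu"
)
print(total_eu)
```

Point lookups and updates favor the row layout, while scans and aggregates favor the column layout, which is why keeping both copies lets each workload use the representation that suits it.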

Real-world deployments show TiDB handling mixed workloads effectively. A major e-commerce platform uses TiDB to process real-time order transactions while simultaneously running complex analytical queries for fraud detection and recommendation systems. The same database cluster handles both 50,000 transactions per second and complex join operations across billions of rows without performance degradation.

The auto-scaling capabilities in TiDB Cloud can dynamically adjust resources based on workload patterns. During peak shopping periods, the system automatically provisions additional compute nodes to handle increased transaction volume, then scales down during quieter periods to reduce costs. This elasticity is particularly valuable for businesses with highly variable workloads.

TiDB's MySQL compatibility is extensive: it speaks the MySQL wire protocol and supports most MySQL syntax. Applications using MySQL can often migrate to TiDB with minimal changes, while gaining distributed scaling and analytical processing capabilities that would require significant additional infrastructure with traditional MySQL deployments.

Apache Cassandra: Battle-Tested Wide-Column Architecture

Apache Cassandra represents the mature end of the distributed database spectrum, with over a decade of production deployments at massive scale. Companies like Netflix, Apple, and Instagram rely on Cassandra to handle petabytes of data across thousands of nodes, proving its reliability under extreme conditions.

Cassandra's wide-column data model differs significantly from traditional SQL databases. Tables are still declared with a schema in CQL, but rows are grouped into partitions and stored sparsely, so a row only takes space for the columns it actually sets, and new columns can be added without rewriting existing data. This flexibility makes it ideal for time-series data, IoT telemetry, and applications with evolving data requirements.

The database uses consistent hashing for data distribution, so that data spreads evenly across all nodes in the cluster as long as partition keys are well distributed. This approach reduces the hotspots that can plague other distributed systems and keeps performance predictable as the cluster grows. Adding or removing nodes moves only the affected token ranges and does not require application downtime.
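A consistent hash ring with virtual nodes can be sketched as follows. This is an illustrative implementation, not Cassandra's: the `HashRing` class is hypothetical, MD5 stands in for the partitioner's hash function, and replication is left out.

```python
import bisect
import hashlib


def _token(value: str) -> int:
    """Map a string to a position on the ring (MD5 is used here only for illustration)."""
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, nodes, vnodes=64):
        self.vnodes = vnodes
        self._ring = []  # sorted list of (token, node) pairs
        for node in nodes:
            self.add(node)

    def add(self, node):
        # Each node owns many small slices of the ring via virtual nodes.
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_token(f"{node}#{i}"), node))

    def remove(self, node):
        self._ring = [(t, n) for t, n in self._ring if n != node]

    def owner(self, key):
        # The owner is the first token at or after the key's token, wrapping around.
        i = bisect.bisect_left(self._ring, (_token(key), ""))
        return self._ring[i % len(self._ring)][1]


ring = HashRing(["node-a", "node-b", "node-c"])
keys = [f"user:{i}" for i in range(1000)]
before = {k: ring.owner(k) for k in keys}
ring.add("node-d")
after = {k: ring.owner(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys moved after adding a node")
```

The property to notice is that every key that changes owner moves to the new node; keys never shuffle between the existing nodes, which is what keeps rebalancing cheap.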

Cassandra's default consistency model prioritizes availability and partition tolerance over strong consistency. This trade-off makes it exceptionally resilient to network failures and datacenter outages, but requires applications to handle scenarios where different nodes might return slightly different data until synchronization completes. Consistency is tunable per request: reads and writes at QUORUM trade some latency and availability for stronger guarantees.
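Whether a read is guaranteed to see the latest acknowledged write comes down to whether the read and write replica sets must overlap. With replication factor N, W write acknowledgements, and R replicas read, overlap is guaranteed when R + W > N. The helper names below are hypothetical.

```python
def quorum(replication_factor: int) -> int:
    """Majority of the replicas for one partition."""
    return replication_factor // 2 + 1


def read_overlaps_write(replication_factor: int, write_acks: int, read_replicas: int) -> bool:
    """True when any read set must share at least one replica with any write set."""
    return write_acks + read_replicas > replication_factor


rf = 3
print(read_overlaps_write(rf, quorum(rf), quorum(rf)))  # quorum writes, quorum reads
print(read_overlaps_write(rf, 1, 1))                    # one ack, one replica read
print(read_overlaps_write(rf, rf, 1))                   # all replicas ack, one replica read
```

Quorum reads combined with quorum writes satisfy the inequality, as does writing to all replicas and reading from one. Writing and reading at a single replica does not, which is the situation where stale reads can occur.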

Performance characteristics vary significantly based on deployment configuration. A well-tuned Cassandra cluster can handle over 1 million writes per second with sub-millisecond latency, making it ideal for high-velocity data ingestion scenarios like real-time analytics, sensor networks, and social media platforms.

The wide-column model excels at time-series workloads where data is primarily accessed by time ranges. Cassandra can efficiently store and query years of historical data while maintaining fast insertion rates for new data points. This capability has made it the go-to choice for monitoring systems, financial tick data, and IoT platforms that generate continuous data streams.
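A common way to model time-series data in a wide-column store is to bucket readings into partitions by source and day, and keep rows inside each partition ordered by timestamp. The sketch below imitates that layout with a dictionary; the `write` and `read_range` functions and the daily bucket size are assumptions for illustration, not Cassandra code.

```python
import bisect
from collections import defaultdict
from datetime import datetime, timedelta

# (sensor_id, day) plays the role of the partition key;
# the timestamp orders rows inside a partition.
partitions = defaultdict(list)


def write(sensor_id, ts, value):
    """Insert a reading into its daily partition, keeping the partition sorted by time."""
    bisect.insort(partitions[(sensor_id, ts.date())], (ts, value))


def read_range(sensor_id, start, end):
    """Return readings with start <= ts < end, visiting only the daily partitions involved."""
    out = []
    day = start.date()
    while day <= end.date():
        rows = partitions.get((sensor_id, day), [])
        lo = bisect.bisect_left(rows, (start,))
        hi = bisect.bisect_left(rows, (end,))
        out.extend(rows[lo:hi])
        day += timedelta(days=1)
    return out


write("s1", datetime(2025, 1, 1, 23, 30), 20.5)
write("s1", datetime(2025, 1, 2, 0, 15), 20.1)
write("s1", datetime(2025, 1, 2, 12, 0), 22.4)
write("s2", datetime(2025, 1, 2, 0, 15), 99.0)

result = read_range("s1", datetime(2025, 1, 1, 23, 0), datetime(2025, 1, 2, 1, 0))
print(result)
```

Bucketing by day keeps any single partition from growing without bound, and a time-range query only has to touch the partitions for the days it covers.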

Performance and Scalability Comparison

The following table summarizes the key performance characteristics and capabilities of each distributed database solution based on real-world benchmarks and documented deployments:

| Feature | CockroachDB | YugabyteDB | TiDB | Apache Cassandra |
|---|---|---|---|---|
| Max Throughput | 1M+ TPS (100 nodes) | 2M+ QPS (50 nodes) | 1.5M+ TPS (mixed) | 1M+ writes/sec |
| Read Latency | <10ms (P95) | <5ms (P95) | <8ms OLTP, <100ms OLAP | <1ms (P95) |
| Write Latency | <50ms (P95) | <15ms (P95) | <20ms (P95) | <1ms (P95) |
| Consistency Model | Strong (serializable) | Strong (linearizable) | Strong (snapshot isolation) | Eventual |
| SQL Compatibility | PostgreSQL-like | Full PostgreSQL | Full MySQL | CQL (SQL-like) |
| Horizontal Scaling | Automatic sharding | Manual + auto | Automatic | Automatic |
| Multi-Region | Native geo-partitioning | Cross-region replicas | Global deployment | Multi-DC replication |
| Storage Model | LSM-tree | LSM-tree | Row + columnar | LSM-tree |
| Backup/Recovery | Point-in-time recovery | PITR + geo-redundancy | Full/incremental | Snapshot + incremental |

The performance numbers reveal distinct strengths for different use cases. CockroachDB excels at maintaining strong consistency while scaling, making it ideal for financial applications that cannot tolerate any data inconsistency. YugabyteDB offers the highest query throughput with PostgreSQL compatibility, making it attractive for migrating existing applications. TiDB provides the unique combination of transactional and analytical processing, eliminating the need for separate systems. Cassandra delivers the lowest latencies for write-heavy workloads but requires applications to handle eventual consistency.

Real-World Use Cases and Decision Factors

Choosing the right distributed database depends heavily on specific application requirements, team expertise, and operational constraints. The following scenarios illustrate when each solution provides the best fit:

CockroachDB shines in scenarios requiring global distribution with strong consistency. A multinational bank uses CockroachDB to process cross-border transactions while maintaining regulatory compliance in each jurisdiction. The geo-partitioning ensures that customer data stays within required boundaries while still enabling real-time fraud detection across the entire network. The automatic failover capabilities mean that regional network issues don't impact global operations.

Financial trading platforms represent another ideal CockroachDB use case. The strong consistency guarantees ensure that order books remain accurate across all trading regions, while the low-latency writes support high-frequency trading algorithms. The SQL compatibility allows existing risk management systems to query trading data without modification.

YugabyteDB excels for organizations migrating from PostgreSQL who need distributed scaling without architectural changes. A major SaaS platform migrated from a sharded PostgreSQL deployment to YugabyteDB, eliminating the complex application-level sharding logic while improving both performance and reliability. The PostgreSQL compatibility meant zero application changes, while the distributed architecture eliminated the single points of failure that plagued their previous setup.

The vector search capabilities make YugabyteDB particularly compelling for AI applications. Modern AI architectures benefit from storing vector embeddings alongside relational data, enabling complex queries that combine traditional filters with similarity searches. An e-commerce platform uses this capability to power product recommendations that consider both customer preferences (stored relationally) and product similarity (computed from vector embeddings).

TiDB fits organizations that need real-time analytics alongside transactional processing. A ride-sharing company uses TiDB to process ride requests and payments while simultaneously running analytical queries for dynamic pricing, driver allocation, and fraud detection. The hybrid OLTP/OLAP architecture eliminates the delays and complexity of traditional ETL pipelines.

Gaming companies represent another strong TiDB use case. Player actions generate constant transactional updates while game analytics require complex aggregations across historical data. TiDB handles both workloads on the same cluster, enabling real-time game balancing and player matching based on live gameplay data.

Apache Cassandra remains the top choice for high-velocity data ingestion with relaxed consistency requirements. Netflix uses Cassandra to store viewing history, user preferences, and recommendation data across multiple regions. The eventual consistency model works well for these use cases because temporary inconsistencies don't significantly impact user experience, while the write performance supports millions of simultaneous streaming sessions.

IoT platforms heavily favor Cassandra for sensor data collection. The wide-column model efficiently stores time-series data from millions of devices, while the linear scaling means that growing the device fleet calls for more nodes rather than architectural changes. The ability to handle node failures gracefully is crucial for IoT deployments that span unreliable network connections.

Cost and Operational Considerations

The total cost of ownership varies significantly between distributed database solutions, encompassing not just licensing fees but also operational complexity, infrastructure requirements, and staffing needs.

CockroachDB offers both open-source and enterprise editions, with the enterprise version adding features like geo-partitioning, backup encryption, and priority support. Cloud deployments through CockroachDB Cloud eliminate operational overhead but come with premium pricing that can reach $1000+ per month for production clusters. The automatic operations reduce staffing requirements, but the specialized nature of distributed SQL means finding experienced administrators can be challenging.

YugabyteDB provides similar open-source and enterprise tiers, with the enterprise version adding features like read replicas, encryption at rest, and advanced monitoring. The PostgreSQL compatibility reduces training costs since existing PostgreSQL administrators can manage YugabyteDB clusters with minimal learning curve. Cloud pricing starts around $500 per month for small clusters but scales significantly for high-throughput deployments.

TiDB offers compelling economics for mixed workloads since it eliminates the need for separate OLTP and OLAP systems. Organizations typically save 30-50% on total infrastructure costs by consolidating transactional databases and data warehouses into a single TiDB cluster. The managed TiDB Cloud service provides predictable pricing with automatic scaling that adjusts costs based on actual usage patterns.

Apache Cassandra has the lowest licensing costs since it's fully open source, but operational complexity can drive up staffing expenses. Managing large Cassandra clusters requires specialized expertise, particularly for tuning, monitoring, and troubleshooting performance issues. Cloud managed services like Amazon Keyspaces and Azure Cosmos DB provide Cassandra-compatible APIs with reduced operational overhead.

The infrastructure requirements also vary considerably. CockroachDB and YugabyteDB benefit from SSD storage and high-memory configurations to support their consensus algorithms efficiently. TiDB requires more storage capacity for its columnar replicas but can use cheaper storage tiers for analytical workloads. Cassandra performs well on commodity hardware but needs careful capacity planning to avoid hotspots.

Making the Right Choice for 2025

The distributed database decision ultimately depends on how you prioritize consistency, performance, operational complexity, and cost.

Choose CockroachDB when strong consistency is non-negotiable and you need global distribution. The automatic operations and SQL compatibility make it ideal for teams that want distributed capabilities without operational complexity. It's the best choice for financial services, regulated industries, and applications where data accuracy is paramount.

Select YugabyteDB when you have existing PostgreSQL expertise and need both traditional database capabilities and modern AI features. The combination of SQL compatibility, vector search, and horizontal scaling makes it perfect for modernizing traditional applications while adding AI capabilities.

Opt for TiDB when you need both transactional and analytical processing on the same data. The hybrid architecture eliminates data pipeline complexity and provides real-time insights that aren't possible with separate systems. It's ideal for applications that need immediate analytics on live transactional data.

Pick Apache Cassandra for high-velocity data ingestion where eventual consistency is acceptable. The proven scalability and write performance make it the right choice for time-series data, IoT platforms, and applications that prioritize availability over consistency.

The distributed database landscape in 2025 offers sophisticated solutions that can handle virtually any scaling requirement. The key is matching your specific needs with each solution's strengths rather than choosing based on popularity or marketing claims. With proper evaluation and implementation, these distributed databases can provide the foundation for applications that scale seamlessly from thousands to millions of users while maintaining the performance and reliability that modern applications demand.