Docker Model Runner Revolutionizes Local AI Development

Docker has fundamentally changed how developers think about AI model deployment with the launch of Model Runner, a feature that brings large language model execution directly into the familiar Docker workflow. Rather than wrestling with Python environments, CUDA installations, and fragmented tooling, developers can now run AI models locally with the same simplicity as launching any container.

This shift represents more than just convenience. As organizations grapple with rising cloud inference costs, data privacy concerns, and the complexity of AI model deployment, Docker Model Runner offers a compelling alternative that keeps computation local while maintaining the performance and reliability developers expect.

The Local AI Development Problem

The current state of AI model development creates significant friction for developers. Running open-weight models like Llama 3.2 locally typically requires managing multiple Python environments, ensuring CUDA drivers work correctly, handling different model formats, and dealing with memory management issues that vary by hardware configuration.

Consider a typical scenario: a developer wants to test their application against a 7B parameter model. They must first install Python 3.11, set up a virtual environment, install PyTorch with CUDA support, download model weights that might be several gigabytes, configure memory settings for their specific GPU, and then hope everything works together. Each model often requires slightly different setup procedures, and switching between models means potentially rebuilding entire environments.

The fragmentation extends beyond setup complexity. Models are distributed through various channels - some via Hugging Face Hub, others through proprietary download tools, and many as loose files with custom authentication requirements. There's no standardized way to version, share, or deploy these models within existing development workflows.

Cloud-based alternatives like OpenAI's API or Anthropic's Claude solve the setup problem but introduce new challenges. API costs can quickly escalate during development and testing phases, especially when experimenting with different prompts or running extensive test suites. More critically, sending potentially sensitive code or proprietary data to external services raises compliance and security concerns that many organizations cannot accept.

How Docker Model Runner Changes Everything

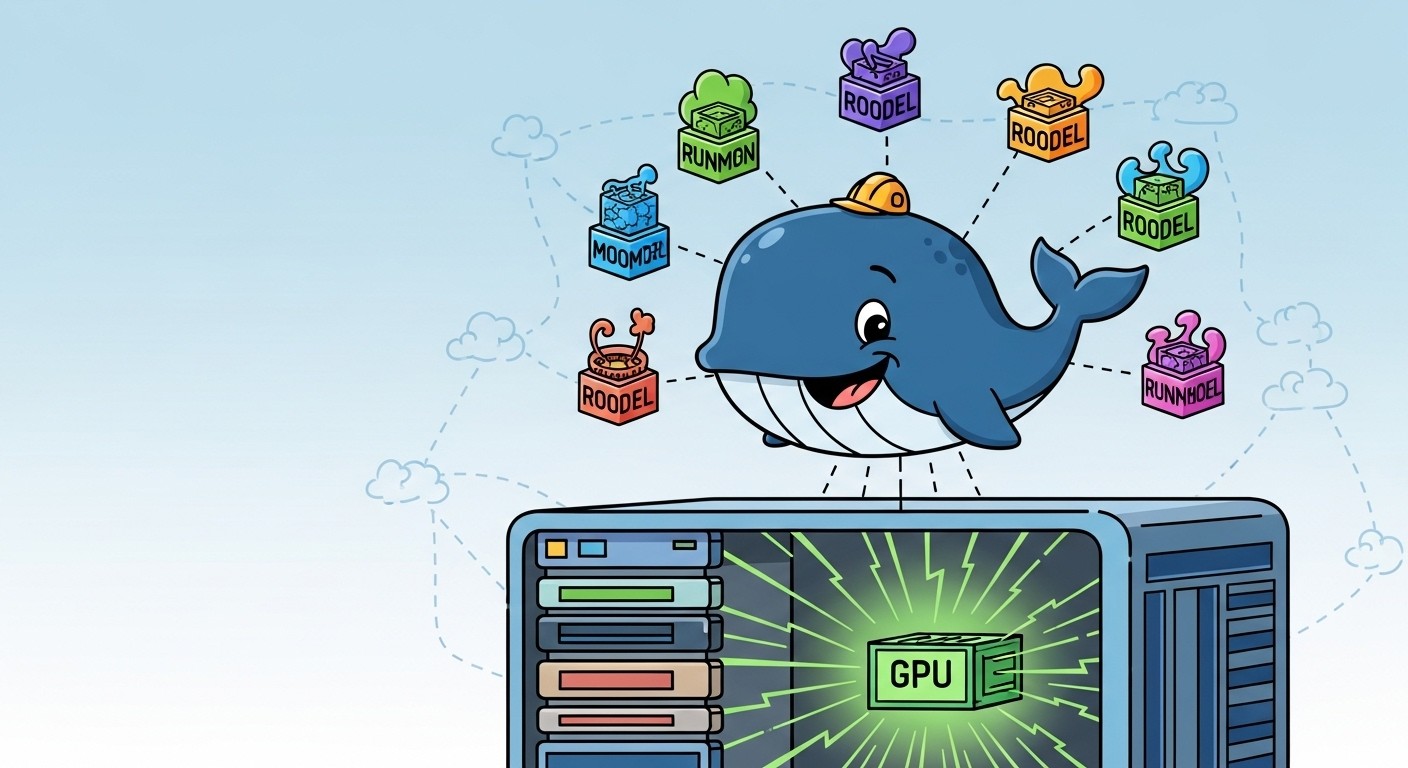

Docker Model Runner treats AI models as first-class citizens within the Docker ecosystem, applying the same principles that made container adoption so successful. Models become OCI artifacts that can be versioned, shared, and deployed using familiar Docker commands and registries.

The architecture is elegantly simple yet powerful. When you run docker model pull ai/llama3.2:1B-Q8_0, Docker downloads the model as an OCI artifact from Docker Hub. The model doesn't run inside a container, however. Instead, Docker Desktop executes llama.cpp directly on the host machine, enabling optimal GPU acceleration without the performance overhead of virtualization.
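The reference passed to docker model pull has the familiar namespace/name:tag shape. As a minimal sketch, the hypothetical helper below splits such a reference into its parts. It assumes the simple shape used in this article, a default tag of "latest" borrowed from container image conventions, and a "size-quantization" tag layout such as 1B-Q8_0. None of this is part of Docker's tooling, and real references may also carry a registry host or digest.

```javascript
// Hypothetical helper: split "ai/llama3.2:1B-Q8_0" into its parts.
// Assumes the simple namespace/name:tag shape used in this article.
function parseModelRef(ref) {
  const match = /^([^\/:]+)\/([^\/:]+)(?::([^\/:]+))?$/.exec(ref);
  if (!match) {
    throw new Error(`Unrecognized model reference: ${ref}`);
  }
  // Assumption: fall back to "latest" when no tag is given.
  const [, namespace, name, tag = "latest"] = match;

  // Assumed tag convention: "<size>-<quantization>", e.g. "1B-Q8_0".
  const dash = tag.indexOf("-");
  const size = dash === -1 ? null : tag.slice(0, dash);
  const quantization = dash === -1 ? null : tag.slice(dash + 1);

  return { namespace, name, tag, size, quantization };
}
```

Calling parseModelRef("ai/llama3.2:1B-Q8_0") yields the namespace ai, the name llama3.2, the size 1B, and the quantization Q8_0, which makes it easy to log or validate which model variant an application was configured with.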

This design choice is crucial for performance. Containers add a layer between the application and the GPU; on macOS in particular, they run inside a Linux virtual machine that has no access to the Apple GPU. Docker Model Runner sidesteps this by treating the inference engine as part of Docker Desktop itself, while still providing the distribution and versioning benefits of containerization for the models themselves.

The integration with existing Docker workflows means developers can include model dependencies in their docker-compose.yml files, manage model versions alongside application code, and deploy AI-powered applications using the same CI/CD pipelines they already understand.

Technical Architecture and Implementation

Docker Model Runner builds on llama.cpp, the highly optimized C++ implementation that has become the de facto standard for local LLM inference. By embedding this directly into Docker Desktop, the system achieves near-native performance while maintaining Docker's signature ease of use.

The model packaging approach leverages OCI artifacts, an open standard that extends container registries to support arbitrary file types. When you pull a model, Docker retrieves the model weights, configuration files, and metadata as a single versioned artifact. This eliminates the common problem of version mismatches between model weights and configuration that plague traditional model distribution methods.

GPU acceleration works differently across platforms, reflecting hardware realities. On Apple Silicon Macs, Docker Model Runner uses the unified memory architecture and Apple's Metal API to accelerate inference. Because execution happens on the host, no virtualization layer sits between the inference engine and the GPU.

For NVIDIA GPUs on Windows and Linux systems, the integration leverages CUDA directly through the host system. Docker Desktop 4.41 and later versions include enhanced Windows GPU support, while Linux implementations can access the full CUDA toolkit installed on the host system.

The API compatibility layer provides an OpenAI-compatible interface, so applications written against the OpenAI API can usually target local models by changing only the base URL and model name. The compatibility extends beyond basic chat completions to streaming responses and, for models that support them, features such as function calling.
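Streaming in OpenAI-compatible APIs is delivered as server-sent events: each event is a line of the form "data: {json}", and the stream ends with "data: [DONE]". The sketch below shows one way to pull the text deltas out of such a stream using only the fetch built into Node.js 18 or later. The function names are illustrative, and the base URL and model are parameters you would supply for your own setup.

```javascript
// Extract text deltas from a block of OpenAI-style server-sent events.
// Each event line looks like `data: {...json...}`; `data: [DONE]` ends the stream.
function extractDeltas(sseText) {
  const deltas = [];
  for (const line of sseText.split("\n")) {
    const trimmed = line.trim();
    if (!trimmed.startsWith("data:")) continue; // skip blank lines and comments
    const payload = trimmed.slice("data:".length).trim();
    if (payload === "[DONE]") break;
    const event = JSON.parse(payload);
    const content = event.choices?.[0]?.delta?.content;
    if (content) deltas.push(content);
  }
  return deltas;
}

// Stream a chat completion and print text as it arrives.
// baseUrl and model are assumptions supplied by the caller.
async function streamChat(baseUrl, model, prompt) {
  const res = await fetch(`${baseUrl}/chat/completions`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({
      model,
      stream: true,
      messages: [{ role: "user", content: prompt }],
    }),
  });
  if (!res.ok) throw new Error(`Request failed with HTTP ${res.status}`);

  const decoder = new TextDecoder();
  let buffer = "";
  for await (const chunk of res.body) {
    buffer += decoder.decode(chunk, { stream: true });
    // Only parse complete lines; keep any partial line in the buffer.
    const lastNewline = buffer.lastIndexOf("\n");
    if (lastNewline === -1) continue;
    const complete = buffer.slice(0, lastNewline);
    buffer = buffer.slice(lastNewline + 1);
    for (const delta of extractDeltas(complete)) process.stdout.write(delta);
  }
}
```

Keeping the parsing in a pure function separate from the network call makes the event handling easy to unit test without a running model.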

Setting Up Docker Model Runner

Getting started requires Docker Desktop 4.40 or later. The feature is initially available as a beta, requiring manual activation through Docker Desktop's settings panel. Navigate to Features in development, then Beta, and enable "Docker Model Runner." For command-line management, the feature can be enabled with docker desktop enable model-runner.

The optional TCP configuration (docker desktop enable model-runner --tcp 12434) exposes the inference API on localhost:12434, allowing applications running outside Docker containers to access the models. This flexibility supports various development patterns without forcing everything through Docker networking.
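Once TCP access is enabled, a quick way to confirm the endpoint is reachable is to ask it which models it knows about. The sketch below assumes the /engines/v1 path prefix that Docker's documentation uses for the OpenAI-compatible routes, and an OpenAI-style list response. modelRunnerBaseUrl and listLocalModels are illustrative names, not part of any Docker SDK, so adjust the path if your version differs.

```javascript
// Assumed defaults: TCP access enabled on port 12434, and the
// /engines/v1 prefix for the OpenAI-compatible routes.
function modelRunnerBaseUrl({ host = "localhost", port = 12434 } = {}) {
  return `http://${host}:${port}/engines/v1`;
}

// List the model IDs reported by the local endpoint.
async function listLocalModels(baseUrl = modelRunnerBaseUrl()) {
  const res = await fetch(`${baseUrl}/models`);
  if (!res.ok) throw new Error(`Model Runner returned HTTP ${res.status}`);
  const body = await res.json();
  // Assumes an OpenAI-style list response: { data: [{ id: "..." }, ...] }
  return (body.data ?? []).map((m) => m.id);
}
```

From a process on the host, listLocalModels() uses localhost; from inside a container, passing modelRunnerBaseUrl({ host: "host.docker.internal" }) targets the same TCP port through Docker Desktop's host alias.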

Basic model operations follow Docker's familiar patterns. docker model ls shows currently downloaded models, docker model pull ai/llama3.2:1B-Q8_0 downloads a quantized 1 billion parameter version of Llama 3.2, and docker model run ai/llama3.2:1B-Q8_0 "Explain quantum computing" executes a single prompt.

The interactive mode (docker model run ai/llama3.2:1B-Q8_0 without a prompt) provides a chat interface with commands like /bye to exit, /help for assistance, and /reset to clear conversation history. This interactive approach is particularly valuable during development when testing different prompt patterns or model behaviors.

For production applications, the programmatic API integration matters most. Applications can connect to the local inference endpoint using any OpenAI-compatible client library. A typical Node.js integration looks like:

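    import OpenAI from 'openai';

    // Point the OpenAI client at the local Model Runner endpoint.
    // Docker's documentation lists the OpenAI-compatible routes under /engines/v1.
    const client = new OpenAI({
      baseURL: 'http://localhost:12434/engines/v1',
      apiKey: 'not-needed-for-local' // the SDK requires a value even though no key is needed locally
    });

    const response = await client.chat.completions.create({
      model: 'ai/llama3.2:1B-Q8_0',
      messages: [
        { role: 'user', content: 'Write a Python function to calculate fibonacci numbers' }
      ]
    });

    // Print the generated reply.
    console.log(response.choices[0].message.content);

Real-World Applications and Integration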

Docker Model Runner shines in development workflows where rapid iteration matters. Consider a team building a code review assistant. During development, they need to test different models, adjust prompts, and validate responses across various code patterns. With traditional cloud APIs, each test run incurs costs and latency. Docker Model Runner enables unlimited local testing with immediate feedback.
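The kind of iteration described above can be sketched as a small harness that runs every prompt against every candidate model and collects the replies for comparison. This is a minimal illustration, not a full evaluation framework: the function names are hypothetical, the endpoint is assumed to be OpenAI-compatible, and the base URL and model list are whatever you have pulled locally.

```javascript
// Build every (model, prompt) combination to evaluate.
function buildTestMatrix(models, prompts) {
  return models.flatMap((model) => prompts.map((prompt) => ({ model, prompt })));
}

// Run each case sequentially against a local OpenAI-compatible endpoint.
// Sequential execution avoids loading several models into memory at once.
async function runMatrix(baseUrl, cases) {
  const results = [];
  for (const { model, prompt } of cases) {
    const res = await fetch(`${baseUrl}/chat/completions`, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: prompt }],
      }),
    });
    if (!res.ok) throw new Error(`${model} failed with HTTP ${res.status}`);
    const body = await res.json();
    results.push({ model, prompt, reply: body.choices[0].message.content });
  }
  return results;
}
```

Because nothing is billed per request, the matrix can grow to dozens of prompts and be rerun on every prompt tweak; the practical limit is local inference time.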

The genai-app-demo repository demonstrates a complete application architecture. The Go backend connects to Model Runner's local API, a React frontend provides the user interface, and Docker Compose orchestrates the entire stack. Developers can modify the application, restart services, and test changes without external dependencies or API costs.

Enterprise applications benefit from the data locality guarantees. A financial services company building an AI-powered document analysis tool can process sensitive documents entirely on-premises. The models never leave the local environment, addressing compliance requirements while still leveraging modern AI capabilities.

The integration with Docker Compose enables sophisticated multi-service applications. A typical configuration might include:

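    services:
      app:
        build: .
        ports:
          - "3000:3000"
        environment:
          # Reach the host's Model Runner TCP endpoint from inside the container.
          # Docker's documentation lists the OpenAI-compatible routes under /engines/v1.
          - MODEL_BASE_URL=http://host.docker.internal:12434/engines/v1
          - MODEL_NAME=ai/llama3.2:1B-Q8_0
        depends_on:
          - database
      database:
        image: postgres:15
        environment:
          POSTGRES_DB: myapp
          # The postgres image refuses to start without a password; placeholder value.
          POSTGRES_PASSWORD: example

This approach scales from development through production, with model dependencies clearly defined and versioned alongside application code.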
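Inside the app service, the two environment variables from the Compose file are all the application needs to locate the model. The sketch below shows one way the service might read them and build a chat request. loadModelConfig, buildChatRequest, and ask are hypothetical names, and the fallback values are assumptions for running the same code outside Compose.

```javascript
// Read the endpoint settings that Compose injects into the app service.
// Fallbacks are assumptions for running directly on the host.
function loadModelConfig(env = process.env) {
  return {
    baseUrl: env.MODEL_BASE_URL ?? "http://localhost:12434/engines/v1",
    model: env.MODEL_NAME ?? "ai/llama3.2:1B-Q8_0",
  };
}

// Build a fetch-ready request for a single-turn chat completion.
function buildChatRequest(config, prompt) {
  const base = config.baseUrl.replace(/\/+$/, ""); // tolerate a trailing slash
  return {
    url: `${base}/chat/completions`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({
        model: config.model,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}

// Send the prompt and return the model's reply text.
async function ask(prompt, config = loadModelConfig()) {
  const { url, options } = buildChatRequest(config, prompt);
  const res = await fetch(url, options);
  if (!res.ok) throw new Error(`Model endpoint returned HTTP ${res.status}`);
  const body = await res.json();
  return body.choices[0].message.content;
}
```

Keeping the endpoint and model name in the environment means switching models, or pointing the same image at a hosted OpenAI-compatible endpoint, is a configuration change rather than a code change.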

Competing Approaches and Alternatives

The local AI execution landscape includes several established players, each with distinct trade-offs. Ollama pioneered the "Docker for AI models" concept, providing simple local model execution with a focus on ease of use. LM Studio offers a graphical interface for running models locally, particularly popular among non-technical users exploring AI capabilities.

Cloud-based solutions like OpenAI's API, Anthropic's Claude, and Google's Vertex AI offer different value propositions. They provide access to massive models that would be impractical to run locally, handle scaling automatically, and eliminate hardware requirements. However, they introduce ongoing costs, latency considerations, and data privacy concerns.

Modern AI-first development platforms represent another category, integrating model execution with complete development environments. These platforms often include version control, collaboration features, and deployment automation, but typically require adopting their entire ecosystem.

Docker Model Runner differentiates itself through integration with existing Docker workflows rather than requiring new tools or platforms. For organizations already using Docker for development and deployment, this represents the path of least resistance for adding AI capabilities.

The performance characteristics vary significantly across approaches. Docker Model Runner's host-based execution typically outperforms containerized alternatives by 10-20% in inference speed, while cloud APIs offer access to larger models that deliver better output quality despite higher latency.

Integration with Docker Ecosystem Features

Docker has introduced several complementary features that work alongside Model Runner. The MCP (Model Context Protocol) Toolkit enables secure connections between AI agents and external tools, addressing the challenge of giving models access to real-world systems without compromising security.

Docker Offload allows compute-intensive workloads to scale to cloud resources when local hardware becomes insufficient. This hybrid approach lets developers start with local Model Runner execution during development and seamlessly scale to cloud resources for production workloads requiring larger models or higher throughput.

The Agentic Compose feature orchestrates multi-agent systems using familiar Docker Compose syntax. Teams can define complex AI workflows where different agents handle specialized tasks, all managed through standard Docker tooling.

These integrations create a comprehensive AI development stack. A developer might use Model Runner for rapid local testing, MCP Toolkit to safely connect agents to external APIs, and Docker Offload to handle production scaling, all within a unified Docker-based workflow.

Adoption Challenges and Considerations

Despite its advantages, Docker Model Runner faces several adoption challenges. The feature requires relatively recent hardware for optimal performance, particularly for GPU acceleration. Developers with older machines or systems lacking a supported GPU may not experience the full benefits.

Model size constraints present another limitation. While 1B-7B parameter models run well on typical developer hardware, larger models that might provide better output quality require substantial memory resources. A 13B model typically needs 16GB+ of unified memory, limiting options for developers with standard laptops.

The beta status means the feature set is still evolving. Production adoption may be premature for organizations requiring stable, fully-supported tooling. Documentation and community resources are also developing, making troubleshooting more challenging than with established tools.

Enterprise adoption faces additional hurdles around model governance and compliance. Organizations need clear policies about which models can be used, how they're versioned and updated, and what data they can process. Docker Model Runner provides the technical infrastructure but doesn't address these organizational challenges.

Performance and Resource Management

Benchmark results vary significantly with hardware configuration and model choice. On an M3 Max MacBook Pro with 64GB unified memory, a 7B-parameter model generates approximately 45 tokens per second, suitable for interactive applications but potentially limiting for batch processing scenarios.
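Throughput figures like this are easy to reproduce on your own hardware. The sketch below computes tokens per second from a completion's token count and elapsed time, and shows one way to measure it against a local endpoint. It assumes the response carries an OpenAI-style usage block with completion_tokens. The function names are illustrative, and the measured time includes prompt processing, so the result slightly understates pure generation speed.

```javascript
// Tokens per second from a completion's token count and wall-clock time.
function tokensPerSecond(completionTokens, elapsedMs) {
  if (elapsedMs <= 0) throw new RangeError("elapsedMs must be positive");
  return completionTokens / (elapsedMs / 1000);
}

// Seconds needed to generate a response of a given length at a given rate.
function secondsToGenerate(tokens, rate) {
  if (rate <= 0) throw new RangeError("rate must be positive");
  return tokens / rate;
}

// Measure end-to-end throughput for one non-streaming request.
async function measureThroughput(baseUrl, model, prompt) {
  const started = performance.now();
  const res = await fetch(`${baseUrl}/chat/completions`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model, messages: [{ role: "user", content: prompt }] }),
  });
  if (!res.ok) throw new Error(`Request failed with HTTP ${res.status}`);
  const body = await res.json();
  const elapsedMs = performance.now() - started;
  // Assumes the response includes an OpenAI-style usage block.
  return tokensPerSecond(body.usage.completion_tokens, elapsedMs);
}
```

As a point of reference, 450 tokens generated in 10 seconds works out to 45 tokens per second, and at that rate a 900-token answer takes about 20 seconds, which is comfortable for chat but adds up quickly across a large batch.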

Memory usage scales roughly linearly with model parameters. The 1B parameter models typically consume 2-3GB of RAM, while 7B models require 8-12GB depending on quantization settings. The Q8_0 quantization format provides a good balance between quality and resource usage for most applications.
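The linear relationship can be made concrete with a back-of-the-envelope estimate: the weights occupy roughly the parameter count times the bits stored per weight. The sketch below uses approximate bits-per-weight values for a few GGUF formats. Q8_0, for example, stores blocks of 32 eight-bit weights plus one 16-bit scale, or 8.5 bits per weight. It estimates the weights only and uses nominal parameter counts; the context cache and runtime buffers come on top, which is why observed usage is higher than these numbers.

```javascript
// Approximate bits stored per weight for a few GGUF formats.
// Q8_0: 32 int8 weights + one 16-bit scale per block = (32*8 + 16) / 32 = 8.5
// Q4_0: 32 4-bit weights + one 16-bit scale per block = (32*4 + 16) / 32 = 4.5
const BITS_PER_WEIGHT = { F16: 16, Q8_0: 8.5, Q4_0: 4.5 };

// Estimate the size of the weights alone, in gigabytes (10^9 bytes).
// paramsBillions is the nominal parameter count, e.g. 7 for a 7B model.
function estimateWeightsGB(paramsBillions, format) {
  const bits = BITS_PER_WEIGHT[format];
  if (bits === undefined) {
    throw new Error(`Unknown format: ${format}`);
  }
  return (paramsBillions * bits) / 8;
}
```

Under these assumptions a 7B model comes to about 7.4GB of weights at Q8_0 and about 3.9GB at Q4_0, while a 1B model at Q8_0 is just over 1GB, which lines up with the lower end of the ranges above once runtime overhead is added.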

GPU utilization monitoring shows Docker Model Runner efficiently leverages available hardware acceleration. On Apple Silicon, Activity Monitor displays consistent GPU usage during inference, indicating effective Metal integration. NVIDIA systems show similar CUDA utilization patterns.

Thermal management becomes important during extended usage. Running large models continuously can generate significant heat, potentially throttling performance on laptops. Desktop systems with better cooling generally maintain consistent performance over longer periods.

Future Implications and Industry Impact

Docker Model Runner represents a broader shift toward local-first AI development. As model efficiency improvements continue and hardware capabilities expand, more AI workloads become viable for local execution. This trend reduces cloud dependencies and enables new classes of applications that require real-time, offline, or privacy-sensitive AI processing.

The standardization around OCI artifacts for model distribution could influence how the entire industry approaches AI model packaging and deployment. If Docker's approach gains adoption, we might see model registries become as common as container registries, with similar tooling for versioning, security scanning, and automated deployment.

Comprehensive setup guides and best practices are emerging as the community explores advanced use cases and integration patterns. Early adopters are sharing configurations for complex multi-model workflows, custom model hosting, and enterprise deployment scenarios.

The competitive response from other container platforms and AI tooling vendors will likely accelerate innovation in this space. Kubernetes-based alternatives, cloud provider offerings, and specialized AI infrastructure tools are all adapting to address the local AI execution demand that Docker Model Runner validates.

As organizations balance cost, performance, privacy, and capability requirements, Docker Model Runner provides a viable option for teams seeking local AI execution without sacrificing the developer experience benefits that made Docker successful for traditional application development.