Google Veo 3 Video Generator: Complete API Setup Guide

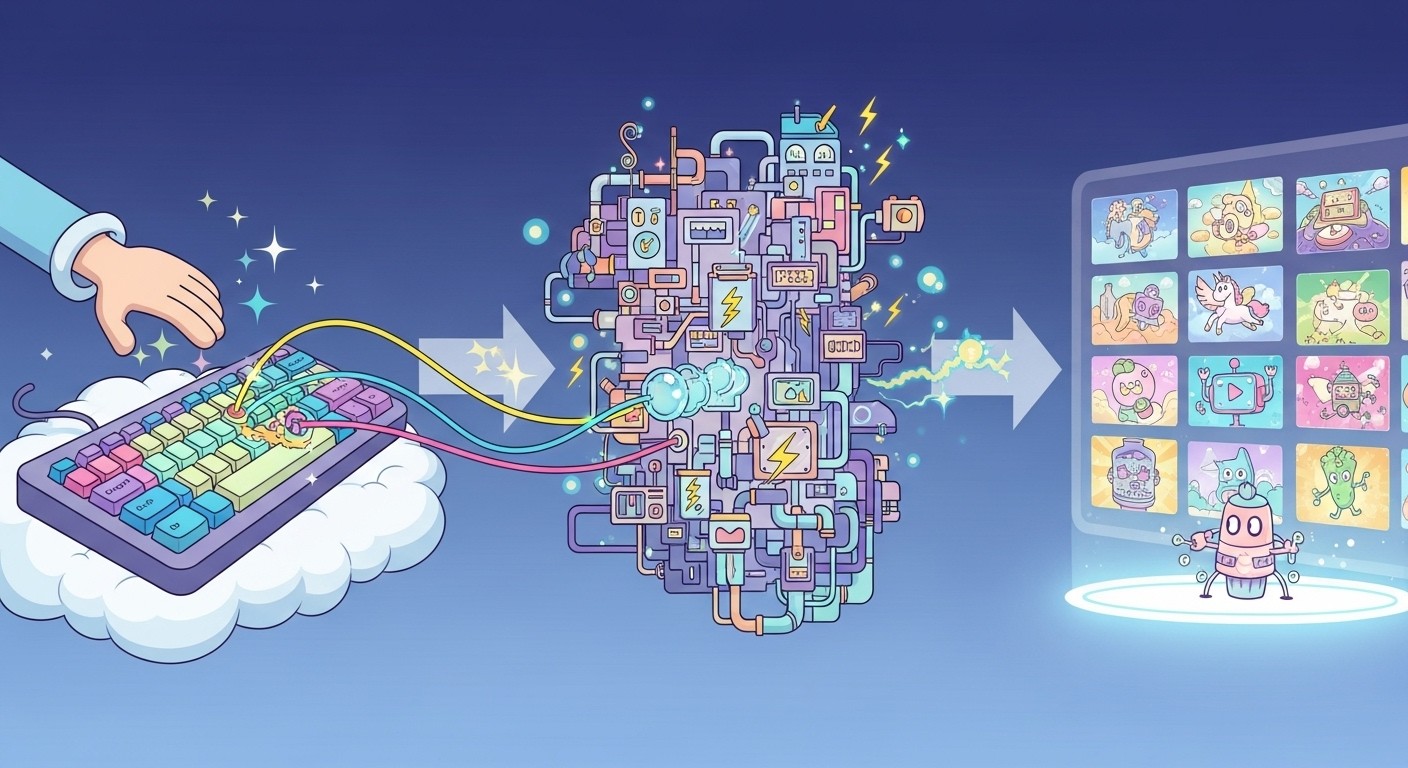

Google's Veo 3 represents a massive leap forward in AI video generation, producing high-quality 8-second clips with integrated audio from simple text prompts. Released through the Gemini API in July 2025, this breakthrough model has already generated tens of millions of videos worldwide and offers developers unprecedented creative capabilities.

This comprehensive guide walks you through setting up Veo 3 in your applications, from initial API configuration to deploying production-ready video generation features. You'll learn practical implementation techniques, explore pricing structures, and discover optimization strategies that can transform how your applications handle dynamic content creation.

Understanding Veo 3's Capabilities

Veo 3 stands apart from previous video generation models through its native audio integration and exceptional visual fidelity. Unlike competitors that generate silent clips requiring separate audio processing, Veo 3 creates complete audiovisual experiences including dialogue, sound effects, and background music directly from text descriptions.

The model excels at cinematic narratives, character animations, and detailed scene composition. During testing, developers report success rates above 85% for complex prompts involving multiple characters, environmental changes, and specific camera movements. The 720p output resolution maintains professional quality suitable for social media, marketing content, and prototype visualization.

Veo 3's training incorporates extensive video datasets spanning different genres, styles, and production techniques. This broad foundation enables the model to understand nuanced creative language like "close-up shot," "golden hour lighting," or "handheld camera movement" and translate these concepts into visually accurate results.

Prerequisites and Account Setup

Before diving into Veo 3 implementation, ensure you have the necessary Google Cloud infrastructure. You'll need a Google Cloud Project with billing enabled, as Veo 3 operates exclusively on Google's Paid Tier pricing model at $0.75 per second of generated content.

Start by visiting the Google AI Studio interface and navigating to the API section. Click the Key button in the top-right corner to select your Google Cloud Project. If you don't see billing options, verify that your project has an active billing account linked through the Google Cloud Console.

Install the required Python dependencies using pip:

    pip install google-genai google-cloud-aiplatform

For Node.js applications, use npm:

    npm install @google/generative-ai @google-cloud/aiplatform

Authentication requires either service account credentials or user authentication through OAuth 2.0. For development purposes, the Google Cloud CLI provides the simplest setup:

    gcloud auth login
    gcloud config set project YOUR_PROJECT_ID

API Configuration and Authentication

Setting up Veo 3 requires proper authentication configuration and API client initialization. Create a new Python file called veo3_setup.py and add the following configuration:

    import os
    import time

    from google import genai
    from google.genai import types

    # Set your Google Cloud project ID
    os.environ['GOOGLE_CLOUD_PROJECT'] = 'your-project-id'

    # Initialize the client
    client = genai.Client()

    # Verify API access
    try:
        models = client.models.list()
        print("API connection successful!")
        print(f"Available models: {len(list(models))}")
    except Exception as e:
        print(f"Authentication failed: {e}")

The client initialization automatically handles authentication when running in environments with Google Cloud credentials. For production deployments, use service account JSON keys:

    import json

    from google import genai
    from google.oauth2 import service_account

    # Load service account credentials
    with open('path/to/service-account.json', 'r') as f:
        credentials_info = json.load(f)

    credentials = service_account.Credentials.from_service_account_info(
        credentials_info,
        scopes=['https://www.googleapis.com/auth/cloud-platform']
    )

    # Note: explicit credentials apply to the Vertex AI backend, which may
    # also need the vertexai=True, project, and location arguments.
    client = genai.Client(credentials=credentials)

Verify that your project can reach the Veo 3 model by submitting a test request:

    # Check model access. Note: this submits a real generation request,
    # which may be billed if it runs to completion.
    try:
        operation = client.models.generate_videos(
            model="veo-3.0-generate-preview",
            prompt="test connection",
            config=types.GenerateVideosConfig()
        )
        print("Veo 3 model accessible!")
    except Exception as e:
        print(f"Model access error: {e}")

Your First Video Generation

Creating your first Veo 3 video involves constructing a detailed prompt and configuring generation parameters. The model responds best to specific, descriptive language that includes visual style, subject matter, and desired mood.

Start with a simple example to understand the basic workflow:

    import time

    from google import genai
    from google.genai import types

    client = genai.Client()

    def generate_basic_video():
        operation = client.models.generate_videos(
            model="veo-3.0-generate-preview",
            prompt="a golden retriever running through a sunflower field at sunset, cinematic shot with warm lighting",
            config=types.GenerateVideosConfig(
                negative_prompt="blurry, low quality, distorted",
            )
        )

        # Wait for generation to complete
        print("Starting video generation...")
        while not operation.done:
            time.sleep(20)
            operation = client.operations.get(operation)
            print("Still generating...")

        # Retrieve the generated video
        generated_video = operation.result.generated_videos[0]

        # Download the video file
        video_data = client.files.download(file=generated_video.video)

        # Save to local file
        with open("my_first_veo_video.mp4", "wb") as f:
            f.write(video_data)

        print("Video saved as my_first_veo_video.mp4")
        return generated_video

    # Generate your first video
    video = generate_basic_video()

The generation process typically takes 2-5 minutes depending on complexity and current API load. Monitor the operation status using the polling mechanism shown above, checking every 20 seconds to avoid excessive API calls.
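The polling loop above has no upper bound on how long it waits. As a minimal sketch, the same pattern can be wrapped in a small helper that takes the "fetch the latest state" and "is it done" steps as callables and gives up after a timeout. The name `poll_until_done` and its parameters are illustrative assumptions, not part of the Google SDK.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def poll_until_done(
    fetch: Callable[[], T],
    is_done: Callable[[T], bool],
    interval_seconds: float = 20.0,
    timeout_seconds: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> T:
    """Call fetch() until is_done(state) is true, or raise TimeoutError.

    sleep and clock are injectable so the loop can be tested without waiting.
    """
    deadline = clock() + timeout_seconds
    state = fetch()
    while not is_done(state):
        if clock() >= deadline:
            raise TimeoutError(
                f"operation not finished after {timeout_seconds} seconds"
            )
        sleep(interval_seconds)
        state = fetch()
    return state
```

With the client from this guide, a call might look like `poll_until_done(lambda: client.operations.get(operation), lambda op: op.done)`, assuming `operation` is the object returned by `generate_videos`.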

Advanced Prompt Engineering

Veo 3's output quality heavily depends on prompt construction and parameter tuning. Effective prompts combine subject description, visual style, camera work, and atmospheric details into coherent instructions that guide the model's creative process.

Structure your prompts using this proven template:

[SHOT TYPE] of [SUBJECT] [ACTION] in [SETTING], [VISUAL STYLE], [LIGHTING], [MOOD/ATMOSPHERE]

For example:

    advanced_prompts = [
        "Medium close-up shot of a chef preparing pasta in a modern kitchen, documentary style, soft natural lighting from window, focused and professional atmosphere",
        "Aerial tracking shot of a red sports car driving along a coastal highway, cinematic color grading, golden hour lighting, exhilarating and free-spirited mood",
        "Wide establishing shot of a futuristic city skyline at night, cyberpunk aesthetic, neon lighting with purple and blue tones, mysterious and technological atmosphere"
    ]

    def generate_advanced_video(prompt_text):
        operation = client.models.generate_videos(
            model="veo-3.0-generate-preview",
            prompt=prompt_text,
            config=types.GenerateVideosConfig(
                negative_prompt="shaky, pixelated, watermark, text overlay, poor lighting",
                duration_seconds=8,  # Maximum duration
                aspect_ratio="16:9"  # Standard widescreen format
            )
        )
        return operation

Negative prompts prove crucial for avoiding common generation issues. Include terms like "blurry," "distorted," "low resolution," "artifacts," and "watermark" to improve output quality. For character-focused videos, add "deformed faces," "extra limbs," or "unnatural proportions" to prevent anatomical errors.
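The template and the negative-prompt advice above can be sketched as two small helpers. This is only an illustration: `ShotSpec`, `build_prompt`, and `build_negative_prompt` are hypothetical names introduced here, and the term lists simply repeat the words suggested in this guide.

```python
from dataclasses import dataclass
from typing import Iterable

# Terms suggested in this guide for general and character-focused videos.
BASE_NEGATIVE_TERMS = ("blurry", "distorted", "low resolution", "artifacts", "watermark")
CHARACTER_NEGATIVE_TERMS = ("deformed faces", "extra limbs", "unnatural proportions")


@dataclass(frozen=True)
class ShotSpec:
    """One field per slot of the prompt template."""
    shot_type: str
    subject: str
    action: str
    setting: str
    visual_style: str
    lighting: str
    mood: str


def build_prompt(spec: ShotSpec) -> str:
    """Fill the template: SHOT TYPE of SUBJECT ACTION in SETTING, STYLE, LIGHTING, MOOD."""
    return (
        f"{spec.shot_type} of {spec.subject} {spec.action} in {spec.setting}, "
        f"{spec.visual_style}, {spec.lighting}, {spec.mood}"
    )


def build_negative_prompt(has_characters: bool = False,
                          extra_terms: Iterable[str] = ()) -> str:
    """Join the suggested negative terms, skipping duplicates."""
    terms = list(BASE_NEGATIVE_TERMS)
    if has_characters:
        terms.extend(CHARACTER_NEGATIVE_TERMS)
    for term in extra_terms:
        if term not in terms:
            terms.append(term)
    return ", ".join(terms)
```

The resulting strings can be passed as `prompt` and `negative_prompt` in the generation call. Keeping the slots as named fields makes it easier to vary one element, such as lighting, while holding the rest of the shot constant.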

Audio-specific prompting requires separate consideration within your main description. Veo 3 interprets audio cues naturally when integrated into scene descriptions:

    audio_enhanced_prompts = [
        "Close-up of waves crashing against rocks with thunderous ocean sounds, storm approaching with distant thunder rumbles, dramatic and powerful",
        "Interior shot of a cozy coffee shop with gentle jazz music playing, customers chatting quietly in background, warm and inviting atmosphere",
        "Action sequence of a motorcycle chase through city streets with engine roaring, tire screeching, and urban ambient sounds, high-energy and intense"
    ]

Handling Generation Errors and Troubleshooting

Video generation can fail for various reasons including prompt violations, quota limits, or technical errors. Implement robust error handling to manage these situations gracefully:

    import time
    import logging

    from google import genai
    from google.genai import types

    logging.basicConfig(level=logging.INFO)
    logger = logging.getLogger(__name__)

    class VideoGenerationError(Exception):
        pass

    def robust_video_generation(prompt, max_retries=3):
        client = genai.Client()

        for attempt in range(max_retries):
            try:
                logger.info(f"Generation attempt {attempt + 1}/{max_retries}")

                operation = client.models.generate_videos(
                    model="veo-3.0-generate-preview",
                    prompt=prompt,
                    config=types.GenerateVideosConfig(
                        negative_prompt="low quality, blurry, distorted"
                    )
                )

                # Monitor with timeout
                timeout_minutes = 10
                start_time = time.time()

                while not operation.done:
                    if time.time() - start_time > timeout_minutes * 60:
                        raise VideoGenerationError("Generation timeout exceeded")
                    time.sleep(30)
                    operation = client.operations.get(operation)
                    logger.info("Generation in progress...")

                # Check for errors in the operation result
                if hasattr(operation, 'error') and operation.error:
                    raise VideoGenerationError(f"Generation failed: {operation.error}")

                generated_video = operation.result.generated_videos[0]
                logger.info("Generation completed successfully!")
                return generated_video

            except Exception as e:
                logger.error(f"Attempt {attempt + 1} failed: {e}")
                if attempt == max_retries - 1:
                    # Chain the last error so the original traceback is kept
                    raise VideoGenerationError(
                        f"All {max_retries} attempts failed. Last error: {e}"
                    ) from e
                # Wait before retry
                time.sleep(60)

        return None

Common error scenarios include:

Content Policy Violations: Veo 3 filters prompts for inappropriate content, violence, or copyrighted material. Revise prompts to focus on original, family-friendly concepts.

Quota Exceeded: The API enforces daily generation limits. Monitor your usage through the Google Cloud Console and implement quota tracking:

    def check_quota_status():
        try:
            # Attempt a minimal operation to check quota.
            # Note: this submits a real generation request, which may be
            # billed if it runs to completion.
            operation = client.models.generate_videos(
                model="veo-3.0-generate-preview",
                prompt="simple test",
                config=types.GenerateVideosConfig()
            )
            return True
        except Exception as e:
            if "quota" in str(e).lower():
                return False
            raise

Model Overload: During peak usage periods, the API may return temporary unavailability errors. Implement exponential backoff:

    import random

    def exponential_backoff_retry(func, max_retries=5):
        for attempt in range(max_retries):
            try:
                return func()
            except Exception as e:
                if attempt == max_retries - 1:
                    raise
                # Double the wait on each attempt and add jitter
                wait_time = (2 ** attempt) + random.uniform(0, 1)
                logger.info(f"Retrying in {wait_time:.2f} seconds...")
                time.sleep(wait_time)

Optimizing Cost and Performance

Veo 3's $0.75 per second pricing structure requires careful optimization for production applications. An 8-second video costs $6.00, making efficiency crucial for applications generating multiple videos daily.
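The arithmetic above is easy to fold into a budgeting helper. The sketch below assumes the $0.75 per second rate and 8-second clip length quoted in this guide; the function names are hypothetical, and the rate should be checked against current pricing before relying on the numbers.

```python
PRICE_PER_SECOND_USD = 0.75  # rate quoted in this guide; verify before use
DEFAULT_CLIP_SECONDS = 8     # clip length quoted in this guide


def estimate_cost(num_videos: int,
                  seconds_per_video: float = DEFAULT_CLIP_SECONDS,
                  price_per_second: float = PRICE_PER_SECOND_USD) -> float:
    """Return the estimated cost in USD for a number of generated clips."""
    if num_videos < 0 or seconds_per_video < 0:
        raise ValueError("num_videos and seconds_per_video must be non-negative")
    return round(num_videos * seconds_per_video * price_per_second, 2)


def videos_within_budget(budget_usd: float,
                         seconds_per_video: float = DEFAULT_CLIP_SECONDS,
                         price_per_second: float = PRICE_PER_SECOND_USD) -> int:
    """Return how many whole clips fit inside a budget."""
    cost_per_video = seconds_per_video * price_per_second
    return int(budget_usd // cost_per_video)


print(estimate_cost(1))           # 6.0
print(estimate_cost(50))          # 300.0
print(videos_within_budget(100))  # 16
```

At these rates, 50 clips a day comes to $300, which is why the caching and batching techniques in this section matter.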

Implement caching mechanisms to avoid regenerating similar content:

    import hashlib
    import os

    class VideoCache:
        def __init__(self, cache_dir="video_cache"):
            self.cache_dir = cache_dir
            os.makedirs(cache_dir, exist_ok=True)

        def get_cache_key(self, prompt, config):
            # Create unique hash from prompt and config
            content = f"{prompt}_{str(config)}"
            return hashlib.md5(content.encode()).hexdigest()

        def is_cached(self, cache_key):
            cache_path = os.path.join(self.cache_dir, f"{cache_key}.mp4")
            return os.path.exists(cache_path)

        def save_video(self, cache_key, video_data):
            cache_path = os.path.join(self.cache_dir, f"{cache_key}.mp4")
            with open(cache_path, "wb") as f:
                f.write(video_data)

        def load_video(self, cache_key):
            cache_path = os.path.join(self.cache_dir, f"{cache_key}.mp4")
            with open(cache_path, "rb") as f:
                return f.read()

    def cached_video_generation(prompt, config=None):
        cache = VideoCache()
        cache_key = cache.get_cache_key(prompt, config)

        if cache.is_cached(cache_key):
            logger.info("Returning cached video")
            return cache.load_video(cache_key)

        # Generate new video. generate_video is assumed to be your own helper
        # that starts the generation, polls until done, and returns the
        # generated video object (see the earlier examples).
        video = generate_video(prompt, config)
        video_data = client.files.download(file=video.video)

        # Cache for future use
        cache.save_video(cache_key, video_data)
        return video_data

For applications requiring multiple video variations, consider batch processing strategies that group similar requests:

    def batch_generate_videos(prompts, batch_size=3):
        results = []

        for i in range(0, len(prompts), batch_size):
            batch = prompts[i:i + batch_size]
            batch_operations = []

            # Start all operations in the batch
            for prompt in batch:
                operation = client.models.generate_videos(
                    model="veo-3.0-generate-preview",
                    prompt=prompt,
                    config=types.GenerateVideosConfig()
                )
                batch_operations.append((prompt, operation))

            # Wait for all to complete
            for prompt, operation in batch_operations:
                while not operation.done:
                    time.sleep(30)
                    operation = client.operations.get(operation)

                results.append({
                    'prompt': prompt,
                    'video': operation.result.generated_videos[0]
                })

            # Rate limiting between batches (skipped after the last batch)
            if i + batch_size < len(prompts):
                time.sleep(60)

        return results

Building Production Applications

Production deployment requires additional considerations around scalability, monitoring, and user experience. Modern AI-powered applications benefit from understanding platform architecture decisions that can impact long-term maintainability and performance.

Structure your production code using async patterns for better resource utilization:

    import asyncio
    from concurrent.futures import ThreadPoolExecutor

    from google import genai
    from google.genai import types

    class ProductionVideoGenerator:
        def __init__(self):
            self.client = genai.Client()
            self.executor = ThreadPoolExecutor(max_workers=5)

        async def generate_video_async(self, prompt, config=None):
            loop = asyncio.get_running_loop()

            # Run blocking generation in thread pool
            operation = await loop.run_in_executor(
                self.executor,
                self._start_generation,
                prompt, config
            )

            # Poll for completion asynchronously
            while not operation.done:
                await asyncio.sleep(30)
                operation = await loop.run_in_executor(
                    self.executor,
                    self.client.operations.get,
                    operation
                )

            return operation.result.generated_videos[0]

        def _start_generation(self, prompt, config):
            return self.client.models.generate_videos(
                model="veo-3.0-generate-preview",
                prompt=prompt,
                config=config or types.GenerateVideosConfig()
            )

    # Usage in FastAPI application
    from fastapi import FastAPI
    from pydantic import BaseModel

    app = FastAPI()
    generator = ProductionVideoGenerator()

    class VideoRequest(BaseModel):
        prompt: str
        negative_prompt: str = ""
        user_id: str

    @app.post("/generate-video")
    async def generate_video_endpoint(request: VideoRequest):
        config = types.GenerateVideosConfig(
            negative_prompt=request.negative_prompt
        )

        try:
            video = await generator.generate_video_async(
                request.prompt,
                config
            )

            # Process video for delivery
            video_url = await upload_to_storage(video)

            return {
                "status": "completed",
                "video_url": video_url,
                "duration": 8,
                "cost": 6.00  # 8 seconds at $0.75 per second
            }
        except Exception as e:
            return {
                "status": "failed",
                "error": str(e)
            }

    async def upload_to_storage(video):
        # Implement your storage solution
        # (Google Cloud Storage, AWS S3, etc.)
        pass

Implement comprehensive monitoring and logging for production systems:

    import logging
    import time

    from datadog import initialize, statsd
    from google import genai
    from google.genai import types

    # Configure monitoring
    initialize(statsd_host='localhost', statsd_port=8125)

    class MonitoredVideoGenerator:
        def __init__(self):
            self.client = genai.Client()
            self.logger = logging.getLogger(__name__)

        def generate_with_monitoring(self, prompt, user_id):
            start_time = time.time()

            try:
                # Track generation start
                statsd.increment('veo3.generation.started')
                self.logger.info(f"Starting generation for user {user_id}")

                operation = self.client.models.generate_videos(
                    model="veo-3.0-generate-preview",
                    prompt=prompt,
                    config=types.GenerateVideosConfig()
                )

                # Monitor generation progress
                while not operation.done:
                    time.sleep(30)
                    operation = self.client.operations.get(operation)
                    statsd.increment('veo3.generation.polling')

                # Track successful completion
                duration = time.time() - start_time
                statsd.histogram('veo3.generation.duration', duration)
                statsd.increment('veo3.generation.completed')
                self.logger.info(f"Generation completed in {duration:.2f}s for user {user_id}")

                return operation.result.generated_videos[0]

            except Exception as e:
                # Track failures
                statsd.increment('veo3.generation.failed')
                self.logger.error(f"Generation failed for user {user_id}: {e}")
                raise

Alternative Approaches and Future Considerations

While Veo 3 represents current state-of-the-art video generation, the landscape continues evolving rapidly. Consider these alternative approaches and future developments when planning long-term implementations.

Veo 3 Fast offers reduced generation times at the same $0.75 per second pricing, optimizing for applications requiring quicker turnaround. The Fast variant maintains 720p quality while reducing typical generation time from 5 minutes to 2-3 minutes.

For applications requiring higher resolution or longer duration videos, monitor Google's roadmap for Veo 3 enhancements. Current limitations include the 8-second maximum duration and 720p resolution cap, both likely to improve in future releases.
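Because these limits are fixed today, it can help to reject out-of-range requests before they reach the API. The following is a minimal sketch that checks a request against the 8-second and 720p limits stated in this guide; `check_request_limits` and its constants are hypothetical names, and the limits should be updated if Google raises them.

```python
from typing import List

MAX_DURATION_SECONDS = 8     # maximum duration stated in this guide
MAX_RESOLUTION_HEIGHT = 720  # 720p cap stated in this guide


def check_request_limits(duration_seconds: int, resolution: str = "720p") -> List[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    problems: List[str] = []

    if duration_seconds <= 0:
        problems.append("duration must be a positive number of seconds")
    elif duration_seconds > MAX_DURATION_SECONDS:
        problems.append(
            f"duration {duration_seconds}s exceeds the {MAX_DURATION_SECONDS}s maximum"
        )

    # Resolutions are expected in the form "720p" or "1080p".
    try:
        height = int(resolution.lower().rstrip("p"))
    except ValueError:
        problems.append(f"unrecognized resolution: {resolution!r}")
    else:
        if height > MAX_RESOLUTION_HEIGHT:
            problems.append(
                f"resolution {resolution} exceeds the {MAX_RESOLUTION_HEIGHT}p cap"
            )

    return problems
```

Returning a list rather than raising on the first problem lets an API endpoint report every issue to the caller in one response.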

Alternative video generation services like Runway ML, Pika Labs, and Stability AI's Stable Video Diffusion provide different strengths and pricing models. Runway ML excels at motion graphics and abstract visuals, while Pika Labs focuses on social media content optimization. Evaluate these alternatives based on your specific use case requirements and budget constraints.

Consider hybrid approaches combining multiple AI services for comprehensive video production workflows. Use Veo 3 for primary scene generation, audio separation tools for soundtrack customization, and upscaling services for resolution enhancement:

    class HybridVideoWorkflow:
        # Outline only: generate_base_video, upscale_video, and enhance_audio
        # are placeholders for methods you implement against your chosen services.
        def __init__(self):
            self.veo_client = genai.Client()
            # Initialize other service clients

        async def create_enhanced_video(self, prompt, target_resolution="1080p"):
            # Generate base video with Veo 3
            base_video = await self.generate_base_video(prompt)

            # Enhance resolution using upscaling service
            if target_resolution == "1080p":
                enhanced_video = await self.upscale_video(base_video)
            else:
                enhanced_video = base_video

            # Add custom audio track if needed
            final_video = await self.enhance_audio(enhanced_video, prompt)

            return final_video

The integration of video generation into broader application workflows represents the next frontier. Applications combining Veo 3 with content management systems, social media automation, and marketing platforms will likely define the practical adoption of AI video generation technology.

Google's Veo 3 API provides developers with unprecedented creative capabilities through straightforward implementation patterns. By following this guide's setup procedures, optimization strategies, and production practices, you can integrate professional-quality video generation into your applications while managing costs effectively. The combination of detailed prompt engineering, robust error handling, and scalable architecture ensures reliable video generation that meets production demands.

As the AI video generation landscape continues maturing, Veo 3 establishes a strong foundation for developers exploring dynamic content creation. The model's audio integration, visual quality, and API accessibility position it as a practical choice for applications ranging from social media automation to marketing content generation and creative prototyping platforms.