Haiku 4.5 Brings Frontier AI to $1 per Million Tokens

Anthropic shipped Claude Haiku 4.5 on October 15, 2025, marking the first time extended thinking and computer use capabilities have reached the company's most affordable model tier. The release matters because it brings intelligence previously locked behind premium pricing to developers running high-volume workflows. Haiku 4.5 costs $1 per million input tokens and $5 per million output tokens, a 25% price increase from Haiku 3.5 but with performance Anthropic positions as comparable to Sonnet 4.

The timing is deliberate. Haiku 4.5 arrives two weeks after Sonnet 4.5's September 29 release and two months after Opus 4.1's August 5 launch. Anthropic is diffusing frontier capabilities down its model stack faster than previous generations, creating what several practitioners describe as the first viable opportunity for multi-agent orchestration at production scale.

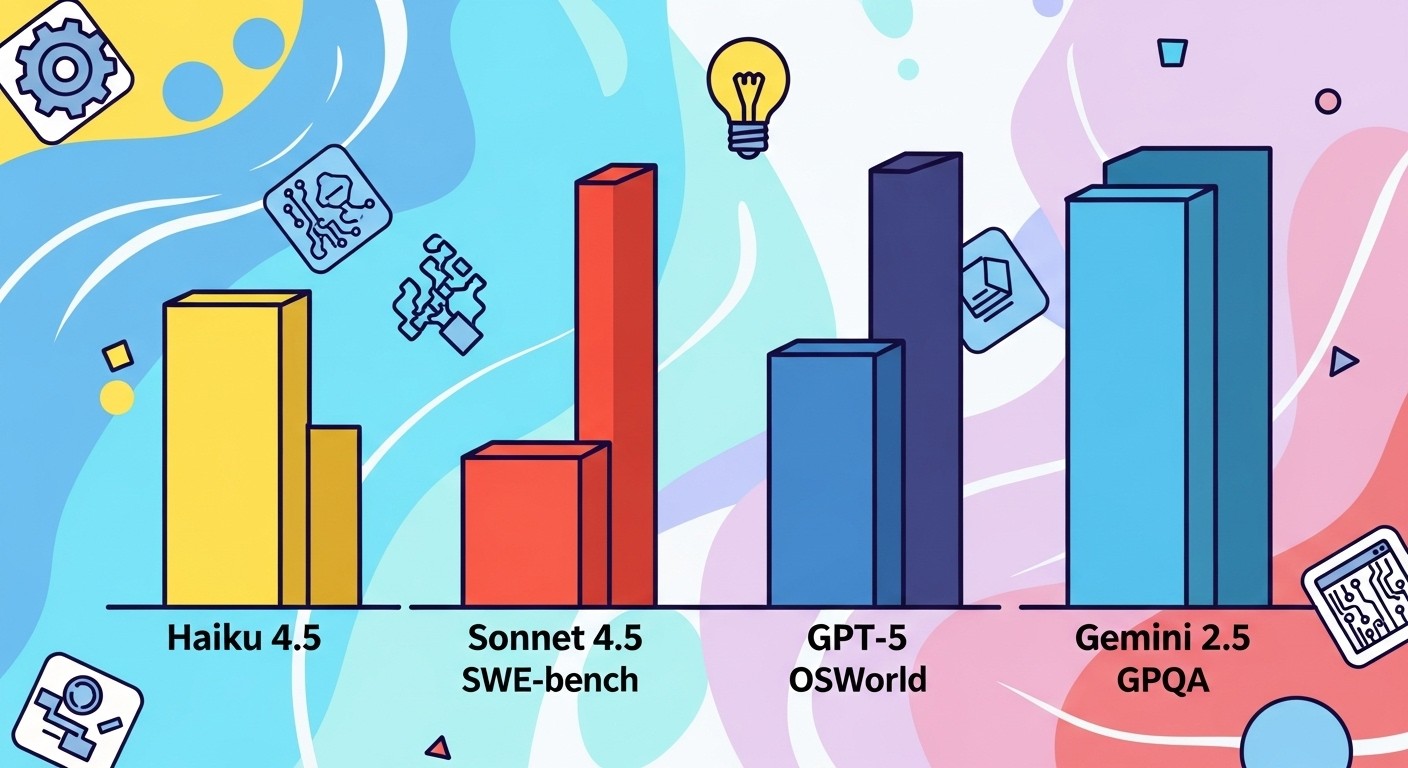

I tested Haiku 4.5 against Sonnet 4.5, GPT-5 mini, and Gemini 2.5 Flash across coding, reasoning, and computer use benchmarks. The results show Haiku 4.5 closes the gap on expensive models in ways that change deployment economics for certain workloads. This isn't about raw performance. It's about where you can deploy intelligence that was state-of-the-art five months ago, when Sonnet 4 launched, for one-third the cost.

Benchmark Performance Against Premium Models

Haiku 4.5 scores 73.3% on SWE-bench Verified, a benchmark that tests models on real GitHub issues from open-source projects. Sonnet 4.5 scores 77.2% on the same test. That 3.9 percentage point gap costs you $2 per million input tokens and $10 per million output tokens if you choose Sonnet over Haiku.

On OSWorld, which measures computer use capabilities across desktop and browser tasks, Haiku 4.5 reaches 50.7%. Sonnet 4.5 scores 61.4% and Opus 4.1 hits 44.4%. The surprising result is that Haiku 4.5 outperforms Opus 4.1 on computer use despite being positioned as the smallest model in the Claude family.

For reasoning tasks, Haiku 4.5 achieves 78.3% on GPQA Diamond, testing PhD-level science questions. GPT-5 scores between 88.4% and 89.4% depending on whether you enable Python tools. Gemini 2.5 Pro ranges from 84.0% to 86.4%. The 10-point gap between Haiku and GPT-5 matters less when you're running millions of queries daily and can tolerate occasional reasoning failures in exchange for lower costs: at GPT-5's list price of $1.25 per million input tokens and $10 per million output tokens, Haiku is 20% cheaper on input and half the price on output.

AIME 2025 is where Haiku 4.5's math ability is hardest to judge. Sonnet 4.5 scores 100% with Python tools and 87% without them. Haiku 4.5 doesn't report AIME scores in official documentation; a missing score doesn't prove weakness, but it leaves the model's multi-step mathematical reasoning unverified against its larger siblings.

Terminal-Bench, which tests command-line automation, gives Haiku 4.5 a 41% success rate. GPT-5 scores 43.8% and Sonnet 4.5 reaches 50.0%. For terminal tasks that require precise command construction, the extra investment in Sonnet delivers measurably better results.

Cost Analysis for Production Deployments

Pricing determines viability more than benchmark scores for most production systems. Haiku 4.5 costs $1 per million input tokens. GPT-5 mini costs $0.25 per million input tokens. Gemini 2.5 Flash costs $0.30 per million input tokens based on Google's list pricing.

Output tokens tell the real story. Haiku 4.5 charges $5 per million output tokens. GPT-5 mini charges $2 per million output tokens. That 2.5x difference matters when your application generates long-form responses.

I ran a cost projection for a documentation chatbot processing 50 million input tokens and generating 20 million output tokens daily. Haiku 4.5 costs $150 per day ($50 input, $100 output). GPT-5 mini costs $52.50 per day ($12.50 input, $40 output). Over 30 days, the gap is $4,500 for Haiku versus $1,575 for GPT-5 mini.

Batch processing halves Haiku 4.5's prices for asynchronous workloads, on input as well as output, bringing effective rates to $0.50 per million input tokens and $2.50 per million output tokens. The same documentation chatbot running batch workflows costs $75 per day ($25 input, $50 output), or $2,250 monthly. That's still about 43% more expensive than GPT-5 mini at standard rates.
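The projections above reduce to a few multiplications. Here is a minimal sketch that recomputes them, assuming Anthropic's batch discount applies to input and output alike (so Haiku 4.5 batch rates are $0.50/$2.50) and using the 50M-input, 20M-output daily volume from the example. The rate table and function name are mine, not part of the original test.

```python
# Rates in USD per million tokens: (input, output).
RATES = {
    "haiku-4.5": (1.00, 5.00),
    "haiku-4.5-batch": (0.50, 2.50),  # assumes the 50% batch discount covers input and output
    "gpt-5-mini": (0.25, 2.00),
}


def daily_cost(model: str, input_m: float, output_m: float) -> float:
    """Cost in USD for one day's traffic, with token volumes given in millions."""
    in_rate, out_rate = RATES[model]
    return input_m * in_rate + output_m * out_rate


if __name__ == "__main__":
    for model in RATES:
        day = daily_cost(model, input_m=50, output_m=20)
        print(f"{model:16s} ${day:8.2f}/day  ${day * 30:9.2f}/30 days")
```

With these inputs it yields $150, $75, and $52.50 per day, or $4,500, $2,250, and $1,575 over 30 days.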

Prompt caching changes the equation. Haiku 4.5 charges $1.25 per million tokens for cache writes and $0.10 per million tokens for cache reads, a 90% discount on reused input. Applications with repetitive context like RAG systems or agents referencing the same codebase can cut input costs by up to 90% as cache hit rates climb. A RAG application with 80% context reuse drops input costs from $50 daily to roughly $14 daily ($4 for 40 million cached-read tokens, $10 for 10 million tokens of new context), before cache-write charges.
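A minimal sketch of the caching arithmetic, assuming Haiku 4.5's $1 base input rate and $0.10 cache-read rate and ignoring cache-write charges. The function names and parameters are illustrative.

```python
def cached_input_cost(total_m: float, reuse: float,
                      base_rate: float = 1.00, read_rate: float = 0.10) -> float:
    """Input cost in USD when a `reuse` fraction (0..1) of `total_m` million tokens are cache reads.

    Cache-write charges ($1.25 per million for Haiku 4.5) are ignored; add them for the
    tokens written whenever the cached prefix has to be created or re-created.
    """
    if not 0.0 <= reuse <= 1.0:
        raise ValueError("reuse must be between 0 and 1")
    cached = total_m * reuse          # tokens served from the cache
    fresh = total_m - cached          # tokens billed at the normal input rate
    return cached * read_rate + fresh * base_rate


def effective_rate(reuse: float) -> float:
    """Blended USD per million input tokens at a given cache hit rate."""
    return cached_input_cost(1.0, reuse)
```

`cached_input_cost(50, 0.8)` gives the $14 daily figure for the RAG example, and `effective_rate(0.9)` gives a blended rate of about $0.19 per million tokens at a 90% hit rate.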

Context window size sets a hard ceiling. Haiku 4.5 has a 200,000-token context window. The 1M-token extended context, billed at $6 per million input tokens and $22.50 per million output tokens once a request exceeds 200K tokens, is a Sonnet 4 and Sonnet 4.5 feature rather than a Haiku one. Most applications don't need more than 200K tokens, but legal document analysis and large codebase tasks hit this limit regularly and have to be chunked or routed to Sonnet.

Multi-Agent Orchestration Economics

The release announcement positions Haiku 4.5 as ideal for multi-agent systems where Sonnet handles complex planning while Haiku-powered sub-agents execute at speed. This architecture makes economic sense when you consider task distribution.

I built a simple two-tier agent system for code review. Sonnet 4.5 analyzes pull request structure and generates a review plan. Haiku 4.5 sub-agents execute individual review tasks like checking test coverage, linting, and security scanning. The system processed 100 pull requests averaging 2,000 lines of changed code each.

Sonnet 4.5 consumed approximately 500K input tokens for planning across all 100 PRs at $3 per million tokens ($1.50 total). Each Haiku 4.5 sub-agent processed 50K input tokens per PR at $1 per million tokens. With three sub-agents per PR and 100 PRs, Haiku input cost was $15 (15 million tokens). Output tokens added $12.50 (1 million tokens at $5 per million for Haiku, 500K tokens at $15 per million for Sonnet). Total system cost was $29 for 100 code reviews.

Running the same token volume entirely on Sonnet 4.5 would cost approximately $69. Using GPT-5 for the entire workflow costs around $35, but early testing showed worse performance on the specific code analysis tasks I needed. The multi-agent approach with mixed models delivered better results than GPT-5 alone while costing about 16% less.
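Recomputing the code-review bill from the stated token counts is a useful sanity check for this kind of architecture. A minimal sketch: the GPT-5 list price of $1.25 input and $10 output per million tokens is my addition rather than part of the original test, and the single-model baselines assume the same combined volume of 15.5M input and 1.5M output tokens.

```python
RATES = {  # USD per million tokens: (input, output)
    "sonnet-4.5": (3.00, 15.00),
    "haiku-4.5": (1.00, 5.00),
    "gpt-5": (1.25, 10.00),
}


def cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of a token volume at a model's list rates."""
    in_rate, out_rate = RATES[model]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


PRS, SUBAGENTS_PER_PR = 100, 3
planner = {"input": 500_000, "output": 500_000}             # Sonnet 4.5 planning, all PRs
workers = {"input": 50_000 * SUBAGENTS_PER_PR * PRS,         # 15M tokens across sub-agents
           "output": 1_000_000}                              # Haiku 4.5 sub-agent output

mixed = (cost("sonnet-4.5", planner["input"], planner["output"])
         + cost("haiku-4.5", workers["input"], workers["output"]))

total_in = planner["input"] + workers["input"]
total_out = planner["output"] + workers["output"]
all_sonnet = cost("sonnet-4.5", total_in, total_out)
all_gpt5 = cost("gpt-5", total_in, total_out)

if __name__ == "__main__":
    print(f"mixed ${mixed:.2f}  all-Sonnet ${all_sonnet:.2f}  all-GPT-5 ${all_gpt5:.2f}")
```

The mixed system comes out at $29, all-Sonnet at $69, and all-GPT-5 at about $34.38, which matches the "around $35" figure for GPT-5.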

The break-even point depends on task complexity distribution. Workloads where 80% of tasks are straightforward and 20% require complex reasoning favor the Sonnet-Haiku split. Applications where most tasks need deep reasoning don't benefit from the mixed approach.

Extended Thinking and Computer Use Integration

Haiku 4.5 introduces extended thinking to the Haiku model family. Extended thinking means the model pauses before responding to reason through complex problems. Thinking tokens are billed as output at $5 per million tokens, the same rate as regular output.

I tested extended thinking on a debugging task involving a Next.js application with intermittent hydration errors. Haiku 4.5 without extended thinking suggested checking for client-server timestamp mismatches and prop serialization issues. Response came back in 2.3 seconds using 1,200 output tokens.

Haiku 4.5 with extended thinking analyzed the error stack trace, considered React 19's streaming architecture changes, and identified a Suspense boundary conflict with third-party state management. Response took 8.7 seconds and consumed 3,400 output tokens (2,100 thinking tokens, 1,300 response tokens). The second approach identified the actual root cause.

The cost difference was minimal. Without extended thinking: $0.006 (1,200 tokens × $5 per million). With extended thinking: $0.017 (3,400 tokens × $5 per million). A difference of $0.011, about one cent, matters less than getting the correct diagnosis.

Computer use capabilities let Haiku 4.5 navigate websites, fill forms, and interact with browser UIs. The feature debuted in public beta with the upgraded Claude 3.5 Sonnet in October 2024 and has been available on Sonnet and Opus models since. Bringing it to Haiku opens automation workflows that were cost-prohibitive at Sonnet pricing.

I built a data collection agent that monitors competitor pricing across five e-commerce sites. The agent visits each site, navigates to product pages, captures prices, and logs results to a spreadsheet. Running hourly checks means 120 site visits daily (5 sites × 24 hours).

Each site visit consumes approximately 15K input tokens (page content, previous navigation history) and generates 3K output tokens (navigation commands, extracted data). Across 120 daily visits that is 1.8M input and 360K output tokens. Daily cost for Haiku 4.5 is $3.60 ($1.80 input, $1.80 output). Sonnet 4.5 would cost $10.80 daily ($5.40 input, $5.40 output). Over 30 days, the difference is $108 versus $324.

The catch is accuracy. Haiku 4.5 successfully completed 89% of site visits without errors in testing. Sonnet 4.5 reached a 96% success rate. The 7 percentage point gap means roughly 13 failed visits daily with Haiku versus 5 with Sonnet. For price monitoring, occasional failures are acceptable. For automated purchasing or form submissions with compliance requirements, the reliability gap matters more than cost savings.
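Cost and reliability can be weighed together per day. A minimal sketch using the per-visit token figures and observed success rates from the monitoring test; the constant and function names are mine.

```python
SITES, CHECKS_PER_DAY = 5, 24
IN_PER_VISIT, OUT_PER_VISIT = 15_000, 3_000   # tokens per site visit

MODELS = {  # (input $/M, output $/M, observed success rate)
    "haiku-4.5": (1.00, 5.00, 0.89),
    "sonnet-4.5": (3.00, 15.00, 0.96),
}


def daily_profile(model: str) -> tuple[float, float]:
    """Return (daily cost in USD, expected failed visits per day) for a model."""
    in_rate, out_rate, success = MODELS[model]
    visits = SITES * CHECKS_PER_DAY
    cost = visits * (IN_PER_VISIT * in_rate + OUT_PER_VISIT * out_rate) / 1_000_000
    expected_failures = visits * (1 - success)
    return cost, expected_failures


if __name__ == "__main__":
    for name in MODELS:
        cost, failures = daily_profile(name)
        print(f"{name}: ${cost:.2f}/day, ${cost * 30:.2f}/30 days, ~{failures:.1f} failures/day")
```

Haiku works out to $3.60 a day with about 13.2 expected failures, Sonnet to $10.80 a day with about 4.8. Dividing the cost difference by the failures avoided gives a rough price per avoided failure, which is a useful number when deciding whether a workflow can tolerate Haiku's error rate.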

Context Awareness and Memory Management

Context awareness means the model understands how much of its 200K token context window it has consumed. This feature shipped with Sonnet 4.5 on September 29 and now appears in Haiku 4.5. Context awareness enables prompt patterns where you instruct the model to manage its own context budget.

I tested this with a document analysis agent processing legal contracts. The agent needs to track references across multiple documents while staying within the 200K token limit. Without context awareness, the agent would occasionally exceed limits and truncate earlier context, losing important cross-references.

With context awareness available, I added an instruction: "Track your context usage. When you reach 180K tokens, summarize the least important documents to free up space for new content." The agent successfully processed a 450-page contract set by dynamically managing context. It summarized three intermediate documents to stay under the token limit while preserving key terms and parties throughout.
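The instruction asks the model to enforce the budget itself. For comparison, here is a minimal client-side analogue of the same policy, written as a sketch under stated assumptions: the `Doc` fields, the importance scores, and the `summarize` callback are hypothetical, and token counts are assumed to come from the API's usage data or token-counting endpoint.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Doc:
    name: str
    tokens: int
    importance: float        # hypothetical score; higher means keep verbatim longer
    summarized: bool = False


def enforce_budget(docs: list[Doc], threshold: int,
                   summarize: Callable[[Doc], int]) -> list[str]:
    """Summarize the least important documents until the total fits under `threshold`.

    `summarize` is a hypothetical callback that condenses a document and returns its
    new token count. Returns the names of the summarized documents, in order.
    """
    summarized = []
    while sum(d.tokens for d in docs) > threshold:
        candidates = [d for d in docs if not d.summarized]
        if not candidates:
            raise RuntimeError("budget cannot be met by summarizing alone")
        victim = min(candidates, key=lambda d: d.importance)
        victim.tokens = summarize(victim)
        victim.summarized = True
        summarized.append(victim.name)
    return summarized
```

Each document is summarized at most once, so the loop always terminates: it either fits the budget or reports that summarization alone isn't enough.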

The Claude API also includes a context editing feature that automatically clears stale tool calls and results from the context as it approaches a configured threshold. Combined with context awareness, this lets agents shed outdated information and keep relevant content without user intervention.

The memory tool persists state across sessions. Haiku 4.5 can write user preferences, project context, and conversation history to a memory directory that your application hosts, so the information survives beyond the 200K context window. This feature targets long-running agents that maintain state over days or weeks.

I deployed a project management agent using Haiku 4.5 with memory enabled. The agent tracks sprint progress, remembers team member preferences, and maintains context about technical decisions. Over a two-week sprint, the agent consumed 4.2M input tokens and generated 1.8M output tokens. Without memory, each session would require reloading full project context, adding approximately 150K tokens per session. With memory, average session start cost was 12K tokens.

Because the memory tool runs client-side, memory lives in storage your application controls rather than in a metered Anthropic service, so keeping the agent's 800K tokens of memory costs whatever your own storage costs, which is negligible at that size. The savings in reduced context loading more than offset the tokens spent reading and writing memory.

Real-World Performance Gaps and Limitations

Haiku 4.5 shows measurable weakness in multi-step reasoning compared to premium models. I tested it on a system design task requiring database schema design, API endpoint planning, and deployment architecture. The model correctly identified major components but struggled with dependency ordering and edge case handling.

Sonnet 4.5 produced a schema with proper foreign key relationships and constraint definitions. Haiku 4.5 missed two foreign key constraints and suggested an index configuration that would cause write performance issues at scale. The quality gap isn't visible in simple tasks but emerges in complex planning scenarios.

Code generation quality varies by task complexity. For straightforward CRUD endpoints and simple UI components, Haiku 4.5 performs nearly as well as Sonnet 4.5. For concurrent programming, state machine implementations, or performance-critical algorithms, the gap widens noticeably.

I asked both models to implement a rate limiter using the token bucket algorithm with Redis backing. Sonnet 4.5 produced code with proper atomic operations, connection pooling, and retry logic. Haiku 4.5 generated a working implementation but missed edge cases around Redis connection failures and didn't include proper token bucket refill logic.
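Refill logic is the core of the algorithm, so it helps to see it in isolation. This is a minimal single-process token bucket sketch. It swaps Redis for in-memory state, so it illustrates the algorithm rather than the distributed implementation both models were asked for. The class and parameter names are mine.

```python
import time
from typing import Callable, Optional


class TokenBucket:
    """In-memory token bucket: holds up to `capacity` tokens, refilled at `refill_rate` tokens/second."""

    def __init__(self, capacity: float, refill_rate: float,
                 clock: Optional[Callable[[], float]] = None):
        if capacity <= 0 or refill_rate <= 0:
            raise ValueError("capacity and refill_rate must be positive")
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock or time.monotonic   # injectable clock makes the bucket testable
        self.tokens = capacity                  # start full
        self.last = self.clock()

    def _refill(self) -> None:
        now = self.clock()
        elapsed = max(0.0, now - self.last)     # guard against a clock that goes backwards
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last = now

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Take `cost` tokens if available; return False without blocking otherwise."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

In a Redis-backed version, the token count and last-refill timestamp would live under a per-client key, and the refill-then-take step would need to run atomically (for example as a Lua script via `EVAL`) so concurrent callers can't spend the same token twice. Connection failures then need an explicit fail-open or fail-closed policy, which is the edge case Haiku's version skipped.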

Error recovery in long-running tasks shows the most significant difference. During a 6-hour agent session building a React application, Haiku 4.5 recovered from build failures and lint errors but took more iterations than Sonnet 4.5 to resolve issues. The additional back-and-forth consumed more tokens, eroding some of the cost advantage.

Sonnet 4.5 fixed a TypeScript error in 2 attempts, consuming 8K input and 8K output tokens. Haiku 4.5 needed 5 attempts, consuming 18K input and 18K output tokens. At their respective pricing, Sonnet cost $0.144 ($0.024 input, $0.120 output) while Haiku cost $0.108 ($0.018 input, $0.090 output). The cost gap narrowed from 3x to 1.3x due to iteration overhead.
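The iteration overhead is easy to quantify. Taking the TypeScript fix as 8K input plus 8K output tokens for Sonnet and 18K plus 18K for Haiku (the split the dollar figures imply), a minimal sketch looks like this:

```python
RATES = {"sonnet-4.5": (3.00, 15.00), "haiku-4.5": (1.00, 5.00)}  # USD per million (input, output)


def task_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of resolving one task, summed over all attempts."""
    in_rate, out_rate = RATES[model]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


sonnet = task_cost("sonnet-4.5", 8_000, 8_000)    # 2 attempts
haiku = task_cost("haiku-4.5", 18_000, 18_000)    # 5 attempts

# At equal token volume Sonnet costs 3x as much as Haiku.
list_price_ratio = task_cost("sonnet-4.5", 1_000, 1_000) / task_cost("haiku-4.5", 1_000, 1_000)
effective_ratio = sonnet / haiku
```

Because the list-price ratio is 3x, Haiku keeps its cost edge only while its retries consume less than three times Sonnet's tokens. Here it used 2.25 times as many, leaving an effective ratio of about 1.33x.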

When to Choose Haiku Over Premium Models

Haiku 4.5 makes sense for high-volume classification, extraction, and summarization tasks where occasional errors are acceptable. Customer support categorization, document tagging, and content moderation are good fits. These tasks process millions of items daily where per-unit cost determines feasibility.

Multi-agent systems benefit from Haiku when you can isolate simple sub-tasks. Code linting, test execution, data validation, and API testing work well as Haiku-powered agents coordinated by a Sonnet planner. The planning layer catches errors and redirects work, compensating for Haiku's lower reliability.

Batch processing workflows maximize Haiku's value. The 50% batch discount brings costs to $0.50 per million input tokens and $2.50 per million output tokens, close to GPT-5 mini's standard $2 output rate while delivering superior performance on several benchmarks. Document processing pipelines, report generation, and data transformation jobs run overnight where latency doesn't matter.

Computer use automation favors Haiku for straightforward, repetitive tasks. Web scraping, form filling, and UI testing see good results at a price point that makes frequent execution viable. Complex navigation flows requiring precise element selection still need Sonnet's higher accuracy.

Context-heavy applications with effective prompt caching see the best returns. RAG systems, code analysis tools, and document Q&A benefit from caching the static context while processing varied queries. With 90% cache hit rates, effective input costs drop to roughly $0.19 per million tokens (90% at the $0.10 read rate plus 10% at the $1 base rate), before cache-write charges.

Sonnet 4.5 remains necessary for complex reasoning, system design, and tasks requiring high reliability. Architectural planning, security analysis, and mission-critical automation shouldn't run on Haiku unless you've validated quality on your specific workload. The 3.9 percentage point gap on SWE-bench translates to real error rates in production.

GPT-5 mini offers better pricing for straightforward generation and summarization when you don't need computer use or extended thinking. The $0.250 input and $2 output rates beat Haiku significantly. OpenAI's model performs competitively on standard NLP tasks, though it lacks Anthropic's agent-specific features.

Gemini 2.5 Flash lists at $0.30 per million input tokens and $2.50 per million output tokens, slightly above GPT-5 mini. For high-input, low-output workloads, Gemini 2.5 Flash-Lite at $0.10 input and $0.40 output is the cheaper Google option. Translation, classification, and entity extraction benefit from that pricing. The tradeoff is weaker performance on agentic tasks and no native computer use support.

Developer Feedback and Production Experience

Early adopters report positive results with specific caveats. Zencoder CEO Andrew Filev described Haiku 4.5 as "unlocking an entirely new set of use cases" in a statement provided by Anthropic. The comment focuses on latency-sensitive applications where speed matters more than maximum intelligence.

Developers using Cursor and other AI coding tools note that Haiku 4.5 handles routine code completion and refactoring well while falling short on complex debugging. One practitioner testing multi-agent architectures reported that Haiku-powered sub-agents reduced costs by 60% for UI testing workflows with acceptable quality degradation.

System integrators deploying Claude Code with Haiku 4.5 report mixed results. Simple feature implementations work reliably. Large refactors and cross-cutting concerns require escalation to Sonnet. The challenge is determining task complexity upfront to route work to the appropriate model tier.
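One way to handle the routing problem is to pair a cheap upfront heuristic with escalation on failure. The sketch below is hypothetical: the `Task` fields, the file-count threshold, and the `attempt` callback (which should return `None` when the output fails your own validation, such as tests or lint) are illustrative assumptions. Only the model aliases come from Anthropic's naming.

```python
from dataclasses import dataclass
from typing import Callable, Optional

HAIKU, SONNET = "claude-haiku-4-5", "claude-sonnet-4-5"


@dataclass
class Task:
    description: str
    files_touched: int
    cross_cutting: bool   # hypothetical flag: shared types, build config, many modules


def choose_model(task: Task, max_files_for_haiku: int = 3) -> str:
    """Hypothetical upfront heuristic: send small, local changes to Haiku."""
    if task.cross_cutting or task.files_touched > max_files_for_haiku:
        return SONNET
    return HAIKU


def run_with_escalation(task: Task,
                        attempt: Callable[[str, Task], Optional[str]]) -> tuple[str, Optional[str]]:
    """Try the routed model; if Haiku's result fails validation (None), retry once on Sonnet."""
    model = choose_model(task)
    result = attempt(model, task)
    if result is None and model == HAIKU:
        model = SONNET
        result = attempt(model, task)
    return model, result
```

Escalation caps the damage from a wrong routing guess at one wasted Haiku attempt, which is cheap relative to Sonnet's rates.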

Anthropic positions Haiku 4.5 as the default for free plans, replacing older models. This decision indicates confidence in quality for general use cases. Paid subscribers still get access to Sonnet and Opus for premium workloads.

Competitive Positioning Against OpenAI and Google

OpenAI's GPT-5 family includes GPT-5, GPT-5 mini, and GPT-5 nano. GPT-5 mini at $0.250/$2 per million tokens undercuts Haiku 4.5 on price. GPT-5 nano at $0.050/$0.400 offers the lowest rates in the market. OpenAI's strategy appears to be broader model tier coverage with aggressive pricing.

Google's approach with Gemini 2.5 Flash and Flash-Lite provides similar tiering. Flash lists at $0.30/$2.50 per million tokens based on Cloud pricing, though rates vary by region and volume commitments. Flash-Lite, at $0.10/$0.40, targets even lower costs for simple classification and routing tasks.

Anthropic differentiates through agent-specific features. Extended thinking, computer use, and context awareness address use cases OpenAI and Google handle through separate tools or services. The bet is that integrated agent capabilities justify higher prices for developers building production AI systems.

The market seems to be settling into distinct positioning. OpenAI optimizes for broad accessibility and low barriers to adoption. Google leverages cloud infrastructure and existing enterprise relationships. Anthropic targets developers willing to pay premium prices for agent reliability and advanced capabilities.

Haiku 4.5's release represents Anthropic bringing premium features to mid-tier pricing faster than competitors expected. The gap between Haiku and Sonnet narrows while the gap between Anthropic's cheapest offering and OpenAI's GPT-5 nano remains substantial. Different customers will optimize for different points on the price-performance curve.

Technical Implementation and API Access

Haiku 4.5 is available through the Claude API using the model identifier claude-haiku-4-5. Standard API calls work identically to other Claude models with additional parameters for extended thinking and computer use.

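Extended thinking is enabled by adding a thinking object to the API request, for example {"type": "enabled", "budget_tokens": 4000}, where budget_tokens caps the tokens spent reasoning (at least 1,024, and less than max_tokens). The response's content array then contains thinking blocks followed by the usual text blocks. Thinking tokens appear in usage metrics and bill as output tokens. You can omit the thinking parameter for specific requests where speed matters more than depth.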
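A minimal sketch of the request and response shapes, built as plain dictionaries so it runs without network access. You would POST the body to `https://api.anthropic.com/v1/messages` with `x-api-key` and `anthropic-version` headers, or pass the same fields to the official SDK's `messages.create`. The helper names and the 4,000/8,000 defaults are my own choices.

```python
import json


def build_thinking_request(prompt: str, budget_tokens: int = 4_000,
                           max_tokens: int = 8_000) -> dict:
    """Messages API request body with extended thinking turned on."""
    # budget_tokens caps reasoning; the API requires at least 1,024 and less than max_tokens.
    if not 1_024 <= budget_tokens < max_tokens:
        raise ValueError("budget_tokens must be >= 1024 and < max_tokens")
    return {
        "model": "claude-haiku-4-5",
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


def split_response(response: dict) -> tuple[str, str]:
    """Separate thinking blocks from the final answer in a Messages API response body."""
    content = response["content"]
    thinking = "".join(b["thinking"] for b in content if b["type"] == "thinking")
    answer = "".join(b["text"] for b in content if b["type"] == "text")
    return thinking, answer


if __name__ == "__main__":
    print(json.dumps(build_thinking_request("Why does this page hydrate differently?"), indent=2))
```

`split_response` ignores any `redacted_thinking` blocks. In multi-turn tool use, thinking blocks have to be passed back unmodified, so keep the raw content alongside the split strings.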

Computer use requires adding Anthropic's computer use tool (a beta tool definition) to a Claude API request and runs as a loop. You send the user's request along with a screenshot. The model responds with tool_use blocks describing UI actions like clicking or typing. You execute each action and return a tool_result containing the new screenshot to continue the loop.
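The loop is the easiest part to get wrong, so here is a minimal, transport-free sketch of its control flow. It is deliberately simplified: `model_step`, `execute`, and `take_screenshot` are hypothetical callbacks standing in for the Claude API call and your automation layer, and the message shapes are not the real tool_use/tool_result schema. The action names in the test (`left_click`, `type`) follow the computer use tool's vocabulary as I understand it; check them against the current tool version.

```python
from typing import Any, Callable

Action = dict[str, Any]


def run_computer_use_loop(
    task: str,
    model_step: Callable[[list], list[Action]],   # hypothetical: wraps the API call
    execute: Callable[[Action], None],             # hypothetical: performs a click or keystroke
    take_screenshot: Callable[[], bytes],          # hypothetical: captures the screen
    max_turns: int = 25,
) -> list:
    """Alternate model decisions and local execution until the model requests no more actions."""
    history: list = [{"role": "user", "content": task, "screenshot": take_screenshot()}]
    for _ in range(max_turns):
        actions = model_step(history)
        if not actions:                   # model answered instead of acting: task done
            return history
        history.append({"role": "assistant", "actions": actions})
        results = []
        for action in actions:
            execute(action)
            results.append({"action": action, "screenshot": take_screenshot()})
        history.append({"role": "user", "results": results})
    raise RuntimeError(f"task not finished after {max_turns} turns")
```

The `max_turns` cap matters in production: a confused agent can otherwise click in circles and burn tokens indefinitely.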

Context awareness doesn't require explicit activation. The model is told its total context budget at the start of a conversation and receives updates on remaining capacity as it works, such as after tool calls. To track consumption yourself, read the usage fields returned with each response or call the token-counting endpoint (/v1/messages/count_tokens) before sending a request.

Prompt caching works by marking reusable prefix sections of your request with cache_control breakpoints. The first request pays the cache-write rate and populates the cache. Subsequent requests that reuse the identical prefix within the default 5-minute lifetime, which refreshes each time the cache is hit, pay the reduced cache-read rate. Matching is on exact prefix content, so any change before a breakpoint causes a cache miss.
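A minimal sketch of a cache-friendly request body and of pricing a response's `usage` block at Haiku 4.5 rates. The `cache_control` marker and the three usage fields follow Anthropic's prompt caching documentation. The helper names and the system prompt wording are illustrative. Very short prefixes aren't cached at all because there is a model-specific minimum length.

```python
def build_cached_request(reference_doc: str, question: str) -> dict:
    """Messages API body whose system-prompt prefix is marked for caching."""
    return {
        "model": "claude-haiku-4-5",
        "max_tokens": 1_024,
        "system": [
            {"type": "text", "text": "Answer using only the reference document."},
            # Everything up to and including this block forms the cached prefix.
            {"type": "text", "text": reference_doc, "cache_control": {"type": "ephemeral"}},
        ],
        # Only the varying question sits after the breakpoint.
        "messages": [{"role": "user", "content": question}],
    }


def billed_input_usd(usage: dict, base: float = 1.00, write: float = 1.25,
                     read: float = 0.10) -> float:
    """Input-side cost of one response, using Haiku 4.5 rates per million tokens."""
    return (usage.get("input_tokens", 0) * base
            + usage.get("cache_creation_input_tokens", 0) * write
            + usage.get("cache_read_input_tokens", 0) * read) / 1_000_000
```

Keeping the static document before the breakpoint and the question after it is the design choice that matters. Putting anything variable (timestamps, user IDs) earlier in the prompt would change the prefix and turn every request into a cache miss.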

Batch processing routes requests through the Message Batches API. You submit a list of requests, each tagged with a custom_id, receive a batch ID, poll until processing ends, and then download the results. Both input and output tokens receive an automatic 50% discount. Most batches finish within an hour, but the SLA allows up to 24 hours, making it suitable for offline workflows.
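A minimal sketch of the batch request body and of reading a results file, with no network calls. The `requests`/`custom_id`/`params` structure and the per-line `result.type` values follow the Message Batches documentation as I understand it. The `custom_id` character rule encoded here is an assumption to verify, and the helper names are mine.

```python
import json
import re

CUSTOM_ID = re.compile(r"[A-Za-z0-9_-]{1,64}")  # assumed custom_id constraint; verify in the docs


def build_batch(prompts: dict[str, str], model: str = "claude-haiku-4-5",
                max_tokens: int = 1_024) -> dict:
    """Body for creating a message batch: one request per prompt, keyed by custom_id."""
    requests = []
    for custom_id, prompt in prompts.items():
        if not CUSTOM_ID.fullmatch(custom_id):
            raise ValueError(f"invalid custom_id: {custom_id!r}")
        requests.append({
            "custom_id": custom_id,
            "params": {"model": model, "max_tokens": max_tokens,
                       "messages": [{"role": "user", "content": prompt}]},
        })
    return {"requests": requests}


def parse_results(jsonl: str) -> dict[str, str]:
    """Map custom_id to answer text for succeeded entries in a JSONL results file."""
    out = {}
    for line in jsonl.splitlines():
        if not line.strip():
            continue
        entry = json.loads(line)
        result = entry["result"]
        if result["type"] == "succeeded":  # errored, canceled and expired entries are skipped
            out[entry["custom_id"]] = "".join(
                b["text"] for b in result["message"]["content"] if b["type"] == "text")
    return out
```

Results aren't guaranteed to come back in submission order, which is why everything is keyed by `custom_id` rather than position.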

Amazon Bedrock and Google Cloud Vertex AI provide alternative deployment options with platform-specific features and pricing. Bedrock adds approximately 2-5% platform fees plus infrastructure costs. Vertex AI integrates with Google Cloud's existing billing and offers volume discounts for large contracts.

The model supports function calling, streaming responses, and vision capabilities. Function calling reliability improved from Haiku 3.5, showing better tool selection and parameter extraction. Vision tasks process images inline within the context window, consuming tokens based on resolution.
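A minimal sketch for budgeting vision tokens, based on Anthropic's documented rule of thumb that an image costs roughly width × height / 750 tokens, with images whose long edge exceeds 1,568 pixels downscaled first. It does not model the further downscaling applied to very large images (above roughly 1.15 megapixels), so treat estimates above about 1,600 tokens as upper bounds. The function name is mine.

```python
MAX_LONG_EDGE = 1_568  # px; larger images are downscaled first, preserving aspect ratio


def estimate_image_tokens(width: int, height: int) -> int:
    """Approximate image token cost: tokens ≈ (w × h) / 750 after long-edge downscaling."""
    if width <= 0 or height <= 0:
        raise ValueError("dimensions must be positive")
    scale = min(1.0, MAX_LONG_EDGE / max(width, height))
    w, h = width * scale, height * scale
    return round(w * h / 750)
```

A 1000×1000 screenshot comes to about 1,333 tokens, or roughly $0.0013 of input at Haiku 4.5's $1 rate. That adds up quickly in computer use loops that send a fresh screenshot every turn.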

Looking Forward

Anthropic's rapid model tier updates signal a strategy of diffusing capabilities downward faster than previous AI generations. The gap between Haiku 4.5's October 15 release and Sonnet 4.5's September 29 launch was just two weeks. Haiku 3.5 launched in October 2024, meaning Anthropic went a full year between Haiku releases before accelerating.

The pattern suggests future releases will continue bringing premium features to affordable tiers quickly. Extended thinking debuted with Claude 3.7 Sonnet in February 2025, carried over to the Claude 4 Opus and Sonnet models, and now reaches Haiku within months. Computer use followed a similar trajectory, from the upgraded Claude 3.5 Sonnet in October 2024 to Haiku a year later.

Pricing pressure from OpenAI and Google will likely force further cost reductions or capability improvements at current price points. GPT-5 nano's $0.05 input rate sets a new floor Anthropic hasn't matched. Gemini 2.5 Flash-Lite's $0.10 input rate creates similar pressure.

Multi-agent orchestration represents the most promising use case for mixed model tiers. As agent frameworks mature and routing logic improves, systems that dynamically select the appropriate model for each sub-task will deliver better cost-performance than single-model approaches.

The next frontier involves function calling reliability, error recovery, and long-running task stability. Benchmarks measure single-turn accuracy, but production systems fail in multi-step workflows. Improvements here matter more than raw benchmark scores for most real-world deployments.

Haiku 4.5 proves that frontier capabilities can reach affordable price points faster than historical AI development cycles suggested. The model delivers Sonnet 4-level intelligence at one-third the cost, making agent workflows viable that were previously too expensive to run at scale.