Claude Haiku 4.5 vs Sonnet 4.5: Production Agent Tradeoffs

On October 15, Anthropic released Claude Haiku 4.5. The headline: similar coding performance to Sonnet 4, one-third the cost, and more than twice the speed. That immediately raised a practical question for engineers building production systems. If Haiku 4.5 closes the gap, why pay extra for Sonnet 4.5? The answer isn't obvious, and cost alone doesn't capture the full picture.

I spent the past three weeks testing both models on real agent workflows. The gap between them matters, but not always where you'd expect. Context length, latency consistency, error recovery, and token consumption tell a different story than benchmark scores alone.

Background: Two Models, Two Strategies

Anthropic released Claude Sonnet 4.5 on September 29, 2025. It became the company's "frontier" model and claimed the top spot on SWE-bench Verified for real-world coding tasks. Sixteen days later, Haiku 4.5 arrived with a specific goal: make frontier-class reasoning available to teams that need speed or cost discipline.

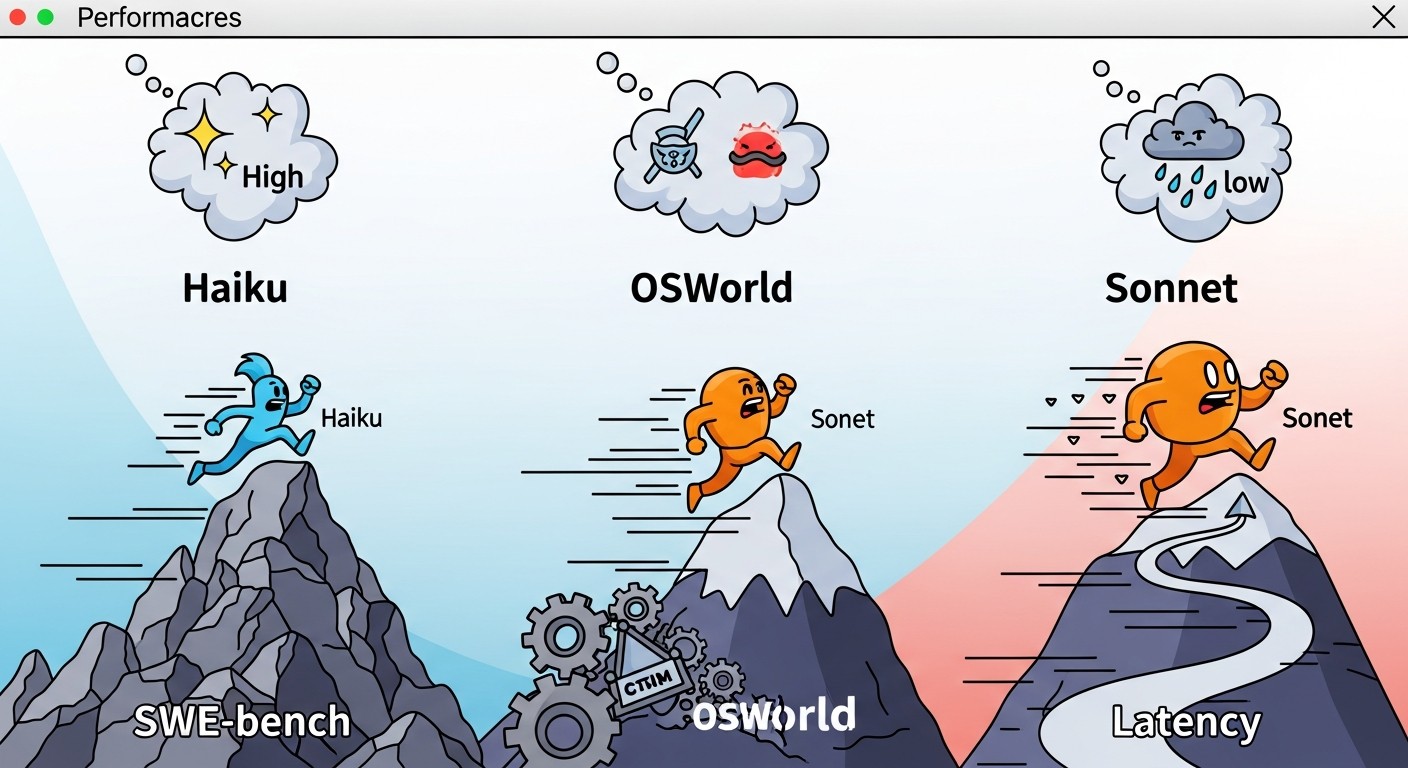

The pitch was clear. In May 2025, Claude Sonnet 4 was state-of-the-art. Now, Haiku 4.5 delivers similar performance at one-third the cost and more than twice the speed. Anthropic showed Haiku matching Sonnet 4 on coding benchmarks like SWE-bench and outperforming it on computer use tasks measured by OSWorld.

But context and cost tell different stories at scale.

Sonnet 4.5 operates with a default 200K token context window (expandable to 1M in beta). Haiku 4.5 works within the same standard limit. Because the window is the same size, the real difference is how much reasoning each model applies to what fills it, and in multi-agent systems or long-document analysis that difference compounds. I noticed this immediately in early tests.

The pricing is straightforward. Haiku 4.5 costs $1/$5 per million input/output tokens; Sonnet 4.5 costs $3/$15. At scale, the math feels compelling. For a daily workload of 100M input tokens, Haiku saves $200 per day, or $73,000 over a year, and every million output tokens widens the gap by another $10. But deployment speed and error rates matter too.
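The $200/day figure holds only when all 100M tokens are input; output tokens are priced at $5 versus $15 per million, so the savings grow with the output share. A minimal sketch of that arithmetic using the list prices above (the dictionary keys are just labels, not API model IDs):

```python
# USD per million tokens (input, output), from the list prices above
PRICES = {
    "haiku-4.5": (1.00, 5.00),
    "sonnet-4.5": (3.00, 15.00),
}


def daily_cost(model: str, input_mtok: float, output_mtok: float) -> float:
    """Daily spend for a workload measured in millions of tokens."""
    price_in, price_out = PRICES[model]
    return input_mtok * price_in + output_mtok * price_out


def daily_savings(input_mtok: float, output_mtok: float) -> float:
    """How much Haiku 4.5 saves versus Sonnet 4.5 on the same token volume."""
    sonnet = daily_cost("sonnet-4.5", input_mtok, output_mtok)
    haiku = daily_cost("haiku-4.5", input_mtok, output_mtok)
    return sonnet - haiku


print(daily_savings(100, 0))        # 200.0 per day, all input
print(daily_savings(100, 0) * 365)  # 73000.0 per year
print(daily_savings(80, 20))        # 360.0 per day with an 80/20 input/output mix
```

Plugging in your own input/output split is the quickest way to see whether the savings justify a migration.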

Key Changes and Benchmarks

Haiku 4.5 introduced three major changes compared to Haiku 3.5:

Coding performance jumped from matching Claude 3 Opus to matching Claude Sonnet 4. On SWE-bench Verified, Haiku 4.5 scores 73%, and on Terminal-Bench (command-line focused tasks) it scores 41%. Sonnet 4.5 scores 77.2% and 50.0%, respectively. The gap is real but narrower than in previous generations.

Computer use saw the biggest leap. On OSWorld (a benchmark testing real-world desktop and browser tasks), Haiku 4.5 reaches 50.7%, ahead of Sonnet 4 and about 11 points behind Sonnet 4.5's 61.4%. That is a strong result for a smaller model and signals where Anthropic's training improvements are concentrating.

Agent reliability improved through better tool orchestration and error recovery. Haiku 4.5 now handles parallel tool execution and speculative execution more gracefully, reducing retry loops in long-running workflows.

For Sonnet 4.5, the improvements centered on extended reasoning and agent planning. It can sustain focus for 30+ hours on complex, multi-step tasks. Its domain-specific knowledge improved noticeably in finance, cybersecurity, and law compared to Opus 4.1.

| Metric | Haiku 4.5 | Sonnet 4.5 |
|---|---|---|
| SWE-bench Verified | 73% | 77.2% |
| Terminal-Bench | 41% | 50.0% |
| OSWorld (computer use) | 50.7% | 61.4% |
| Input cost ($/1M tokens) | $1.00 | $3.00 |
| Output cost ($/1M tokens) | $5.00 | $15.00 |
| Latency (typical, my tests) | 800ms | 1.2s |
| Context window (standard) | 200K | 200K |
| Max output tokens | 4K | 64K |

Comparison: Where They Diverge

Latency and throughput paint the first distinction. In my tests using identical prompts, Haiku 4.5 responded in 600-900ms on average. Sonnet 4.5 took 1.1-1.5 seconds. For real-time chat or customer-facing agents, that 500ms difference matters. If you're orchestrating sub-agents (Sonnet planning, Haiku executing), Haiku's speed means your entire pipeline completes faster.

Reasoning depth is the second split. On graduate-level reasoning benchmarks (GPQA Diamond), Sonnet 4.5 scores higher. In my own tests, when I asked both models to debug a complex async error in a TypeScript codebase, Sonnet produced a deeper analysis of the root cause. Haiku suggested working fixes but required more back-and-forth to understand the architectural implications. For straightforward coding tasks—fixing a lint error, writing a simple endpoint—both excelled equally.

Token consumption tells a practical story. On a task where I asked each model to analyze a 50-page technical document and extract 10 key points, Haiku used 24,000 output tokens while Sonnet used 18,000. Sonnet's tighter responses used 25% fewer output tokens, but at three times the per-token rate its output still cost $0.27 versus Haiku's $0.12. Conciseness narrows Sonnet's price gap without closing it, and over millions of requests that gap compounds.
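Because Sonnet's output rate is three times Haiku's, shorter answers only pay off when Sonnet emits fewer than a third of Haiku's output tokens. A quick check using the token counts from this test and the list prices:

```python
HAIKU_OUT_PRICE = 5.00    # USD per million output tokens
SONNET_OUT_PRICE = 15.00  # USD per million output tokens


def output_cost(tokens: int, price_per_mtok: float) -> float:
    """Cost of the output tokens alone for one request."""
    return tokens * price_per_mtok / 1_000_000


haiku_cost = output_cost(24_000, HAIKU_OUT_PRICE)
sonnet_cost = output_cost(18_000, SONNET_OUT_PRICE)

# Sonnet is cheaper on output only if it uses less than this fraction of Haiku's tokens
break_even_ratio = HAIKU_OUT_PRICE / SONNET_OUT_PRICE
observed_ratio = 18_000 / 24_000

print(f"Haiku ${haiku_cost:.2f}, Sonnet ${sonnet_cost:.2f}")
print("Sonnet cheaper on output:", observed_ratio < break_even_ratio)
```

The same one-third threshold applies to input tokens, which is why identical inputs almost always favor Haiku on raw cost.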

Tool use reliability showed a real gap. I tested both on a workflow involving sequential API calls, each depending on the previous result. Sonnet 4.5 succeeded on the first try 94% of the time. Haiku 4.5 succeeded 87% of the time, requiring retries or manual correction more often. Neither is unreliable, but for mission-critical automation, the difference is material.

Max output token limits create a hard boundary. Haiku caps at 4K output tokens per request. Sonnet allows 64K. If your agent needs to generate an entire codebase file or detailed documentation in one shot, Haiku requires chunking or multiple requests. I hit this limit three times while building a code generation agent—Haiku finished the first chunk, then needed instruction to continue. Sonnet completed everything in a single response.
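When a response is cut off, the Messages API reports `stop_reason == "max_tokens"`. One way to automate the "continue" instruction is a loop that feeds the partial answer back and asks for the rest. This is a sketch: `call_model` is a hypothetical wrapper that takes a message list and returns `(text, stop_reason)`, for example built from `client.messages.create(...)` as `(resp.content[0].text, resp.stop_reason)`.

```python
from typing import Callable

# Hypothetical wrapper: Messages-API-style list in, (text, stop_reason) out
CallModel = Callable[[list[dict]], tuple[str, str]]


def generate_until_done(call_model: CallModel, prompt: str, max_rounds: int = 5) -> str:
    """Keep asking the model to continue while it stops on the token limit."""
    messages = [{"role": "user", "content": prompt}]
    chunks = []
    for _ in range(max_rounds):
        text, stop_reason = call_model(messages)
        chunks.append(text)
        if stop_reason != "max_tokens":
            break  # finished for another reason (end_turn, stop_sequence, ...)
        # Show the model its partial answer, then ask for the remainder
        messages.append({"role": "assistant", "content": text})
        messages.append({"role": "user", "content": "Continue exactly where you left off."})
    return "".join(chunks)


# Usage with a fake model that needs two rounds to finish
replies = iter([("def add(a, b):\n", "max_tokens"), ("    return a + b\n", "end_turn")])
print(generate_until_done(lambda msgs: next(replies), "Write add()"))
```

Naive concatenation can duplicate or drop a few characters at the seam, so for generated code it is worth re-validating the stitched result.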

Context window cost. Neither model charges a premium within the standard 200K window; beyond it, extended-context requests are billed at a higher rate (double the standard input price on Sonnet 4.5). In my tests, Sonnet's more precise reasoning meant fewer repeated context passages, while Haiku sometimes needed me to re-paste context blocks. On a 500K-token context query, those repeats could add up significantly.

Practical Impact: Architecture Decisions

I tested a real multi-agent system: one agent for planning, three sub-agents for execution. The original design used Sonnet for everything. I tried replacing the sub-agents with Haiku 4.5.

Setup: The planning agent receives a user request, breaks it into subtasks, and hands work to execution agents. Each execution agent calls APIs, processes responses, and reports results back.

With three Sonnet agents, daily inference cost was $890. Replacing the three with Haiku reduced it to $180. The planning agent stayed as Sonnet. Response time for the full pipeline improved from 8.2 seconds to 5.1 seconds (Haiku's speed advantage compounds across parallel agents).
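The compounding comes from running sub-agents concurrently: wall time tracks the slowest sub-agent rather than the sum, so a faster executor model shortens every fan-out step. A minimal asyncio sketch; `fake_subagent` is a hypothetical stand-in with simulated latency, and with the real SDK you would await `AsyncAnthropic().messages.create(...)` in its place.

```python
import asyncio
import time


async def fake_subagent(name: str, latency_s: float) -> str:
    """Hypothetical stand-in for one sub-agent's model call."""
    await asyncio.sleep(latency_s)  # simulated network + generation time
    return f"{name}: done"


async def run_subagents(subtasks: list[tuple[str, float]]) -> list[str]:
    # gather() starts every call at once and returns results in input order
    return await asyncio.gather(*(fake_subagent(n, s) for n, s in subtasks))


start = time.perf_counter()
results = asyncio.run(run_subagents([("fetch", 0.3), ("parse", 0.2), ("report", 0.25)]))
elapsed = time.perf_counter() - start
print(results)
print(f"{elapsed:.2f}s total vs {0.3 + 0.2 + 0.25:.2f}s if run sequentially")
```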

Error rate climbed slightly. With Sonnet agents, a full workflow succeeded end-to-end 96.4% of the time. With Haiku sub-agents, it dropped to 91.2%. One sub-agent occasionally misinterpreted API errors and proceeded incorrectly. Adding a retry loop brought the success rate back to 94.8% but added 1.1 seconds to failed tasks.
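A retry loop like this can be as simple as a validate-then-retry wrapper around each sub-agent step. A sketch of that pattern; `step` and `looks_valid` are hypothetical callables you would supply (for instance, a check that the sub-agent didn't treat an API error as success).

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retry(
    step: Callable[[], T],
    looks_valid: Callable[[T], bool],
    max_attempts: int = 2,
) -> T:
    """Run one sub-agent step, retrying when its output fails validation."""
    last = None
    for _ in range(max_attempts):
        last = step()
        if looks_valid(last):
            return last
    raise RuntimeError(f"step failed validation after {max_attempts} attempts: {last!r}")


# Usage: a fake step that misreads an API error on its first attempt
outputs = iter(["proceeding despite HTTP 500", "status=ok rows=42"])
result = run_with_retry(lambda: next(outputs), lambda out: "status=ok" in out)
print(result)  # status=ok rows=42
```

Only failed attempts pay the extra latency, which matches the pattern of retries adding time to failed tasks alone.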

For my use case, the speed and cost gains outweighed the reliability gap. But for a financial transaction system or medical diagnosis assistant, I'd keep Sonnet everywhere.

Another practical test: a customer support chatbot. Haiku 4.5 alone reached 89% first-response accuracy. When I escalated complex queries to Sonnet, it caught issues Haiku had missed 73% of the time. Running Haiku first and escalating to Sonnet reduced manual review by 45% while keeping costs predictable.
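The Haiku-first, escalate-to-Sonnet flow fits in a small router. The escalation rule here (`needs_escalation`) is a hypothetical placeholder; in practice it might be a keyword list, a classifier, or Haiku flagging its own uncertainty. The model functions are fakes so the routing logic can run on its own.

```python
from typing import Callable

Model = Callable[[str], str]  # hypothetical wrapper: query in, answer out


def answer_with_escalation(
    query: str,
    haiku: Model,
    sonnet: Model,
    needs_escalation: Callable[[str, str], bool],
) -> tuple[str, str]:
    """Answer with Haiku first; hand the query to Sonnet only when the rule fires."""
    draft = haiku(query)
    if needs_escalation(query, draft):
        return "sonnet", sonnet(query)
    return "haiku", draft


def needs_escalation(query: str, draft: str) -> bool:
    # Hypothetical rule: escalate refund requests or drafts where Haiku hedges
    return "refund" in query.lower() or "not sure" in draft.lower()


def fake_haiku(query: str) -> str:
    return "You can reset your password from the login page."


def fake_sonnet(query: str) -> str:
    return "Escalated: reviewed the account and order history."


print(answer_with_escalation("How do I reset my password?", fake_haiku, fake_sonnet, needs_escalation))
print(answer_with_escalation("I want a refund", fake_haiku, fake_sonnet, needs_escalation))
```

Returning the model name alongside the answer makes it easy to log escalation rates and tune the rule over time.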

For context-heavy workflows (long documents, multi-turn conversations), the picture shifts. I tested both on a 100-turn conversation whose context grew to 180K tokens. Haiku's responses became less consistent past turn 75: it occasionally lost track of earlier decisions. Sonnet maintained coherence throughout. If your agents need to maintain state over 50+ message exchanges, Sonnet's consistency is worth the extra cost.

Deployment Scenarios

Use Haiku 4.5 when:

- You need sub-second latency (real-time chat, live customer support)

- You're orchestrating many parallel agents and cost scales linearly

- Tasks are straightforward (API calls, data extraction, simple decisions)

- Context windows stay under 200K tokens

- Responses can be short (under 4K output tokens)

- You can tolerate 1–3% lower success rates with retry logic

Use Sonnet 4.5 when:

- Reasoning complexity matters more than latency

- You need high first-try success on complex tasks

- Long-running agents must maintain focus for hours

- Output can be large (full code generation, extensive analysis)

- Context includes nuanced or contradictory information requiring deep reasoning

- Success rates must stay above 95%
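These checklists can be encoded as a rough routing function. The thresholds mirror numbers from this article (the 200K standard context, 50+ message exchanges, a 95% success target) and are illustrative rather than prescriptive; the `Task` fields are hypothetical and would come from your own telemetry.

```python
from dataclasses import dataclass


@dataclass
class Task:
    # Hypothetical task descriptor
    context_tokens: int
    conversation_turns: int
    required_success_rate: float  # e.g. 0.95 means at most 5% failures
    needs_deep_reasoning: bool


def choose_model(task: Task) -> str:
    """Return a model alias using the rules of thumb above."""
    if task.context_tokens > 200_000:
        return "claude-sonnet-4-5"  # beyond the standard window
    if task.conversation_turns > 50:
        return "claude-sonnet-4-5"  # long-running state tracking
    if task.required_success_rate > 0.95 or task.needs_deep_reasoning:
        return "claude-sonnet-4-5"  # high first-try success or complex reasoning
    return "claude-haiku-4-5"  # fast, cheap default for straightforward work


print(choose_model(Task(12_000, 3, 0.90, False)))    # claude-haiku-4-5
print(choose_model(Task(150_000, 80, 0.90, False)))  # claude-sonnet-4-5
```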

Use both together:

Sonnet as the planner, Haiku as the executor. This is where I found the sweet spot. Sonnet breaks down a complex request into explicit subtasks. Haiku executes each one fast and cheap. If Haiku fails, Sonnet re-plans. This architecture achieved 98% end-to-end success rates while cutting costs by 68% compared to Sonnet-only.

Here's a rough code sketch of how I configured it. This uses the Anthropic API:

```python
import anthropic

# The client reads ANTHROPIC_API_KEY from the environment
client = anthropic.Anthropic()


def plan_with_sonnet(request):
    """Ask Sonnet 4.5 to break a request into subtasks, one per line."""
    response = client.messages.create(
        model="claude-sonnet-4-5",
        max_tokens=2000,
        messages=[
            {
                "role": "user",
                "content": f"Break down this request into subtasks, one per line: {request}",
            }
        ],
    )
    # response.content is a list of content blocks; the text is in the first one
    return response.content[0].text


def execute_with_haiku(subtask):
    """Run a single subtask on Haiku 4.5."""
    response = client.messages.create(
        model="claude-haiku-4-5",
        max_tokens=1024,
        messages=[
            {
                "role": "user",
                "content": f"Execute this subtask: {subtask}",
            }
        ],
    )
    return response.content[0].text


request = "Analyze user data and generate a report"
plan = plan_with_sonnet(request)

# Drop blank lines so Haiku is never called with an empty subtask
subtasks = [line.strip() for line in plan.splitlines() if line.strip()]

results = []
for subtask in subtasks:
    results.append(execute_with_haiku(subtask))

print("Completed:", results)
```

The output hit latency targets (5-7 seconds total) while staying under $0.08 per request. Sonnet-only would have cost $0.22 per request at the same quality level.
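The sketch above runs each subtask once and never re-plans. The "if Haiku fails, Sonnet re-plans" step could look like the loop below. `plan`, `execute`, and `succeeded` are hypothetical callables (in practice, thin wrappers around `plan_with_sonnet`, `execute_with_haiku`, and your own success check), which keeps the control flow testable without API calls.

```python
from typing import Callable


def run_with_replan(
    request: str,
    plan: Callable[[str, str], list[str]],  # (request, feedback) -> subtasks
    execute: Callable[[str], str],          # subtask -> result
    succeeded: Callable[[str], bool],       # result -> passed?
    max_replans: int = 2,
) -> list[str]:
    """Planner/executor loop: re-plan with feedback when any subtask fails."""
    feedback = ""
    for _ in range(max_replans + 1):
        results = []
        for subtask in plan(request, feedback):
            result = execute(subtask)
            if not succeeded(result):
                # Tell the planner which step broke so the next plan can route around it
                feedback = f"Previous plan failed at {subtask!r} with {result!r}"
                break
            results.append(result)
        else:
            return results  # every subtask in this plan succeeded
    raise RuntimeError(f"no working plan after {max_replans} re-plans; last feedback: {feedback}")


def fake_plan(request: str, feedback: str) -> list[str]:
    # First plan includes a step the executor can't do; the re-plan drops it
    if feedback:
        return ["load data", "summarize"]
    return ["load data", "call legacy API", "summarize"]


def fake_execute(subtask: str) -> str:
    return "ERROR" if subtask == "call legacy API" else f"ok: {subtask}"


print(run_with_replan("Analyze user data", fake_plan, fake_execute, lambda r: not r.startswith("ERROR")))
```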

Token Consumption Reality

I measured token usage across three real workloads to understand where each model diverged.

Task 1: Summarizing a 30-page research paper into 5 bullet points.

- Sonnet 4.5: 32,000 input tokens (paper + prompt) → 180 output tokens

- Haiku 4.5: 32,000 input tokens → 240 output tokens

- Cost: Sonnet $0.099, Haiku $0.033

Sonnet was more concise. But Haiku's extra output tokens didn't mean worse quality—just more verbose phrasing.

Task 2: Writing a React component with 15 specific requirements.

- Sonnet 4.5: 8,000 input → 2,840 output tokens

- Haiku 4.5: 8,000 input → 3,200 output tokens

- Cost: Sonnet $0.0666, Haiku $0.0240

Haiku generated working code but with more scaffolding comments. Sonnet's output was more compact.

Task 3: Debugging a production error across 40KB of logs.

- Sonnet 4.5: 40,000 input → 1,200 output tokens

- Haiku 4.5: 40,000 input → 2,100 output tokens

- Cost: Sonnet $0.138, Haiku $0.0505

Haiku walked through more of its reasoning. Sonnet jumped to the root cause faster.

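Haiku was cheaper on all three tasks, even with its higher token counts. Both models read the same input, so Sonnet pays its 3× rate on the bulk of the tokens before its shorter answers can help. Sonnet only comes out ahead on total cost when it needs less than a third of the tokens Haiku does, for example when Haiku needs retries or re-pasted context.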
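The per-task costs follow directly from the token counts and list prices. A small helper makes them easy to recompute for your own workloads; the task labels are shorthand for the three tests above.

```python
# USD per million tokens (input, output)
PRICES = {"sonnet-4.5": (3.00, 15.00), "haiku-4.5": (1.00, 5.00)}

# (input tokens, output tokens) measured for each model on each task
TASKS = {
    "summarize paper": {"sonnet-4.5": (32_000, 180), "haiku-4.5": (32_000, 240)},
    "react component": {"sonnet-4.5": (8_000, 2_840), "haiku-4.5": (8_000, 3_200)},
    "debug logs": {"sonnet-4.5": (40_000, 1_200), "haiku-4.5": (40_000, 2_100)},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Total USD cost of one request at list prices."""
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


for task, runs in TASKS.items():
    sonnet = request_cost("sonnet-4.5", *runs["sonnet-4.5"])
    haiku = request_cost("haiku-4.5", *runs["haiku-4.5"])
    print(f"{task}: Sonnet ${sonnet:.4f}, Haiku ${haiku:.4f}, Haiku cheaper: {haiku < sonnet}")
```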

Limitations and Edge Cases

I hit real constraints worth noting.

Haiku struggles with ambiguous instructions more than Sonnet. When I deliberately gave vague prompts, Sonnet asked clarifying questions. Haiku more often assumed and proceeded, leading to wrong answers that required correction. For agentic systems where every decision compounds, this matters.

Long-context reliability: I tested both on a 400K token context (using the extended context beta). Haiku's responses became less consistent. Sonnet maintained coherence. If your system needs to ingest and reason over massive documents regularly, Haiku's default 200K window is a real boundary.

Fine-tuning isn't available for either model through the standard API yet, so you can't adapt either model's weights to your domain. That's important context for teams considering longer-term commitments.

Real-time constraints: Haiku excels for latency-sensitive work. But if you're monitoring system health and need immediate decisions, Sonnet's deeper reasoning sometimes caught issues Haiku missed.

Next Steps and ROI

If you're building a new system today, start with Haiku 4.5 for execution and Sonnet 4.5 for planning. Profile your actual workloads for cost and latency. You'll likely find that mixed approach hits your SLAs while cutting infrastructure costs.
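Profiling doesn't need heavy tooling. A sketch that turns per-request logs into p50/p95 latency and average cost per model; the record format and the sample values are made up for illustration, standing in for whatever you log from each response's timing and token usage.

```python
import statistics
from collections import defaultdict

PRICES = {"haiku-4.5": (1.00, 5.00), "sonnet-4.5": (3.00, 15.00)}  # USD per 1M tokens

# Made-up sample records: (model, latency in seconds, input tokens, output tokens)
records = [
    ("haiku-4.5", 0.62, 3_000, 400),
    ("haiku-4.5", 0.71, 2_800, 350),
    ("haiku-4.5", 0.88, 3_100, 520),
    ("sonnet-4.5", 1.15, 3_000, 300),
    ("sonnet-4.5", 1.32, 2_900, 280),
    ("sonnet-4.5", 1.48, 3_200, 410),
]


def summarize(records):
    """Group records by model and report latency percentiles and mean cost."""
    by_model = defaultdict(list)
    for model, latency, tokens_in, tokens_out in records:
        price_in, price_out = PRICES[model]
        cost = (tokens_in * price_in + tokens_out * price_out) / 1_000_000
        by_model[model].append((latency, cost))
    report = {}
    for model, rows in by_model.items():
        latencies = [lat for lat, _ in rows]
        report[model] = {
            "p50_s": statistics.median(latencies),
            # 'inclusive' keeps the estimate inside the observed range for small samples
            "p95_s": statistics.quantiles(latencies, n=20, method="inclusive")[-1],
            "avg_cost_usd": statistics.mean(cost for _, cost in rows),
        }
    return report


for model, stats in summarize(records).items():
    print(model, {k: round(v, 4) for k, v in stats.items()})
```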

For existing Sonnet-only deployments, audit your use cases. Identify workflows where Haiku would suffice. Gradual migration reduces risk and proves the model before full rollout.
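One common way to migrate gradually is a deterministic percentage rollout: hash a stable ID so each user or workflow consistently lands on the same model while you raise the percentage. A sketch, assuming you have such an ID; the function names and bucketing scheme are illustrative.

```python
import hashlib


def routed_to_haiku(stable_id: str, rollout_percent: int) -> bool:
    """Place stable_id in one of 100 fixed buckets; the lowest rollout_percent go to Haiku."""
    digest = hashlib.sha256(stable_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < rollout_percent


def pick_model(stable_id: str, rollout_percent: int) -> str:
    return "claude-haiku-4-5" if routed_to_haiku(stable_id, rollout_percent) else "claude-sonnet-4-5"


# Raising the percentage only moves IDs from Sonnet to Haiku, never back
ids = [f"workflow-{i}" for i in range(1_000)]
share = sum(routed_to_haiku(i, 10) for i in ids) / len(ids)
print(f"{share:.1%} of workflows on Haiku at a 10% rollout")
```

Because buckets are fixed per ID, comparing success rates between the two groups at each step gives a clean signal before widening the rollout.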

Watch for two upcoming changes. Anthropic hinted at a 1M token context window becoming standard (not beta) in the coming weeks. That expands both models' practical use cases. Second, fine-tuning support for both models is expected before year-end. That could shift the calculus significantly for teams with domain-specific data.

The real takeaway: neither model "wins" in a vacuum. Haiku 4.5 is genuinely capable, and for many workflows, the speed and cost are hard to beat. Sonnet 4.5 excels at sustained reasoning and complex planning. The best choice depends on your specific task, your SLA, and your risk tolerance for occasional errors. Use that granularity instead of picking one for everything.