Microsoft MAI Models Setup Guide: Voice and Text AI

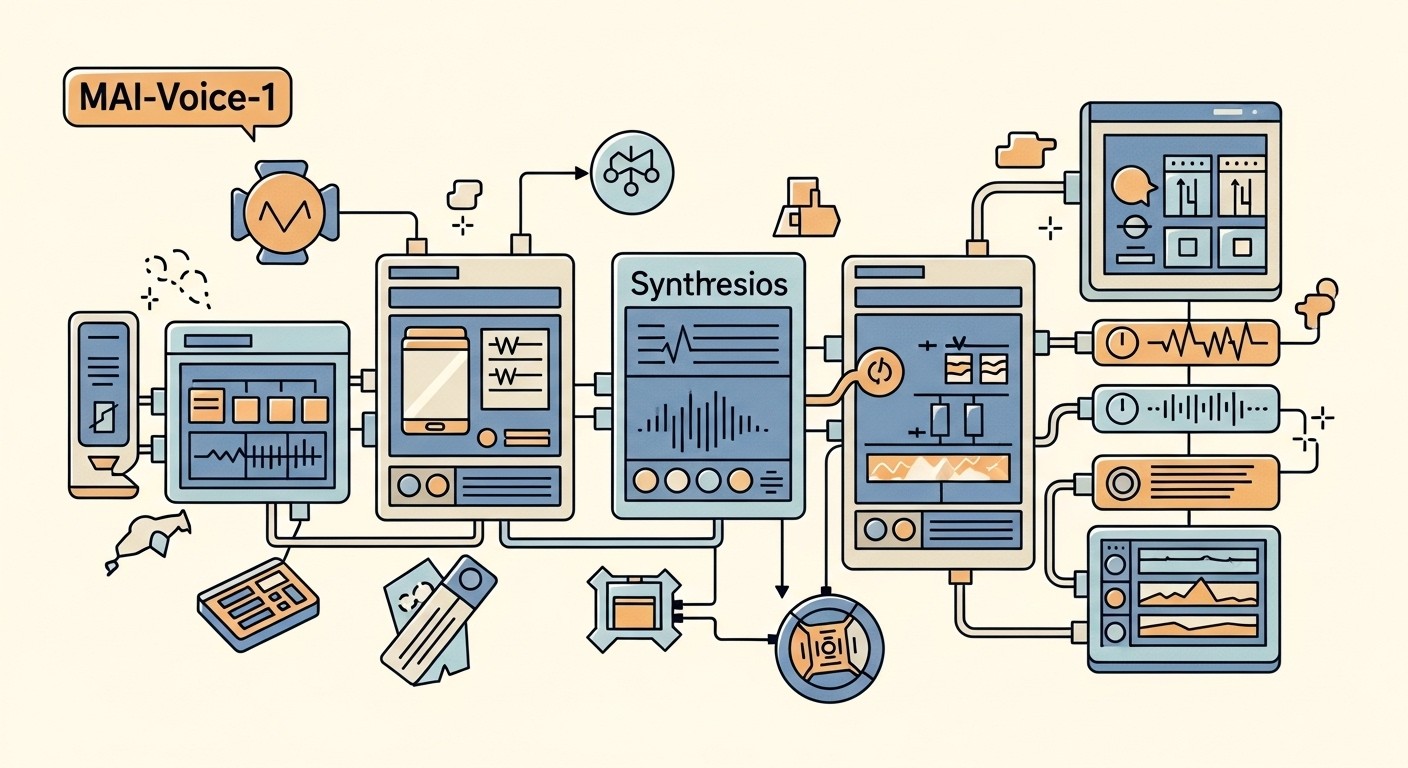

Microsoft has officially launched MAI-Voice-1 and MAI-1-preview, marking the company's first completely in-house AI models without OpenAI involvement. This represents a major shift as Microsoft moves from infrastructure partner to direct model developer, competing head-to-head with industry leaders.

MAI-Voice-1 delivers impressive speech synthesis capabilities, generating one minute of natural-sounding audio in under one second using a single GPU. Meanwhile, MAI-1-preview serves as Microsoft's first proprietary foundation model, currently ranking 15th on LM Arena above GPT-4.1 Flash. Despite being trained on just 15,000 H100 GPUs compared to competitors using over 100,000 GPUs, these models punch well above their weight through efficient training techniques.
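Speed claims like this are usually expressed as a real-time factor (RTF): the time spent generating audio divided by the duration of the audio produced. Values below 1.0 mean audio is produced faster than it plays back. The small helper below (a name chosen here for illustration) applies that formula to Microsoft's stated figure of one minute of audio in under one second.

```python
def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Return the real-time factor: processing time divided by audio duration.

    An RTF below 1.0 means speech is generated faster than real time.
    """
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return generation_seconds / audio_seconds


# Microsoft's stated figure: 60 seconds of audio in under 1 second on one GPU,
# so 1.0 s is an upper bound on generation time.
rtf = real_time_factor(generation_seconds=1.0, audio_seconds=60.0)
print(f"RTF <= {rtf:.4f}, i.e. at least {1 / rtf:.0f}x faster than real time")
```

Because the stated generation time is an upper bound ("under one second"), the resulting RTF of about 0.017 is also an upper bound.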

This comprehensive guide walks you through setting up both models, from initial access to production deployment, complete with practical examples and troubleshooting solutions.

Prerequisites and System Requirements

Before diving into the setup process, ensure your development environment meets the minimum requirements for both models. MAI-Voice-1 requires significantly less computational power than traditional speech synthesis models, making it accessible for various deployment scenarios.

Your system needs Python 3.8 or higher, with at least 8GB of available RAM for basic implementations; 16GB is a comfortable target for production deployments. Because both models run on Microsoft's infrastructure and the code in this guide only makes HTTP requests to them, you don't need a local GPU or enterprise-grade hardware. Make sure pip is up to date; Python's built-in venv module, used below, handles the virtual environment.

Create a dedicated project directory and virtual environment:

```bash
mkdir mai-models-project
cd mai-models-project
python -m venv mai-env
source mai-env/bin/activate  # On Windows: mai-env\Scripts\activate
```

Install the essential dependencies:

```bash
pip install requests python-dotenv numpy scipy soundfile
pip install azure-cognitiveservices-speech  # Azure Speech SDK
pip install streamlit  # For demo applications
pip install psutil fastapi uvicorn aiohttp  # For monitoring and the production service
```

You'll also need valid Microsoft Azure credentials and access to the MAI models through Microsoft's preview programs. The setup process differs slightly depending on whether you're accessing the models through Azure Cognitive Services or the LM Arena platform.
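Before moving on, it can help to confirm that the interpreter meets the version requirement and that the packages installed above can be found. The script below is a minimal sketch; the file name `check_env.py` and the function `check_environment` are illustrative choices, and it only checks that modules are locatable (via `importlib.util.find_spec`) without importing them.

```python
# check_env.py
import importlib.util
import platform
import sys
from typing import Iterable, List, Tuple

# Import names of the packages installed above ("python-dotenv" imports as "dotenv")
REQUIRED_MODULES = ["requests", "dotenv", "numpy", "scipy", "soundfile", "streamlit"]


def check_environment(modules: Iterable[str], min_version: Tuple[int, int] = (3, 8)) -> List[str]:
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if sys.version_info < min_version:
        problems.append(
            f"Python {min_version[0]}.{min_version[1]}+ required, found {platform.python_version()}"
        )
    # find_spec returns None for top-level modules that are not installed
    problems += [f"Missing package: {name}" for name in modules if importlib.util.find_spec(name) is None]
    return problems


if __name__ == "__main__":
    issues = check_environment(REQUIRED_MODULES)
    print("\n".join(issues) if issues else "Environment looks good.")
```

Run it with `python check_env.py` from the activated virtual environment.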

Accessing MAI-Voice-1 Through Copilot Labs

MAI-Voice-1 is currently available through Copilot Labs, offering the most straightforward path for experimentation. Microsoft has integrated the model into Copilot Daily for voice updates and news summaries, providing a testing ground before broader API release.

Navigate to the Copilot Labs interface and locate the MAI-Voice-1 section. The interface allows you to input text prompts and generate audio stories or guided narratives. This testing environment helps you understand the model's capabilities before implementing it in your applications.

For API access, you'll need to apply through Microsoft's preview program. Create a configuration file to store your credentials:

```python
# config.py
import os

from dotenv import load_dotenv

# Load variables from the .env file in the project root into the environment
load_dotenv()

AZURE_SUBSCRIPTION_KEY = os.getenv('AZURE_SUBSCRIPTION_KEY')
AZURE_REGION = os.getenv('AZURE_REGION')
# Strip trailing slashes so f"{endpoint}/path" doesn't produce a double slash
MAI_VOICE_ENDPOINT = os.getenv('MAI_VOICE_ENDPOINT', '').rstrip('/')
MAI_TEXT_ENDPOINT = os.getenv('MAI_TEXT_ENDPOINT', '').rstrip('/')
```

Create a .env file in your project root:

```
AZURE_SUBSCRIPTION_KEY=your_subscription_key_here
AZURE_REGION=eastus
MAI_VOICE_ENDPOINT=https://your-mai-voice-endpoint.cognitiveservices.azure.com/
MAI_TEXT_ENDPOINT=https://your-mai-text-endpoint.cognitiveservices.azure.com/
```

Access is limited during the preview. On LM Arena, models in head-to-head comparisons are assigned at random, so you may not be matched with MAI-1-preview on every attempt. Most users won't get direct API access initially, but you can apply for it through Microsoft's developer portal.

Setting Up MAI-Voice-1 for Speech Synthesis

MAI-Voice-1's transformer-based architecture handles both single-speaker and multi-speaker scenarios with remarkable efficiency. The model was trained on a diverse multilingual speech dataset, enabling it to generate expressive and context-appropriate voice outputs across multiple languages.
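For multi-speaker content such as dialogues or podcasts, a script usually has to be split into per-speaker segments before each one is synthesized. The parser below is a hypothetical helper, not part of any MAI SDK: it assumes a plain "Name: line" script format and maps each name to a speaker ID like the `'speaker_2'` value used in the multi-speaker test later in this section. Which speaker IDs the service actually accepts is an assumption.

```python
import re
from typing import Dict, List, Tuple

# Matches "Name: spoken text"; the text must contain at least one non-space character
LINE_PATTERN = re.compile(r"^\s*([^:]+?)\s*:\s*(\S.*?)\s*$")


def parse_script(script: str, speaker_ids: Dict[str, str]) -> List[Tuple[str, str]]:
    """Split a 'Name: line' script into (speaker_id, text) segments.

    Consecutive lines by the same speaker are merged into one segment.
    Lines without a speaker prefix are skipped; unknown names raise KeyError.
    """
    segments: List[Tuple[str, str]] = []
    for raw in script.splitlines():
        match = LINE_PATTERN.match(raw)
        if not match:
            continue  # blank lines or stage directions without a speaker
        name, text = match.groups()
        speaker = speaker_ids[name]
        if segments and segments[-1][0] == speaker:
            # Same speaker as the previous line: extend that segment
            segments[-1] = (speaker, segments[-1][1] + " " + text)
        else:
            segments.append((speaker, text))
    return segments
```

Each resulting segment could then be passed to `synthesize_speech` with settings such as `{'voice_type': 'multi-speaker', 'speaker_id': speaker}`, one request per turn.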

Create a basic speech synthesis client:

```python
# mai_voice_client.py
import requests

from config import AZURE_SUBSCRIPTION_KEY, MAI_VOICE_ENDPOINT


class MAIVoiceClient:
    def __init__(self):
        self.endpoint = MAI_VOICE_ENDPOINT
        self.subscription_key = AZURE_SUBSCRIPTION_KEY
        self.headers = {
            'Ocp-Apim-Subscription-Key': self.subscription_key,
            'Content-Type': 'application/json'
        }

    def synthesize_speech(self, text, voice_settings=None):
        """Generate speech from text using MAI-Voice-1.

        Returns the raw audio bytes on success, or None on failure.
        """
        default_settings = {
            'voice_type': 'neural',
            'speaking_rate': 1.0,
            'pitch': 0,
            'volume': 50,
            'output_format': 'audio-16khz-32kbitrate-mono-mp3'
        }
        # Caller-supplied settings override the defaults
        if voice_settings:
            default_settings.update(voice_settings)

        payload = {
            'text': text,
            'voice_settings': default_settings,
            'model': 'MAI-Voice-1'
        }

        try:
            response = requests.post(
                f"{self.endpoint}/synthesize",
                headers=self.headers,
                json=payload,
                timeout=30
            )
            if response.status_code == 200:
                return response.content  # raw audio bytes
            print(f"Error: {response.status_code} - {response.text}")
            return None
        except requests.exceptions.RequestException as e:
            print(f"Request failed: {e}")
            return None

    def save_audio(self, audio_data, filename):
        """Save generated audio to a file; returns False if there is nothing to save."""
        if audio_data:
            with open(filename, 'wb') as f:
                f.write(audio_data)
            return True
        return False
```

Test the speech synthesis functionality:

```python
# test_voice_synthesis.py
from mai_voice_client import MAIVoiceClient


def test_basic_synthesis():
    client = MAIVoiceClient()
    test_text = (
        "Welcome to the MAI-Voice-1 demonstration. This model generates "
        "high-quality speech using Microsoft's latest AI technology. "
        "The synthesis process completes in under one second."
    )
    # Generate audio with the default settings
    audio_data = client.synthesize_speech(test_text)
    if audio_data:
        if client.save_audio(audio_data, 'test_output.mp3'):
            print("Audio generated successfully: test_output.mp3")
        else:
            print("Failed to save audio file")
    else:
        print("Speech synthesis failed")


def test_multi_speaker():
    client = MAIVoiceClient()
    # Exercise multi-speaker mode with a specific speaker and adjusted prosody
    voice_settings = {
        'voice_type': 'multi-speaker',
        'speaker_id': 'speaker_2',
        'speaking_rate': 1.2,
        'pitch': 5
    }
    narrator_text = "In a world where AI transforms communication..."
    audio_data = client.synthesize_speech(narrator_text, voice_settings)
    if client.save_audio(audio_data, 'multi_speaker_output.mp3'):
        print("Multi-speaker audio generated: multi_speaker_output.mp3")
    else:
        print("Multi-speaker synthesis failed")


if __name__ == "__main__":
    test_basic_synthesis()
    test_multi_speaker()
```

Because MAI-Voice-1 needs only a single GPU for inference, it is well suited to real-time applications such as interactive assistants, podcast narration, and accessibility features.
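Long inputs such as podcast scripts are commonly split into sentence-sized chunks before synthesis, both to stay under request-size limits and to start playback sooner. The maximum input length MAI-Voice-1 accepts isn't stated in this guide, so `max_chars=1000` below is an assumption; `chunk_text` is a hypothetical helper that breaks at sentence endings and falls back to word boundaries for very long sentences.

```python
import re
from typing import List


def chunk_text(text: str, max_chars: int = 1000) -> List[str]:
    """Split text into chunks of at most max_chars, preferring sentence boundaries.

    A sentence longer than max_chars is split on whitespace instead. A single
    word longer than max_chars is kept whole as its own chunk.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        pieces = [sentence] if len(sentence) <= max_chars else _split_words(sentence, max_chars)
        for piece in pieces:
            candidate = f"{current} {piece}".strip()
            if len(candidate) <= max_chars or not current:
                current = candidate  # piece fits in the current chunk
            else:
                chunks.append(current)  # close the full chunk and start a new one
                current = piece
    if current:
        chunks.append(current)
    return chunks


def _split_words(sentence: str, max_chars: int) -> List[str]:
    """Greedily pack words into pieces of at most max_chars."""
    pieces: List[str] = []
    current = ""
    for word in sentence.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            pieces.append(current)
            current = word
    if current:
        pieces.append(current)
    return pieces
```

Each chunk can then be sent through `synthesize_speech` and saved as a numbered file (for example `part_000.mp3`, `part_001.mp3`); joining the files into one track is left to your audio tooling.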

Configuring MAI-1-Preview for Text Generation

MAI-1-preview represents Microsoft's first end-to-end foundation language model, trained entirely on their infrastructure using approximately 15,000 NVIDIA H100 GPUs. The model uses a mixture-of-experts architecture optimized for instruction-following and conversational tasks.
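Microsoft hasn't detailed MAI-1-preview's routing here, so the following is only a generic toy illustration of how a mixture-of-experts layer commonly works: a router scores every expert, the top-k experts are selected, their scores are renormalized with a softmax, and the layer output is the weighted sum of just those experts' outputs. Scalar "tokens" and lambda "experts" stand in for real vectors and feed-forward networks.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in ranked])
    return list(zip(ranked, weights))


def moe_layer(
    token: float,
    experts: Sequence[Callable[[float], float]],
    router_logits: Sequence[float],
    k: int = 2,
) -> float:
    """Run only the selected experts and combine their outputs by gate weight."""
    return sum(w * experts[i](token) for i, w in top_k_route(router_logits, k))
```

The efficiency argument follows from the routing: with, say, 4 experts and k=2, each token touches only half the expert parameters, so compute per token grows more slowly than total model size.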

Currently, MAI-1-preview is accessible primarily through LM Arena for head-to-head comparisons with other models. For API access, create a client that can handle both Arena testing and future direct API integration:

```python
# mai_text_client.py
import json

import requests

from config import AZURE_SUBSCRIPTION_KEY, MAI_TEXT_ENDPOINT


class MAITextClient:
    def __init__(self):
        self.endpoint = MAI_TEXT_ENDPOINT
        self.subscription_key = AZURE_SUBSCRIPTION_KEY
        self.headers = {
            'Authorization': f'Bearer {self.subscription_key}',
            'Content-Type': 'application/json',
            'User-Agent': 'MAI-Client/1.0'
        }

    def generate_text(self, prompt, generation_config=None):
        """Generate text using the MAI-1-preview model; returns None on failure."""
        default_config = {
            'max_tokens': 1000,
            'temperature': 0.7,
            'top_p': 0.9,
            'frequency_penalty': 0.0,
            'presence_penalty': 0.0,
            'stop_sequences': []
        }
        # Caller-supplied values override the defaults
        if generation_config:
            default_config.update(generation_config)

        payload = {
            'model': 'MAI-1-preview',
            'messages': [{'role': 'user', 'content': prompt}],
            'generation_config': default_config
        }

        try:
            response = requests.post(
                f"{self.endpoint}/chat/completions",
                headers=self.headers,
                json=payload,
                timeout=60
            )
            if response.status_code == 200:
                result = response.json()
                return result['choices'][0]['message']['content']
            print(f"API Error: {response.status_code}")
            print(f"Response: {response.text}")
            return None
        except requests.exceptions.RequestException as e:
            print(f"Request failed: {e}")
            return None

    def streaming_generate(self, prompt, generation_config=None):
        """Yield text chunks from a streaming (server-sent events) response."""
        config = {
            'max_tokens': 1000,
            'temperature': 0.7,
            'stream': True
        }
        if generation_config:
            config.update(generation_config)

        payload = {
            'model': 'MAI-1-preview',
            'messages': [{'role': 'user', 'content': prompt}],
            'generation_config': config
        }

        try:
            # The context manager releases the connection when the stream ends
            with requests.post(
                f"{self.endpoint}/chat/completions",
                headers=self.headers,
                json=payload,
                stream=True,
                timeout=60
            ) as response:
                response.raise_for_status()  # don't try to parse an error body as a stream
                for line in response.iter_lines():
                    if not line:
                        continue  # blank lines separate events
                    line_text = line.decode('utf-8')
                    if not line_text.startswith('data: '):
                        continue
                    json_str = line_text[len('data: '):].strip()
                    if json_str == '[DONE]':
                        return  # end-of-stream sentinel
                    try:
                        data = json.loads(json_str)
                    except json.JSONDecodeError:
                        continue
                    delta = data['choices'][0]['delta']
                    if 'content' in delta:
                        yield delta['content']
        except requests.exceptions.RequestException as e:
            print(f"Streaming request failed: {e}")
```

Test the text generation capabilities:

```python
# test_text_generation.py
from mai_text_client import MAITextClient


def test_basic_generation():
    client = MAITextClient()
    prompt = (
        "Explain the key advantages of Microsoft's MAI-1-preview model "
        "compared to other language models in terms of efficiency and performance."
    )
    response = client.generate_text(prompt)
    if response:
        print("Generated Response:")
        print("-" * 50)
        print(response)
    else:
        print("Text generation failed")


def test_streaming_generation():
    client = MAITextClient()
    prompt = "Write a technical explanation of transformer architecture in AI models."
    print("Streaming Response:")
    print("-" * 50)
    # Print each chunk as soon as it arrives
    for chunk in client.streaming_generate(prompt):
        print(chunk, end='', flush=True)
    print()


def test_instruction_following():
    client = MAITextClient()
    config = {
        'temperature': 0.3,  # lower temperature for more focused responses
        'max_tokens': 500
    }
    prompt = (
        "Create a Python function that demonstrates error handling "
        "best practices. Include docstrings and type hints."
    )
    response = client.generate_text(prompt, config)
    if response:
        print("Code Generation Example:")
        print("-" * 50)
        print(response)


if __name__ == "__main__":
    test_basic_generation()
    print("\n" + "=" * 60 + "\n")
    test_streaming_generation()
    print("\n" + "=" * 60 + "\n")
    test_instruction_following()
```

MAI-1-preview's mixture-of-experts architecture allows it to achieve competitive performance while using fewer training resources than rivals such as xAI's Grok or OpenAI's GPT-5, which were reportedly trained on much larger GPU clusters.
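The line parsing inside `streaming_generate` can be pulled out into a pure function so it can be tested without a network connection. This sketch assumes, as that client already does, that the endpoint emits OpenAI-style `data: {...}` server-sent events terminated by `data: [DONE]`; that wire format is not confirmed for MAI-1-preview. `iter_sse_content` is a hypothetical name.

```python
import json
from typing import Iterable, Iterator


def iter_sse_content(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield content deltas from 'data: {...}' server-sent event lines.

    Stops at the 'data: [DONE]' sentinel; skips blank lines, comment lines,
    non-data fields, and payloads that aren't valid JSON.
    """
    for raw in lines:
        if not raw:
            continue  # blank lines separate events
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # e.g. ': keep-alive' comments or 'event:' fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            return
        try:
            event = json.loads(data)
        except json.JSONDecodeError:
            continue
        choices = event.get("choices") or [{}]
        content = choices[0].get("delta", {}).get("content")
        if content:
            yield content
```

With this in place, the body of `streaming_generate` could shrink to `yield from iter_sse_content(response.iter_lines())` inside the `with` block.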

Building Integrated Applications

Microsoft's MAI models become most useful when voice and text capabilities are combined in a single application. This section builds an application that chains both models: MAI-1-preview writes the reply and MAI-Voice-1 speaks it.

Create a conversational AI assistant that can understand text input and respond with synthesized speech:

```python
# integrated_assistant.py
import os
import tempfile

import streamlit as st

from mai_text_client import MAITextClient
from mai_voice_client import MAIVoiceClient


class MAIAssistant:
    def __init__(self):
        self.voice_client = MAIVoiceClient()
        self.text_client = MAITextClient()

    def process_conversation(self, user_input, voice_enabled=True):
        """Return (text_response, audio_file_path); either may be None on failure."""
        # Generate the text reply with MAI-1-preview
        text_response = self.text_client.generate_text(
            user_input,
            generation_config={'temperature': 0.8, 'max_tokens': 300}
        )
        if not text_response:
            return None, None

        audio_response = None
        if voice_enabled:
            # Convert the reply to speech with MAI-Voice-1
            audio_data = self.voice_client.synthesize_speech(
                text_response,
                voice_settings={'speaking_rate': 1.1, 'pitch': 2, 'voice_type': 'neural'}
            )
            if audio_data:
                # Write to a temporary file; the caller is responsible for deleting it
                with tempfile.NamedTemporaryFile(delete=False, suffix='.mp3') as temp_file:
                    temp_file.write(audio_data)
                audio_response = temp_file.name

        return text_response, audio_response


def create_streamlit_app():
    """Create the interactive Streamlit application."""
    st.title("MAI Models Integration Demo")
    st.write("Powered by Microsoft MAI-Voice-1 and MAI-1-preview")

    # Create the assistant once per browser session
    if 'assistant' not in st.session_state:
        st.session_state.assistant = MAIAssistant()

    user_input = st.text_area(
        "Enter your message:",
        placeholder="Ask me anything about technology, science, or general topics..."
    )

    col1, col2 = st.columns(2)
    with col1:
        generate_text = st.button("Generate Text Response")
    with col2:
        generate_voice = st.button("Generate Text + Voice")

    if generate_text and user_input:
        with st.spinner("Generating response..."):
            text_response, _ = st.session_state.assistant.process_conversation(
                user_input, voice_enabled=False
            )
        if text_response:
            st.subheader("AI Response:")
            st.write(text_response)
        else:
            st.error("Failed to generate response")

    if generate_voice and user_input:
        with st.spinner("Generating text and voice response..."):
            text_response, audio_file = st.session_state.assistant.process_conversation(
                user_input, voice_enabled=True
            )
        if text_response:
            st.subheader("AI Response:")
            st.write(text_response)
            if audio_file:
                st.subheader("Voice Output:")
                # Read the bytes first so deleting the temp file can't break playback
                with open(audio_file, 'rb') as f:
                    st.audio(f.read(), format='audio/mpeg')
                try:
                    os.unlink(audio_file)
                except OSError:
                    pass
            else:
                st.warning("Text generated but voice synthesis failed")
        else:
            st.error("Failed to generate response")


if __name__ == "__main__":
    create_streamlit_app()
```

Launch the integrated application:

```bash
streamlit run integrated_assistant.py
```

This creates a web interface where users can interact with both MAI models through a single application, demonstrating practical integration patterns.
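As written, `generate_text` sends only the latest prompt, so the assistant has no memory of earlier turns. Because the request payload already uses a `messages` list, one option is to keep a rolling history and send it with each request. The class below is a hypothetical sketch: it assumes the endpoint accepts multi-turn `messages` including a `system` role (not confirmed for MAI-1-preview), and it budgets by characters as a rough stand-in for tokens.

```python
from typing import Dict, List

Message = Dict[str, str]


class ConversationHistory:
    """Rolling chat history trimmed to a rough character budget.

    The optional system prompt is always kept; the oldest turns are dropped first,
    but the newest message is never dropped.
    """

    def __init__(self, system_prompt: str = "", max_chars: int = 8000):
        self.system: List[Message] = (
            [{"role": "system", "content": system_prompt}] if system_prompt else []
        )
        self.turns: List[Message] = []
        self.max_chars = max_chars

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})
        # Trim from the front until the history fits the budget
        while len(self.turns) > 1 and self._size() > self.max_chars:
            self.turns.pop(0)

    def _size(self) -> int:
        return sum(len(m["content"]) for m in self.system + self.turns)

    def messages(self) -> List[Message]:
        return self.system + self.turns
```

In `MAIAssistant`, you would call `history.add("user", user_input)`, send `history.messages()` as the payload's `messages` field, then `history.add("assistant", text_response)`; storing the history in `st.session_state` keeps it per browser session.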

For production deployments, consider implementing caching mechanisms to improve response times and reduce API costs:

```python
# cache_manager.py
import hashlib
import json
import os
from datetime import datetime, timedelta


class ResponseCache:
    """File-based cache for JSON-serializable responses such as generated text."""

    def __init__(self, cache_dir="mai_cache", ttl_hours=24):
        self.cache_dir = cache_dir
        self.ttl = timedelta(hours=ttl_hours)
        os.makedirs(cache_dir, exist_ok=True)

    def _get_cache_key(self, text, model_type, settings=None):
        """Derive a stable cache key from the input text, model type, and settings."""
        key_data = f"{text}:{model_type}:{json.dumps(settings, sort_keys=True)}"
        # MD5 serves only as a fast fingerprint here, not for security
        return hashlib.md5(key_data.encode()).hexdigest()

    def get_cached_response(self, text, model_type, settings=None):
        """Return the cached response if it exists and is younger than the TTL, else None."""
        cache_key = self._get_cache_key(text, model_type, settings)
        cache_file = os.path.join(self.cache_dir, f"{cache_key}.json")
        if os.path.exists(cache_file):
            try:
                with open(cache_file, 'r') as f:
                    cache_data = json.load(f)
                cached_time = datetime.fromisoformat(cache_data['timestamp'])
                if datetime.now() - cached_time < self.ttl:
                    return cache_data['response']
            except (OSError, ValueError, KeyError):
                pass  # treat unreadable or corrupt entries as cache misses
        return None

    def cache_response(self, text, model_type, response, settings=None):
        """Store a response in the cache; write failures are ignored."""
        cache_key = self._get_cache_key(text, model_type, settings)
        cache_file = os.path.join(self.cache_dir, f"{cache_key}.json")
        cache_data = {
            'timestamp': datetime.now().isoformat(),
            'response': response,
            'text': text,
            'model_type': model_type,
            'settings': settings
        }
        try:
            with open(cache_file, 'w') as f:
                json.dump(cache_data, f)
        except (OSError, TypeError):
            pass  # disk errors, or values JSON can't encode (e.g. raw audio bytes)
```

Performance Optimization and Troubleshooting

Understanding the performance characteristics of both MAI models helps optimize applications for different use cases. MAI-Voice-1's single-GPU efficiency makes it suitable for real-time applications, while MAI-1-preview's competitive performance despite smaller training infrastructure demonstrates Microsoft's efficient training techniques.
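When comparing response times across models or configurations, averages can hide slow outliers, so latency is usually reported as percentiles (p50, p95, p99). The helpers below are a minimal sketch using the nearest-rank method; they can be fed the `duration` values recorded by the monitoring toolkit in this section, for example `latency_summary([m['duration'] for m in monitor.metrics if m['success']])`.

```python
import math
from typing import Dict, List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("percentile() needs at least one sample")
    ordered = sorted(values)
    # Multiply before dividing so integer inputs avoid float rounding surprises
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_summary(durations: List[float]) -> Dict[str, float]:
    """Summarize call durations (in seconds) as p50, p95, and p99."""
    return {f"p{p}": percentile(durations, p) for p in (50, 95, 99)}
```

Nearest-rank always returns an observed sample rather than interpolating between samples, which keeps the numbers easy to trace back to real calls.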

Common performance bottlenecks include network latency, inefficient batch processing, and suboptimal model configuration. Here's a performance monitoring and optimization toolkit:

```python
# performance_monitor.py
import logging
import time
from functools import wraps
from typing import Any, Dict

import psutil

from mai_text_client import MAITextClient
from mai_voice_client import MAIVoiceClient


class PerformanceMonitor:
    def __init__(self):
        self.metrics = []
        self.logger = logging.getLogger('MAI_Performance')

    def monitor_api_call(self, func):
        """Decorator that records duration, memory change, and outcome of each call."""
        @wraps(func)
        def wrapper(*args, **kwargs):
            process = psutil.Process()
            start_time = time.perf_counter()
            start_memory = process.memory_info().rss / 1024 / 1024  # MB

            try:
                result = func(*args, **kwargs)
                # The MAI clients return None instead of raising, so count None as a failure
                success = result is not None
                error = None if success else 'returned None'
            except Exception as e:
                # Exceptions are recorded and swallowed; the wrapper returns None
                result = None
                success = False
                error = str(e)

            duration = time.perf_counter() - start_time
            end_memory = process.memory_info().rss / 1024 / 1024

            metric = {
                'function': func.__name__,
                'duration': duration,
                'memory_delta': end_memory - start_memory,
                'success': success,
                'error': error,
                'timestamp': time.time()
            }
            self.metrics.append(metric)

            if not success:
                self.logger.error(f"API call failed: {func.__name__} - {error}")
            elif duration > 5.0:  # slow-response threshold in seconds
                self.logger.warning(f"Slow API response: {func.__name__} took {duration:.2f}s")
            return result

        return wrapper

    def get_performance_stats(self) -> Dict[str, Any]:
        """Calculate aggregate statistics over all recorded calls."""
        if not self.metrics:
            return {}

        successful_calls = [m for m in self.metrics if m['success']]
        failed_calls = [m for m in self.metrics if not m['success']]
        durations = [m['duration'] for m in successful_calls]
        memory_deltas = [m['memory_delta'] for m in successful_calls]

        return {
            'total_calls': len(self.metrics),
            'successful_calls': len(successful_calls),
            'failed_calls': len(failed_calls),
            'success_rate': len(successful_calls) / len(self.metrics) * 100,
            'avg_duration': sum(durations) / len(durations) if durations else 0,
            'max_duration': max(durations) if durations else 0,
            'min_duration': min(durations) if durations else 0,
            'avg_memory_delta': sum(memory_deltas) / len(memory_deltas) if memory_deltas else 0
        }


# Clients with performance monitoring attached
class OptimizedMAIVoiceClient(MAIVoiceClient):
    def __init__(self):
        super().__init__()
        self.monitor = PerformanceMonitor()

    @property
    def monitored_synthesize_speech(self):
        return self.monitor.monitor_api_call(self.synthesize_speech)


class OptimizedMAITextClient(MAITextClient):
    def __init__(self):
        super().__init__()
        self.monitor = PerformanceMonitor()

    @property
    def monitored_generate_text(self):
        return self.monitor.monitor_api_call(self.generate_text)
```

Address common troubleshooting scenarios with automated diagnostics:

```python
# diagnostics.py
import requests

from config import AZURE_SUBSCRIPTION_KEY, MAI_TEXT_ENDPOINT, MAI_VOICE_ENDPOINT


class MAIDiagnostics:
    def __init__(self):
        self.voice_endpoint = MAI_VOICE_ENDPOINT
        self.text_endpoint = MAI_TEXT_ENDPOINT
        self.subscription_key = AZURE_SUBSCRIPTION_KEY

    def _check_endpoint(self, url, headers):
        """GET a health URL and summarize the outcome."""
        try:
            response = requests.get(url, timeout=10, headers=headers)
            return {
                'status': 'success' if response.status_code == 200 else 'failed',
                'response_time': response.elapsed.total_seconds(),
                'status_code': response.status_code
            }
        except requests.exceptions.RequestException as e:
            return {'status': 'failed', 'error': str(e)}

    def test_connectivity(self):
        """Test basic connectivity by probing a /health route on each endpoint."""
        return {
            'voice_connectivity': self._check_endpoint(
                f"{self.voice_endpoint}/health",
                {'Ocp-Apim-Subscription-Key': self.subscription_key}
            ),
            'text_connectivity': self._check_endpoint(
                f"{self.text_endpoint}/health",
                {'Authorization': f'Bearer {self.subscription_key}'}
            ),
        }

    def validate_configuration(self):
        """Validate configuration settings loaded from config.py."""
        issues = []

        if not self.subscription_key:
            issues.append("Missing AZURE_SUBSCRIPTION_KEY")
        elif len(self.subscription_key) < 32:
            issues.append("AZURE_SUBSCRIPTION_KEY appears invalid")

        if not self.voice_endpoint:
            issues.append("Missing MAI_VOICE_ENDPOINT")
        elif not self.voice_endpoint.startswith('https://'):
            issues.append("MAI_VOICE_ENDPOINT should use HTTPS")

        if not self.text_endpoint:
            issues.append("Missing MAI_TEXT_ENDPOINT")
        elif not self.text_endpoint.startswith('https://'):
            issues.append("MAI_TEXT_ENDPOINT should use HTTPS")

        return {'valid': len(issues) == 0, 'issues': issues}

    def run_full_diagnostics(self):
        """Run the complete diagnostic suite and print a report."""
        print("Running MAI Models Diagnostics...")
        print("-" * 50)

        # Stop early if the configuration is unusable
        config_results = self.validate_configuration()
        print(f"Configuration: {'✓ Valid' if config_results['valid'] else '✗ Issues found'}")
        if not config_results['valid']:
            for issue in config_results['issues']:
                print(f"  - {issue}")
            return

        for service, result in self.test_connectivity().items():
            service_name = service.replace('_', ' ').title()
            if result['status'] == 'success':
                print(f"{service_name}: ✓ Connected ({result['response_time']:.2f}s)")
            else:
                print(f"{service_name}: ✗ Failed")
                if 'error' in result:
                    print(f"  Error: {result['error']}")
                elif 'status_code' in result:
                    print(f"  Status code: {result['status_code']}")

        print("\nDiagnostics complete!")


if __name__ == "__main__":
    diagnostics = MAIDiagnostics()
    diagnostics.run_full_diagnostics()
```

Run diagnostics to ensure proper setup:

```bash
python diagnostics.py
```

The diagnostic tool helps identify common issues like incorrect endpoints, authentication problems, or network connectivity issues that can affect model performance.
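Transient network failures and throttling are usually handled by retrying with exponential backoff. The clients in this guide return None on failure rather than raising, so this hypothetical helper retries whenever the call returns None, sleeping a random "full jitter" delay that doubles in range with each attempt. Note that it retries every failure, including ones that won't succeed on retry (such as an invalid key), so in practice you may want to check the status code before retrying.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    call: Callable[[], Optional[T]],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[T]:
    """Call `call` until it returns a non-None result or attempts run out.

    Before retry n (0-based), sleep a random delay drawn uniformly from
    [0, min(max_delay, base_delay * 2**n)]. `sleep` is injectable for testing.
    """
    for attempt in range(max_attempts):
        result = call()
        if result is not None:
            return result
        if attempt < max_attempts - 1:
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    return None
```

Usage: `audio = retry_with_backoff(lambda: client.synthesize_speech(text))`. The jitter spreads retries from many clients over time instead of having them all hit the service at the same moment.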

Production Deployment Considerations

Deploying MAI models in production requires planning for scalability, reliability, and cost. Efficient models help on the cost side, but the surrounding architecture (caching, connection pooling, rate limiting) still determines how well a service holds up under load.
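One common way to stay within an API quota is a client-side token bucket: requests spend tokens, and tokens refill at a fixed rate up to a burst capacity. This guide doesn't state rate limits for the MAI preview, so the `rate` and `capacity` values are placeholders, and `TokenBucket` is an illustrative class rather than part of any SDK.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock      # injectable for testing
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available; return False (without blocking) otherwise."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A FastAPI handler could check `bucket.try_acquire()` before calling the model and respond with HTTP 429 when it returns False.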

Design a production-ready service architecture that handles high traffic while maintaining performance:

```python
# production_service.py
from typing import Optional

import aiohttp
import uvicorn
from fastapi import FastAPI, HTTPException
from pydantic import BaseModel

from cache_manager import ResponseCache
from config import AZURE_SUBSCRIPTION_KEY, MAI_TEXT_ENDPOINT
from performance_monitor import PerformanceMonitor

app = FastAPI(title="MAI Models Production API", version="1.0.0")

# Shared components
cache = ResponseCache()
monitor = PerformanceMonitor()


class TextRequest(BaseModel):
    prompt: str
    temperature: Optional[float] = 0.7
    max_tokens: Optional[int] = 1000
    use_cache: Optional[bool] = True


class VoiceRequest(BaseModel):
    text: str
    voice_type: Optional[str] = 'neural'
    speaking_rate: Optional[float] = 1.0
    use_cache: Optional[bool] = True


class ProductionMAIService:
    def __init__(self):
        self.session = None

    async def create_session(self):
        """Create one pooled HTTP session that is reused across requests."""
        if not self.session:
            connector = aiohttp.TCPConnector(limit=100, limit_per_host=30)
            timeout = aiohttp.ClientTimeout(total=60)
            self.session = aiohttp.ClientSession(connector=connector, timeout=timeout)

    async def close_session(self):
        if self.session:
            await self.session.close()
            self.session = None

    async def generate_text_async(self, request: TextRequest) -> str:
        """Async text generation with file-based response caching."""
        cache_settings = {'temp': request.temperature, 'max_tokens': request.max_tokens}

        # Check the cache first
        if request.use_cache:
            cached = cache.get_cached_response(request.prompt, 'text', cache_settings)
            if cached:
                return cached

        await self.create_session()

        payload = {
            'model': 'MAI-1-preview',
            'messages': [{'role': 'user', 'content': request.prompt}],
            'generation_config': {
                'temperature': request.temperature,
                'max_tokens': request.max_tokens
            }
        }
        headers = {
            'Authorization': f'Bearer {AZURE_SUBSCRIPTION_KEY}',
            'Content-Type': 'application/json'
        }

        try:
            async with self.session.post(
                f"{MAI_TEXT_ENDPOINT}/chat/completions", json=payload, headers=headers
            ) as response:
                if response.status != 200:
                    error_text = await response.text()
                    raise HTTPException(status_code=response.status, detail=error_text)
                result = await response.json()
            generated_text = result['choices'][0]['message']['content']
        except HTTPException:
            raise  # keep the upstream status code instead of rewrapping it as a 500
        except Exception as e:
            raise HTTPException(status_code=500, detail=f"Text generation failed: {e}")

        # Cache successful responses
        if request.use_cache:
            cache.cache_response(request.prompt, 'text', generated_text, cache_settings)
        return generated_text


service = ProductionMAIService()


@app.on_event("startup")
async def startup_event():
    await service.create_session()


@app.on_event("shutdown")
async def shutdown_event():
    await service.close_session()


@app.post("/generate-text")
async def generate_text_endpoint(request: TextRequest):
    try:
        result = await service.generate_text_async(request)
        return {"generated_text": result, "status": "success"}
    except HTTPException:
        raise
    except Exception as e:
        raise HTTPException(status_code=500, detail=str(e))


@app.get("/health")
async def health_check():
    return {"status": "healthy", "service": "MAI Models API"}


@app.get("/metrics")
async def get_metrics():
    # Returns {} until calls are recorded into `monitor`; this service doesn't record any yet
    return monitor.get_performance_stats()


if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8000)
```

For containerized deployment, create a Docker configuration. The Dockerfile installs from a requirements.txt, which should list the packages the service imports: fastapi, uvicorn, pydantic, aiohttp, requests, python-dotenv, and psutil.

```dockerfile
# Dockerfile
FROM python:3.11-slim

WORKDIR /app

COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

COPY . .

EXPOSE 8000

CMD ["uvicorn", "production_service:app", "--host", "0.0.0.0", "--port", "8000"]
```

```yaml
# docker-compose.yml
version: '3.8'

services:
  mai-api:
    build: .
    ports:
      - "8000:8000"
    environment:
      - AZURE_SUBSCRIPTION_KEY=${AZURE_SUBSCRIPTION_KEY}
      - MAI_VOICE_ENDPOINT=${MAI_VOICE_ENDPOINT}
      - MAI_TEXT_ENDPOINT=${MAI_TEXT_ENDPOINT}
    volumes:
      - ./mai_cache:/app/mai_cache
    restart: unless-stopped

  nginx:
    image: nginx:alpine
    ports:
      - "80:80"
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf
    depends_on:
      - mai-api
    restart: unless-stopped
```

This setup gives you error handling, response caching, and pooled connections as a starting point for real-world deployments. Before going live, supply the nginx.conf that the compose file mounts, and record calls into the PerformanceMonitor so the /metrics endpoint reports real data; as written, it returns an empty result.

Microsoft's MAI models represent a significant advancement in efficient AI development, demonstrating that competitive performance doesn't require massive resource expenditure. By following this comprehensive setup guide, you can integrate both MAI-Voice-1 and MAI-1-preview into your applications, taking advantage of their unique capabilities for speech synthesis and text generation. The models' efficiency makes them particularly attractive for cost-conscious deployments while maintaining high-quality output standards.

As Microsoft continues to refine these models and expand access, the company is positioning itself as a serious competitor to established players in the AI space. The combination of in-house development, efficient training techniques, and practical deployment considerations makes the MAI models a compelling choice for developers seeking AI-powered productivity tools that balance performance with resource efficiency.