Microsoft's AI Independence Push with MAI Voice Models

Microsoft dropped a bombshell on August 29, 2025, announcing two new AI models: MAI-Voice-1 and MAI-1-preview. These aren't incremental updates or partnership products. They are the first models developed entirely in-house by Microsoft AI (MAI), the division led by Mustafa Suleyman, and they signal a pivot away from exclusive reliance on OpenAI's technology. The launch comes at a critical juncture: tensions between the two companies have escalated, and Microsoft now lists OpenAI as a competitor in its annual reports despite having invested over $13 billion in the company.

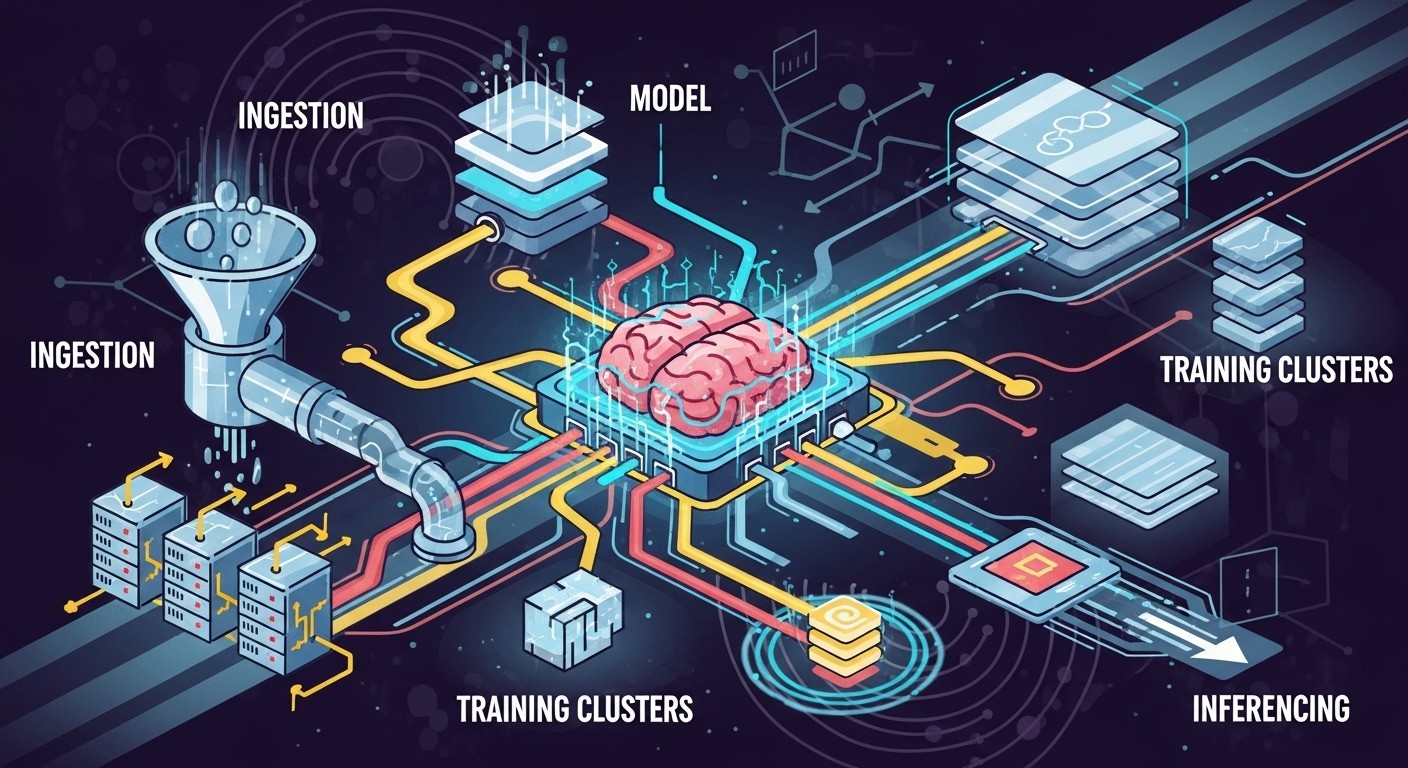

Microsoft says MAI-Voice-1 can produce a full minute of natural-sounding audio in under one second on a single GPU. MAI-1-preview, which Microsoft describes as its first foundation model trained end to end in-house, was trained on approximately 15,000 NVIDIA H100 GPUs and ranked 13th on LMArena's leaderboard at launch. Both models are being integrated into Microsoft's flagship Copilot assistant, a move that could mark the beginning of a significant shift in the AI industry's power dynamics.

The Strategic Context Behind Microsoft's Bold Move

The timing of this announcement reflects months of growing friction between Microsoft and OpenAI. What began as a transformative partnership in 2019, with Microsoft providing exclusive cloud infrastructure through Azure, has evolved into something resembling a strategic chess match. OpenAI's explosive growth to 700 million weekly ChatGPT users and a $500 billion valuation has fundamentally altered the relationship dynamics.

Microsoft's decision to develop proprietary models stems from several critical factors. OpenAI has begun diversifying its infrastructure partnerships, working with CoreWeave, Google Cloud, and Oracle rather than relying solely on Microsoft's Azure platform. This shift threatens Microsoft's position as the exclusive gateway to OpenAI's technology. Additionally, OpenAI's controversial "AGI clause" allows the company to cut off Microsoft's access to its intellectual property once artificial general intelligence is achieved, which poses an existential risk to the partnership at the center of Microsoft's AI strategy.

The financial stakes are enormous. Microsoft's $13.75 billion investment in OpenAI, while substantial, may increasingly be viewed as funding a future competitor rather than securing a long-term technology partner. Recent reports indicate that Microsoft has been actively working to diversify Microsoft 365 Copilot away from exclusive OpenAI dependence, exploring both internal and third-party alternatives to reduce costs and improve performance.

Technical Capabilities and Performance Benchmarks

MAI-Voice-1 represents a significant advance in speech synthesis efficiency. Microsoft says the model can generate a full minute of high-fidelity audio in under one second on a single GPU, which would set a new efficiency standard for the industry. Independent testing by PCMag reported generation times closer to 3-4 seconds per clip; even at that speed, the model would still outpace competing solutions from companies like ElevenLabs or OpenAI's voice models.
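
To put these speed figures in perspective, speech synthesis speed is often expressed as a real-time factor (RTF): synthesis time divided by the duration of audio produced, where values below 1.0 mean faster than real time. The sketch below computes RTF for Microsoft's stated figure (60 seconds of audio in under 1 second, using 1 second as an upper bound) and for PCMag's 3-4 second observation. The article does not give PCMag's clip length, so the 60-second duration used for that case is an assumption, and the function names are illustrative.

```python
def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Synthesis time divided by audio duration; below 1.0 means faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return generation_seconds / audio_seconds


def speedup(generation_seconds: float, audio_seconds: float) -> float:
    """How many seconds of audio are produced per second of compute."""
    return audio_seconds / generation_seconds


# Microsoft's stated figure: 60 s of audio in under 1 s (1.0 s used as an upper bound).
claimed = real_time_factor(1.0, 60.0)

# PCMag-style observation, ASSUMING each clip is 60 s long (clip length not stated).
observed_low = real_time_factor(3.0, 60.0)
observed_high = real_time_factor(4.0, 60.0)

print(f"claimed RTF <= {claimed:.4f} ({speedup(1.0, 60.0):.0f}x real time)")
print(
    f"observed RTF ~ {observed_low:.3f}-{observed_high:.3f} "
    f"({speedup(4.0, 60.0):.0f}-{speedup(3.0, 60.0):.0f}x real time)"
)
```

Under that assumption, even the slower observed figure would still be roughly 15 to 20 times faster than real time.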

The technical architecture leverages a transformer-based design trained on diverse multilingual speech datasets. MAI-Voice-1 handles both single-speaker and multi-speaker scenarios, providing expressive and contextually appropriate voice outputs. Users can experience the model directly through Copilot Labs, where they can select "Emotive" for varied voices and styles or "Story" for audiobook-style narration.

MAI-1-preview uses a mixture-of-experts (MoE) architecture. Instead of running every parameter for every input, an MoE model uses a learned router to send each token to a small subset of specialized sub-networks ("experts"), so only a fraction of the model's parameters are active at any step. This makes computation more efficient than in a dense model of comparable total size. The model was trained on approximately 15,000 NVIDIA H100 GPUs, a relatively modest scale compared to competitors like xAI's Grok, which reportedly used over 100,000 GPUs.
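
Microsoft has not disclosed MAI-1-preview's expert count, routing scheme, or layer design, so the following is only a generic toy illustration of top-k MoE routing, not Microsoft's implementation. It assumes a linear router and uses simple per-dimension scaling vectors as stand-ins for the expert MLPs a real model would use. The mechanism it shows: the router scores every expert for a token, only the top-k experts are evaluated, and their outputs are combined with softmax-normalized gate weights.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class ToyMoELayer:
    """Toy top-k mixture-of-experts layer (illustrative only; NOT MAI-1-preview's design)."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one weight vector per expert, scoring how well a token matches it.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each "expert" is a per-dimension scale vector standing in for an MLP.
        self.experts = [[rng.uniform(0.5, 1.5) for _ in range(dim)] for _ in range(num_experts)]

    def forward(self, token):
        # 1. Score every expert for this token (dot product with router weights).
        logits = [sum(w * x for w, x in zip(weights, token)) for weights in self.router]
        # 2. Keep only the top-k experts; the others are never evaluated.
        chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        # 3. Renormalize gate weights over the chosen experts only.
        gates = softmax([logits[i] for i in chosen])
        # 4. Output is the gate-weighted sum of the selected experts' outputs.
        out = [0.0] * len(token)
        for gate, i in zip(gates, chosen):
            expert_out = [s * x for s, x in zip(self.experts[i], token)]
            out = [o + gate * e for o, e in zip(out, expert_out)]
        return out, chosen


layer = ToyMoELayer(dim=4, num_experts=8, top_k=2)
output, used = layer.forward([0.2, -1.0, 0.5, 0.3])
print("experts used:", used, "of", len(layer.experts))
```

With 8 equally sized experts and `top_k=2`, only a quarter of the expert parameters are touched for each token, which is where MoE's compute savings come from.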

Current performance benchmarks place MAI-1-preview at 13th position on LMArena, trailing models from OpenAI, Google's Gemini, Anthropic's Claude, and xAI's Grok. However, Microsoft AI Chief Mustafa Suleyman emphasized that the model is "punching way above its weight" given its training resources, highlighting efficient data selection and optimization techniques gleaned from the open-source community.

Integration Points and Real-World Applications

Microsoft has wasted no time integrating these models into its existing product ecosystem. MAI-Voice-1 already powers Copilot Daily, which delivers personalized news summaries with AI-generated narration, and Copilot Podcasts, which creates multi-speaker discussions on complex topics. The model's efficiency makes it ideal for real-time applications where latency matters, such as interactive voice assistants and live content generation.

MAI-1-preview is being gradually rolled out for select text-based use cases within Copilot over the coming weeks. The company plans to gather user feedback to refine the model before broader deployment. Developers interested in early access can submit requests through Microsoft's API application process, though access appears quite limited initially.

The integration strategy reflects Microsoft's vision of "orchestrating a range of specialized models serving different user intents and use cases." Rather than relying on a single general-purpose model, Microsoft aims to deploy targeted AI solutions optimized for specific workflows. This approach could provide significant advantages in enterprise environments where reliability and task-specific performance matter more than general capability.
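
Microsoft has not published how Copilot decides which model handles a given request. As a purely illustrative sketch of the "specialized models per user intent" idea, the Python below routes requests using hypothetical keyword rules; a production system would more likely use a learned intent classifier. The registry, keywords, and the `partner-code-model` label are invented for this example, and the MAI model names are used only as plain labels, not API endpoints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    intent: str
    model: str  # label only; not a real API endpoint


# Hypothetical registry: intent -> specialized model label.
ROUTES = {
    "speech": Route("speech", "MAI-Voice-1"),
    "chat": Route("chat", "MAI-1-preview"),
    "code": Route("code", "partner-code-model"),  # hypothetical placeholder
}

# Hypothetical keyword rules standing in for a learned intent classifier.
KEYWORDS = {
    "speech": ("read aloud", "narrate", "podcast"),
    "code": ("python", "function", "stack trace"),
}


def classify_intent(request: str) -> str:
    """Return the first intent whose keywords appear in the request, else 'chat'."""
    text = request.lower()
    for intent, words in KEYWORDS.items():
        if any(word in text for word in words):
            return intent
    return "chat"  # default to the general-purpose text model


def route(request: str) -> Route:
    return ROUTES[classify_intent(request)]


print(route("Narrate today's news as a podcast").model)
print(route("Why does this Python function fail?").model)
print(route("Summarize my meeting notes").model)
```

Keeping the registry separate from the classifier means a model can be swapped (for example, from a partner model to an in-house one) without changing the routing logic.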

Developer Implications and Integration Pathways

For developers building on Microsoft's platform, these new models represent both opportunities and potential disruptions. At launch, MAI-Voice-1 is offered through Microsoft's own products such as Copilot, but if Microsoft makes it more broadly available, its single-GPU efficiency could make local deployment scenarios practical that previously were not. Developers working on voice-enabled applications, content creation tools, or accessibility features could then use this capability without expensive cloud infrastructure.

The API access model for MAI-1-preview suggests Microsoft will maintain strict control over distribution initially. This contrasts with the broader availability of models like OpenAI's GPT-4 or Anthropic's Claude through multiple providers. Developers currently integrated with OpenAI's models through Microsoft's Azure OpenAI Service may need to evaluate whether MAI-1-preview offers sufficient capabilities for their use cases.
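
Because MAI-1-preview access is application-only, teams cannot yet assume a particular client library for it. One hedge is to keep model calls behind a small provider-agnostic interface so the same test cases can be run against the current backend and a candidate once access is granted. The sketch below is a minimal example under that assumption: `TextModel`, `CannedModel`, and the scoring function are hypothetical, not part of any Microsoft or OpenAI SDK, and the stub backends return fixed answers in place of real API clients.

```python
from typing import Callable, Dict, List, Protocol, Tuple


class TextModel(Protocol):
    """Minimal interface a backend must satisfy (hypothetical, not a vendor SDK)."""

    name: str

    def complete(self, prompt: str) -> str: ...


class CannedModel:
    """Stand-in backend returning fixed answers; replace with a real client in practice."""

    def __init__(self, name: str, answers: Dict[str, str]):
        self.name = name
        self.answers = answers

    def complete(self, prompt: str) -> str:
        return self.answers.get(prompt, "")


def contains_expected(output: str, expected: str) -> float:
    """Crude scorer: 1.0 if the expected string appears in the output, else 0.0."""
    return 1.0 if expected.lower() in output.lower() else 0.0


def evaluate(
    models: List[TextModel],
    cases: List[Tuple[str, str]],
    score: Callable[[str, str], float],
) -> Dict[str, float]:
    """Run every test case against every model and return the mean score per model."""
    results: Dict[str, float] = {}
    for model in models:
        total = sum(score(model.complete(prompt), expected) for prompt, expected in cases)
        results[model.name] = total / len(cases)
    return results


cases = [("Capital of France?", "Paris"), ("2 + 2 = ?", "4")]
incumbent = CannedModel("incumbent", {"Capital of France?": "Paris.", "2 + 2 = ?": "4"})
candidate = CannedModel("candidate", {"Capital of France?": "It is Paris.", "2 + 2 = ?": "five"})

print(evaluate([incumbent, candidate], cases, contains_expected))
```

In a real evaluation, the substring scorer would be replaced with task-specific checks or human review, and the test cases would come from the application's own workload.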

Microsoft's emphasis on consumer-focused training data could make these models particularly effective for productivity and automation applications. The models are trained with consumer behavior patterns in mind, potentially offering more intuitive interactions for everyday business tasks compared to research-oriented alternatives.

The gradual rollout strategy provides developers time to assess performance and plan migration strategies. However, the limited initial access may create competitive disadvantages for teams unable to secure early API access, particularly for startups competing against larger organizations with established Microsoft relationships.

Enterprise Business Impact and Strategic Considerations

For enterprise customers, Microsoft's AI independence push addresses several critical concerns. The primary benefit lies in reduced vendor lock-in risks and improved cost predictability. Organizations heavily invested in the Microsoft ecosystem can now access advanced AI capabilities without depending on external partnerships that might change terms or availability.

Microsoft's consumer-focused training approach could provide more personalized and workflow-appropriate AI interactions for business users. The company's reach across Microsoft 365, LinkedIn, and GitHub could also give it insight into real-world workflows that competitors cannot easily replicate, although its use of customer data for model training is constrained by its own privacy commitments and by regulation.

Cost implications remain unclear but potentially significant. If MAI models prove more efficient than licensed alternatives, Microsoft could pass savings to customers or improve margins on AI-enhanced products. The company's massive capital expenditures on AI infrastructure—projected to exceed $80 billion in fiscal 2025—suggest a long-term commitment to controlling costs through vertical integration.

Enterprise adoption strategies will likely focus on hybrid approaches initially. Microsoft has emphasized that it will continue using "the very best models from our team, our partners, and the latest innovations from the open-source community." This suggests enterprises won't face immediate pressure to migrate entirely to MAI models but will benefit from optimized model selection based on specific use cases.

Competitive Landscape and Market Dynamics

Microsoft's entry into proprietary AI model development intensifies competition across multiple dimensions. The company now competes directly with OpenAI, Google, Anthropic, and other model providers rather than serving primarily as a distribution partner. This shift could accelerate innovation as companies seek to differentiate through specialized capabilities rather than general-purpose performance.

The efficiency focus of MAI models addresses a critical market need. Many organizations require AI capabilities but cannot justify the computational costs of frontier models for routine tasks. MAI-Voice-1's single-GPU performance and MAI-1-preview's training efficiency suggest Microsoft is targeting practical deployment scenarios rather than benchmark dominance.

Strategic hiring has played a crucial role in Microsoft's model development capabilities. The company recruited Mustafa Suleyman from Inflection AI along with several colleagues and added approximately two dozen researchers from Google's DeepMind. This "acqui-hiring" approach enabled Microsoft to compress years of research into accelerated development timelines.

The partnership-to-competition evolution reflects broader industry trends. Strategic partnerships in AI often serve as stepping stones to direct rivalry, as companies use initial collaborations to build internal capabilities. Similar dynamics are playing out between other major technology companies and AI startups.

Short-Term Market Reactions and Immediate Implications

Initial market responses to Microsoft's MAI models launch have been cautiously positive, with analysts viewing the move as a logical step toward AI independence. The company's stock has seen modest gains as investors appreciate reduced reliance on external partners, though questions remain about the financial impact of massive AI infrastructure investments.

Developer community reactions have been mixed. Some appreciate the potential for more efficient and cost-effective AI tools, while others express concerns about ecosystem fragmentation. The limited availability of MAI-1-preview through application-only API access has created frustration among developers eager to evaluate the technology.

OpenAI's response has been notably restrained, with the company focusing on its own product announcements rather than directly addressing Microsoft's competitive positioning. However, industry observers note that OpenAI's recent technology presentations have omitted mentions of Microsoft infrastructure, suggesting growing independence from their primary partner and investor.

Enterprise customers are taking a wait-and-see approach, with most organizations planning to evaluate MAI models alongside existing solutions rather than making immediate migration decisions. The gradual rollout strategy provides time for thorough testing and performance comparison.

Technical Challenges and Performance Limitations

Despite the impressive specifications, MAI models face several technical challenges that could impact adoption. MAI-1-preview's 13th-place ranking on LMArena indicates significant performance gaps compared to frontier models from OpenAI, Google, and Anthropic. While the efficiency gains are notable, many use cases require maximum capability rather than cost optimization.

The consumer-focused training approach may limit effectiveness for specialized enterprise applications. Models trained primarily on consumer interaction patterns might struggle with technical documentation, scientific research, or industry-specific workflows that require domain expertise.

Integration complexity represents another challenge. Organizations already invested in OpenAI or other AI platforms face migration costs and potential workflow disruptions. The limited API availability compounds this challenge by preventing comprehensive evaluation during decision-making processes.

Quality consistency remains unproven at scale. While initial demonstrations show promising results, large-scale deployment often reveals edge cases and failure modes not apparent in controlled testing environments. Microsoft's gradual rollout strategy acknowledges these risks but may delay broader adoption.

Long-Term Strategic Implications and Industry Transformation

Microsoft's AI independence push signals a fundamental shift toward vertical integration in AI development. Success with MAI models could inspire other technology giants to pursue similar strategies, potentially fragmenting the AI market into proprietary ecosystems rather than shared platforms.

The financial implications extend beyond immediate cost savings. If Microsoft successfully reduces dependence on external AI providers, it could achieve higher margins on AI-enhanced products while gaining greater control over feature development and deployment timelines. This control becomes increasingly valuable as AI capabilities become central to competitive positioning.

Innovation trajectories may also shift as companies focus on specialized rather than general-purpose models. Microsoft's emphasis on consumer-focused training and specific use case optimization suggests a move away from the "one model fits all" approach that has dominated recent AI development.

The geopolitical dimensions of AI development add another layer of complexity. As nations increasingly view AI capabilities as strategic assets, companies with domestic AI development capabilities may gain advantages in government contracts and regulated industries.

Regulatory and Privacy Considerations

Microsoft's AI independence strategy intersects with growing regulatory scrutiny of AI partnerships and data usage. The company's control over training data through its enterprise products creates both opportunities and responsibilities for privacy protection and regulatory compliance.

The European Union's AI Act and similar regulations worldwide may favor companies with transparent AI development processes over those relying on external providers with opaque training methods. Microsoft's in-house development could provide advantages in regulatory compliance and audit requirements.

Data sovereignty concerns also play a role, particularly for government and enterprise customers requiring guarantees about data processing and storage locations. Microsoft's Azure infrastructure and domestic AI development capabilities position it well for customers with stringent data residency requirements.

Unanswered Questions and Future Research Directions

Several critical questions remain about Microsoft's AI strategy and its broader implications for the industry. The financial sustainability of massive AI infrastructure investments remains unclear, particularly given the company's historically high software margins. Investors will closely watch whether AI independence translates to improved profitability or continued margin pressure.

The scalability of Microsoft's approach raises questions about whether smaller technology companies can pursue similar strategies. If AI independence requires investments measured in tens of billions of dollars, it could create insurmountable barriers for emerging competitors and further concentrate AI capabilities among technology giants.

Integration challenges between in-house models and existing enterprise workflows need resolution. Organizations require clear migration paths and compatibility guarantees to justify the risks of switching AI providers, particularly for business-critical applications.

The competitive response from OpenAI and other AI providers will significantly influence market dynamics. Whether these companies pursue similar vertical integration strategies or focus on maintaining technological leadership through continued innovation will shape the industry's evolution.

Microsoft's MAI models launch represents more than a product announcement—it signals a strategic inflection point that could reshape the AI industry's structure and competitive dynamics. The success or failure of this independence push will influence how technology companies approach AI development partnerships and could determine whether the industry evolves toward consolidated ecosystems or continued collaboration and interoperability.

For developers, businesses, and users, the immediate impact may be modest, but the long-term implications are profound. The next few quarters will reveal whether Microsoft's bet on AI independence pays off through improved performance, reduced costs, and greater strategic control, or whether the company's massive investments prove insufficient to match the capabilities of specialized AI providers. Either way, the AI industry will never be quite the same.