Voice Coding vs Keyboard: 2025's Developer Battle

The software development world stands at an inflection point. Developers have typed code for decades, but a revolutionary shift toward voice-activated programming is gaining momentum in 2025. This transformation extends beyond simple dictation to what OpenAI co-founder Andrej Karpathy coined "vibe coding": developers speak their intentions and AI translates them into functional code.

Commonly cited figures put human speech at 150+ words per minute compared to typing speeds of 40-80 WPM, suggesting a potential productivity advantage for voice-based development. Major tech companies are investing heavily in this space. Microsoft's GitHub Copilot has introduced conversational features, and Google's Gemini Live enables natural language programming interactions. Yet traditional keyboard programming remains deeply entrenched, supported by decades of tooling and muscle memory.

Performance Analysis: Speed and Accuracy Metrics

Voice coding demonstrates measurable performance advantages in specific scenarios. The human speech rate of 150-175 words per minute significantly exceeds average typing speeds of 40-80 WPM, and even experienced developers rarely exceed 100 WPM. This roughly 2-3x gap in raw input rate can translate into faster code generation when using AI-assisted voice tools.
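
As a quick check on the 2-3x figure, the sketch below divides the quoted speaking rates by the quoted typing rates. It uses only the numbers from this paragraph. The helper names are hypothetical. The full range runs from about 1.9x (slow speaker, fast typist) to about 4.4x (fast speaker, slow typist), so 2-3x is best read as a middle estimate rather than a bound.

```python
# Rates quoted in this article, in words per minute (not new measurements).
SPEECH_WPM = (150, 175)   # (low, high) speaking rate
TYPING_WPM = (40, 80)     # (low, high) typing rate


def speedup_range(speech_wpm=SPEECH_WPM, typing_wpm=TYPING_WPM):
    """Return the (smallest, largest) ratio of speaking rate to typing rate."""
    smallest = speech_wpm[0] / typing_wpm[1]  # slow speaker vs fast typist
    largest = speech_wpm[1] / typing_wpm[0]   # fast speaker vs slow typist
    return smallest, largest


def seconds_to_enter(words, wpm):
    """Seconds needed to enter `words` words at `wpm` words per minute."""
    return words / wpm * 60


low, high = speedup_range()
print(f"raw input speedup: {low:.1f}x to {high:.1f}x")
print(f"12-word prompt at 150 WPM: {seconds_to_enter(12, 150):.1f} s")
```

The same helper shows that a 12-word spoken prompt, such as the React form request quoted below, takes under 5 seconds to say at 150 WPM. Any remaining time goes to transcription and generation.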

Accuracy measurements reveal nuanced results. Modern speech recognition systems like OpenAI's Whisper achieve 95%+ accuracy rates under optimal conditions, but performance degrades with background noise, accents, or technical terminology. Traditional typing maintains consistent accuracy rates of 92-96% for experienced developers, with immediate visual feedback enabling instant error correction.
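
Speech recognition accuracy is commonly reported as one minus the word error rate (WER). WER is the word-level edit distance between what was said and what was transcribed, divided by the number of words spoken. The sketch below assumes that definition, because the article does not say how its 95% figure was measured. It also shows why technical terminology hurts: one library name misheard as two words costs two errors in a four-word utterance.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dp[i][j] = edits needed to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution or match
            )
    return dp[len(ref)][len(hyp)] / len(ref)


# "numpy" misheard as "num pie": one substitution plus one insertion.
wer = word_error_rate("import numpy as np", "import num pie as np")
print(f"WER {wer:.2f}, accuracy {1 - wer:.0%}")
```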

Latency introduces another performance dimension. Voice coding systems require multiple processing steps: speech-to-text conversion (100-300ms), AI interpretation (200-500ms), and code generation (300-1000ms). This creates a total latency of 600-1800ms compared to immediate keyboard input. However, tools like Super Whisper reduce speech-to-text latency to under 100ms, making the experience more responsive.
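
Because the three stages run one after another, their latencies add. The sketch below sums the quoted ranges. As a hypothetical, it then models the speech-to-text stage as 0-100 ms to reflect the faster tools mentioned. The worst case only drops from 1800 ms to 1600 ms, which suggests that code generation, not transcription, dominates the wait.

```python
# Per-stage latency ranges in milliseconds, as quoted in the text.
STAGES_MS = {
    "speech_to_text": (100, 300),
    "ai_interpretation": (200, 500),
    "code_generation": (300, 1000),
}


def total_latency(stages):
    """Sequential stages add, so sum best and worst cases separately."""
    best = sum(low for low, _ in stages.values())
    worst = sum(high for _, high in stages.values())
    return best, worst


print(total_latency(STAGES_MS))  # (600, 1800)

# Hypothetical: model a faster transcriber as taking 0-100 ms.
faster = dict(STAGES_MS, speech_to_text=(0, 100))
print(total_latency(faster))     # (500, 1600)
```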

Real-world testing shows voice coding excels for boilerplate generation, documentation writing, and high-level architectural discussions. A developer using Cursor AI with Wispr Flow can request a complete React component by saying "Create a user authentication form with email, password validation, and submit handling" and get a draft in about 8 seconds, compared to 3-5 minutes of typing equivalent code.

Keyboard programming maintains advantages for precise editing, complex debugging, and situations requiring character-level accuracy. Refactoring existing code, adjusting indentation, or making surgical changes to function parameters remains faster with traditional input methods.

Cost Structure and Investment Requirements

Voice coding implementations vary dramatically in cost structure. Entry-level solutions using built-in operating system speech recognition cost nothing but provide limited functionality. The VSCode Speech Extension integrates directly with GitHub Copilot at no additional cost beyond existing subscriptions.

Professional voice coding setups require multiple tool subscriptions. Cursor AI costs $20 monthly for Pro features, while Wispr Flow adds $10 monthly for enhanced speech recognition. GitHub Copilot Business subscriptions run $19 per user monthly. Super Whisper, a popular macOS solution, costs $29 as a one-time purchase.

Hardware requirements introduce additional costs. Quality microphones become essential. The Blue Yeti Nano ($100) provides adequate performance, while professional options like the Shure SM7B ($400) deliver superior noise rejection. Noise-canceling headphones reduce background interference, and models like the Sony WH-1000XM5 ($400) become valuable investments for open office environments.

Traditional keyboard programming requires minimal ongoing costs. Mechanical keyboards for professional use range from roughly $80 to $300, and quality options like the Keychron K2 ($80) or Das Keyboard 4 Professional ($170) last for years. IDEs like VSCode remain free, while premium options like JetBrains IDEs cost $149 annually per license.
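
The sketch below compares the two cost structures by totaling first-year spending for two hypothetical configurations built from the prices quoted above. Several choices are illustrative assumptions: it bundles Cursor, Wispr Flow, and Copilot Business into one setup, and it pairs the Blue Yeti Nano with the Sony headphones. The voice setup still needs a keyboard, which this comparison leaves out as a shared cost.

```python
from dataclasses import dataclass, field


@dataclass
class Setup:
    name: str
    monthly: dict[str, int] = field(default_factory=dict)   # USD per month
    annual: dict[str, int] = field(default_factory=dict)    # USD per year
    one_time: dict[str, int] = field(default_factory=dict)  # USD, purchased once

    def first_year_cost(self) -> int:
        """Twelve months of subscriptions, plus annual licenses and purchases."""
        return (12 * sum(self.monthly.values())
                + sum(self.annual.values())
                + sum(self.one_time.values()))


# Hypothetical configurations assembled from prices quoted in this article.
voice = Setup(
    "voice (Cursor + Wispr Flow + Copilot Business)",
    monthly={"Cursor Pro": 20, "Wispr Flow": 10, "Copilot Business": 19},
    one_time={"Blue Yeti Nano": 100, "Sony WH-1000XM5": 400},
)
keyboard = Setup(
    "keyboard (Das Keyboard + JetBrains IDE)",
    annual={"JetBrains IDE": 149},
    one_time={"Das Keyboard 4 Professional": 170},
)

for setup in (voice, keyboard):
    print(f"{setup.name}: ${setup.first_year_cost():,} in year one")
```

Under these assumptions the voice setup costs $1,088 in its first year, $588 of it recurring. The keyboard setup costs $319, of which $149 recurs.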

Enterprise deployments reveal different cost patterns. Voice coding reduces training time for new developers who can describe functionality in natural language rather than learning complex syntax. However, infrastructure costs increase due to AI processing requirements and potential privacy concerns requiring on-premises solutions.

Scalability and Enterprise Adoption Patterns

Scalability challenges emerge differently for each approach. Voice coding systems strain under concurrent usage, with cloud-based AI services experiencing degraded performance during peak hours. GitHub Copilot occasionally exhibits response delays during high-traffic periods. Local solutions like Super Whisper, which can run Whisper models on-device, maintain consistent performance but require significant computational resources.

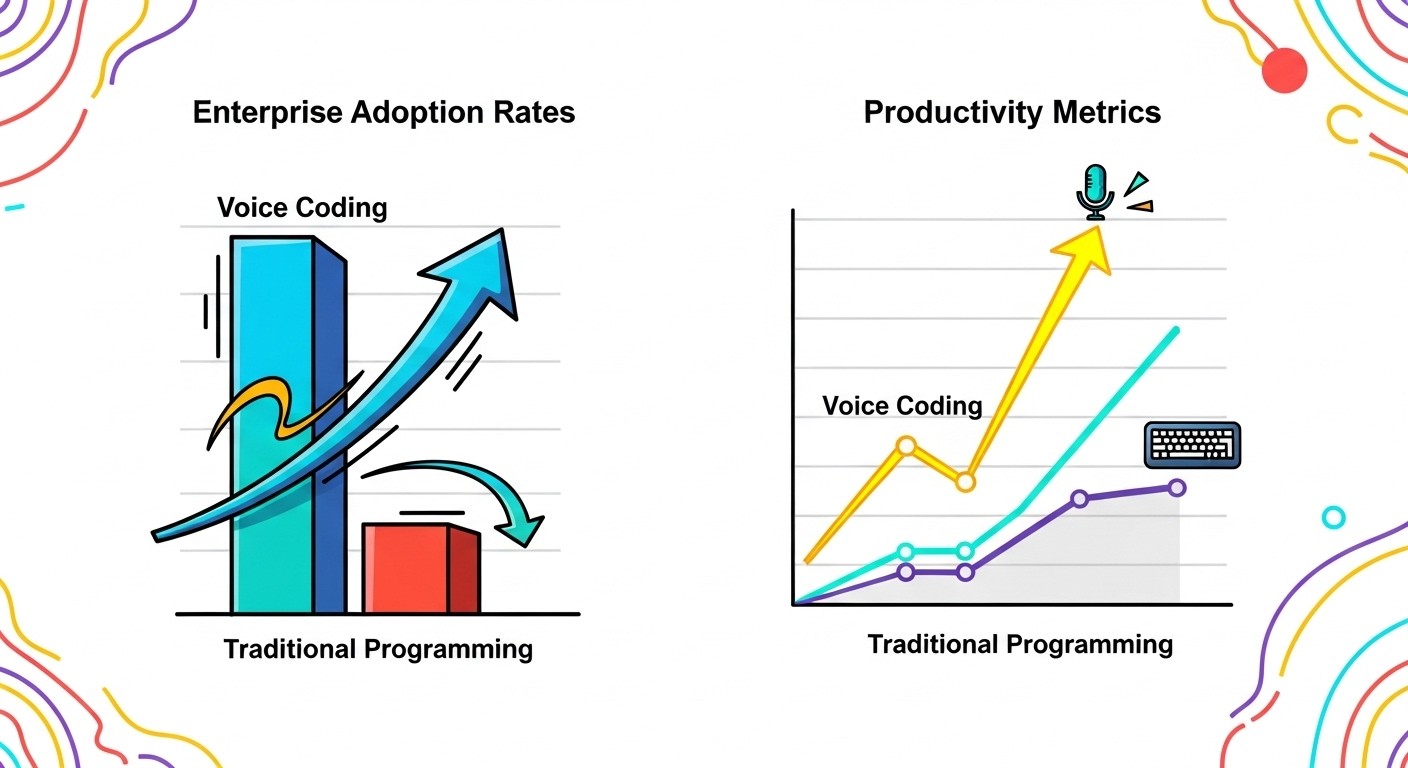

Enterprise adoption reveals interesting patterns. Financial services companies report reluctance to adopt voice coding due to privacy regulations and open office acoustics. Conversely, remote-first startups embrace voice tools for their productivity benefits and natural integration with video calls.

Microsoft's integration of voice features into Visual Studio 2022 17.12 Preview 3 signals enterprise readiness. The guided chat experience reduces learning curves by asking clarifying questions rather than requiring perfect prompts. This approach addresses scalability by making voice coding accessible to developers with varying prompt engineering skills.

Traditional keyboard programming scales linearly with developer count. Adding team members requires minimal infrastructure investment beyond standard development workstations. Version control systems, CI/CD pipelines, and collaborative tools operate identically regardless of input method.

Network requirements differ substantially. Voice coding systems require stable internet connections for cloud-based AI processing, creating dependency risks. Traditional programming operates entirely offline once development environments are configured, providing greater reliability in unstable network conditions.
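
One way to limit this dependency risk is to keep a local transcriber as a fallback for when the cloud service fails or misses a latency deadline. The sketch below assumes two caller-supplied functions, `cloud` and `local`. Both are hypothetical stand-ins for whatever transcription services a team actually uses, and the 1-second timeout is an arbitrary example value.

```python
import concurrent.futures as cf
from typing import Callable


def transcribe_with_fallback(audio: bytes,
                             cloud: Callable[[bytes], str],
                             local: Callable[[bytes], str],
                             timeout_s: float = 1.0) -> tuple[str, str]:
    """Try the cloud transcriber first; use the local one on error or timeout.

    Returns (backend_used, text)."""
    pool = cf.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(cloud, audio)
    try:
        return "cloud", future.result(timeout=timeout_s)
    except Exception:  # network error, service error, or timeout
        return "local", local(audio)
    finally:
        # Return immediately; a hung cloud call finishes in the background.
        pool.shutdown(wait=False)


if __name__ == "__main__":
    def offline_cloud(audio: bytes) -> str:
        raise ConnectionError("no network")  # simulate an outage

    def on_device(audio: bytes) -> str:
        return "def main(): pass"  # stand-in for a local model's output

    print(transcribe_with_fallback(b"...", offline_cloud, on_device))
```

The function returns which backend answered, so a team can log how often it falls back. `shutdown(wait=False)` keeps a stalled cloud request from blocking the editor, although that worker thread keeps running until the request returns.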

Usability and Learning Curve Analysis

Learning curves vary significantly between approaches. Voice coding demands mastery of "prompt engineering" - crafting precise natural language instructions that AI systems interpret correctly. This skill differs fundamentally from traditional programming knowledge, requiring developers to think in terms of high-level descriptions rather than specific implementations.
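
To make "precise instructions" concrete, the sketch below expands a short spoken request like the React form example quoted earlier. The expanded prompt names the target language, the framework, and explicit acceptance criteria. The `build_prompt` helper and its layout are hypothetical conventions for illustration, not a format that Cursor, Copilot, or any other tool requires. The specific requirements listed are likewise invented examples.

```python
def build_prompt(task: str, *, language: str, framework: str,
                 requirements: list[str]) -> str:
    """Turn a short spoken request into an explicit, structured prompt.

    The layout (target, requirement bullets, output instruction) is one
    hypothetical convention; adapt it to the tool in use."""
    lines = [f"Task: {task}", f"Target: {language} using {framework}",
             "Requirements:"]
    lines += [f"- {req}" for req in requirements]
    lines.append("Return only the code, with no explanation.")
    return "\n".join(lines)


prompt = build_prompt(
    "Create a user authentication form",
    language="TypeScript",
    framework="React",
    requirements=[
        "email field validated as an email address",
        "password of at least 8 characters containing a digit",
        "disable the submit button while the request is pending",
    ],
)
print(prompt)
```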

New developers often find voice coding more accessible initially. Describing desired functionality in English feels natural compared to memorizing syntax rules and programming constructs. However, this accessibility can create false confidence, as developers may generate code they don't fully understand.

Experienced programmers face different challenges. Decades of muscle memory and keyboard shortcuts become irrelevant in voice-first environments. The transition requires deliberate practice and mindset shifts from precise character-level control to descriptive, intent-based communication.

Error handling represents a crucial usability difference. Traditional programming provides immediate visual feedback through syntax highlighting, compiler errors, and IDE warnings. Voice coding systems often generate syntactically correct but logically flawed code, requiring careful review and testing to identify issues.
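
The sketch below illustrates that failure mode with a hypothetical AI draft for the rule "passwords must be at least 8 characters and contain a digit." The draft parses and runs, but its `>` comparison silently rejects passwords of exactly 8 characters. A few boundary-value checks expose a bug that neither syntax highlighting nor the interpreter can catch.

```python
def is_valid_password_generated(password: str) -> bool:
    # Hypothetical AI draft: valid syntax, but `>` rejects exactly 8 chars.
    return len(password) > 8 and any(c.isdigit() for c in password)


def is_valid_password_fixed(password: str) -> bool:
    # Reviewed version: `>=` matches "at least 8 characters".
    return len(password) >= 8 and any(c.isdigit() for c in password)


def check(validator) -> list[str]:
    """Return a description of each boundary case the validator gets wrong."""
    cases = {
        "abcdefg1": True,   # exactly 8 characters, includes a digit
        "abcdef1": False,   # only 7 characters
        "abcdefgh": False,  # 8 characters, no digit
    }
    return [f"{pw!r}: expected {want}" for pw, want in cases.items()
            if validator(pw) != want]


print(check(is_valid_password_generated))  # ["'abcdefg1': expected True"]
print(check(is_valid_password_fixed))      # []
```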

Cognitive load patterns shift between methods. Keyboard programming requires sustained focus on syntax, indentation, and character-level accuracy. Voice coding reduces syntactic burden but increases cognitive overhead for prompt formulation and result validation.

Physical considerations significantly affect usability. Voice coding can reduce the repetitive strain injuries common among developers but introduces vocal fatigue during extended sessions. Traditional programming causes wrist and finger strain but allows silent operation in shared workspaces.

Ecosystem and Integration Support

Tool ecosystem maturity heavily favors traditional programming. Every major IDE, text editor, and development tool assumes keyboard input as the primary interaction method. Decades of refinement have produced sophisticated features like intelligent code completion, refactoring tools, and debugging interfaces optimized for typed interaction.

Voice coding ecosystems remain fragmented but growing rapidly. Cursor AI leads integration efforts by combining voice input with intelligent code generation. The platform supports natural language prompts through speech while maintaining traditional editing capabilities for fine-tuned adjustments.

Plugin availability reveals ecosystem gaps. Popular IDEs like IntelliJ IDEA, VSCode, and Vim offer extensive plugin repositories built around keyboard-centric workflows. Voice coding tools currently lack equivalent ecosystem depth, though projects like Vocode demonstrate promising integration approaches for conversational AI development.

API compatibility presents interesting dynamics. Voice coding tools excel at generating API integration code from natural language descriptions. A developer can say "Connect to the Stripe payment API and create a subscription" to generate a draft implementation. Traditional programming requires manual API documentation review and code writing.

Version control integration works seamlessly with both approaches. Git repositories store code identically regardless of input method, though voice-generated code may include more verbose comments and descriptive variable names that improve code readability in collaborative environments.

Framework support varies considerably. Voice coding tools demonstrate strong capabilities with popular frameworks like React, Django, and Express.js due to extensive training data. Niche frameworks or custom internal tools may receive limited voice coding support, making traditional programming necessary for specialized development.

Real-World Implementation Case Studies

Synthflow, a Berlin-based startup, uses Vocode framework for professional voice AI development. Their approach combines speech-to-text processing with real-time response generation, achieving sub-500ms response times for conversational AI applications. The team reports 40% faster prototype development using voice-first methodologies compared to traditional coding approaches.

GitHub's internal metrics reveal that 41% of code on the platform now contains AI-generated content, with voice-activated tools contributing significantly to this growth. Developers using GitHub Copilot's conversational features report increased satisfaction and reduced time spent on boilerplate code generation.

University research provides additional validation. The Technical University of Cluj-Napoca conducted studies with 16 mobile developers using AI voice tools versus traditional methods. Results showed 30% improvement in task completion times and 72% of participants rating voice tools as highly helpful for development work.

Remote development teams increasingly adopt hybrid approaches. Developers use voice coding for initial feature scoping and rapid prototyping, then switch to keyboard input for detailed implementation and debugging. This workflow combines the speed advantages of voice input with the precision of traditional programming.

Accessibility benefits drive adoption in specific communities. Developers with repetitive strain injuries, visual impairments, or physical disabilities find voice coding enables continued programming careers. Organizations prioritizing inclusive development practices invest in voice tools as accommodation solutions.

Future Trajectory and Strategic Recommendations

The convergence of improved speech recognition, advanced AI models, and natural language processing suggests continued growth for voice coding adoption. Google's Gemini Live demonstrates multimodal interactions where developers can share screens while discussing code changes verbally, creating new collaborative programming paradigms.

Technical limitations continue constraining widespread adoption. Background noise, accent recognition, and domain-specific terminology remain problematic for speech recognition systems. Advanced AI coding environments address some limitations through improved context understanding and error correction capabilities.

Enterprise deployment strategies should consider hybrid approaches. Teams benefit from voice coding for rapid prototyping, documentation generation, and collaborative design sessions while maintaining keyboard proficiency for detailed implementation work. Training programs should address both paradigms rather than forcing exclusive adoption.

Individual developers should evaluate voice coding based on specific use cases. Content creators, documentation writers, and architects gain immediate benefits from voice tools. Systems programmers, database administrators, and security specialists may find limited applicability for precision-critical tasks.

The competitive landscape continues evolving rapidly. Microsoft's integration of voice features into mainstream development tools signals industry commitment to voice-first programming. Smaller specialized tools like Super Whisper and Wispr Flow provide targeted solutions for specific workflows and preferences.

Investment in voice coding infrastructure makes sense for organizations prioritizing developer productivity and innovation speed. The technology particularly benefits teams working on customer-facing applications, content management systems, and rapid prototyping initiatives where development velocity outweighs implementation precision requirements.

Both voice coding and traditional keyboard programming will coexist for the foreseeable future, with developers choosing tools appropriate for specific tasks and contexts. The most successful development teams will master both approaches, leveraging voice coding for rapid iteration and traditional methods for precise implementation and maintenance work.