Kubernetes vs Simpler Alternatives: 2025 Battle Guide

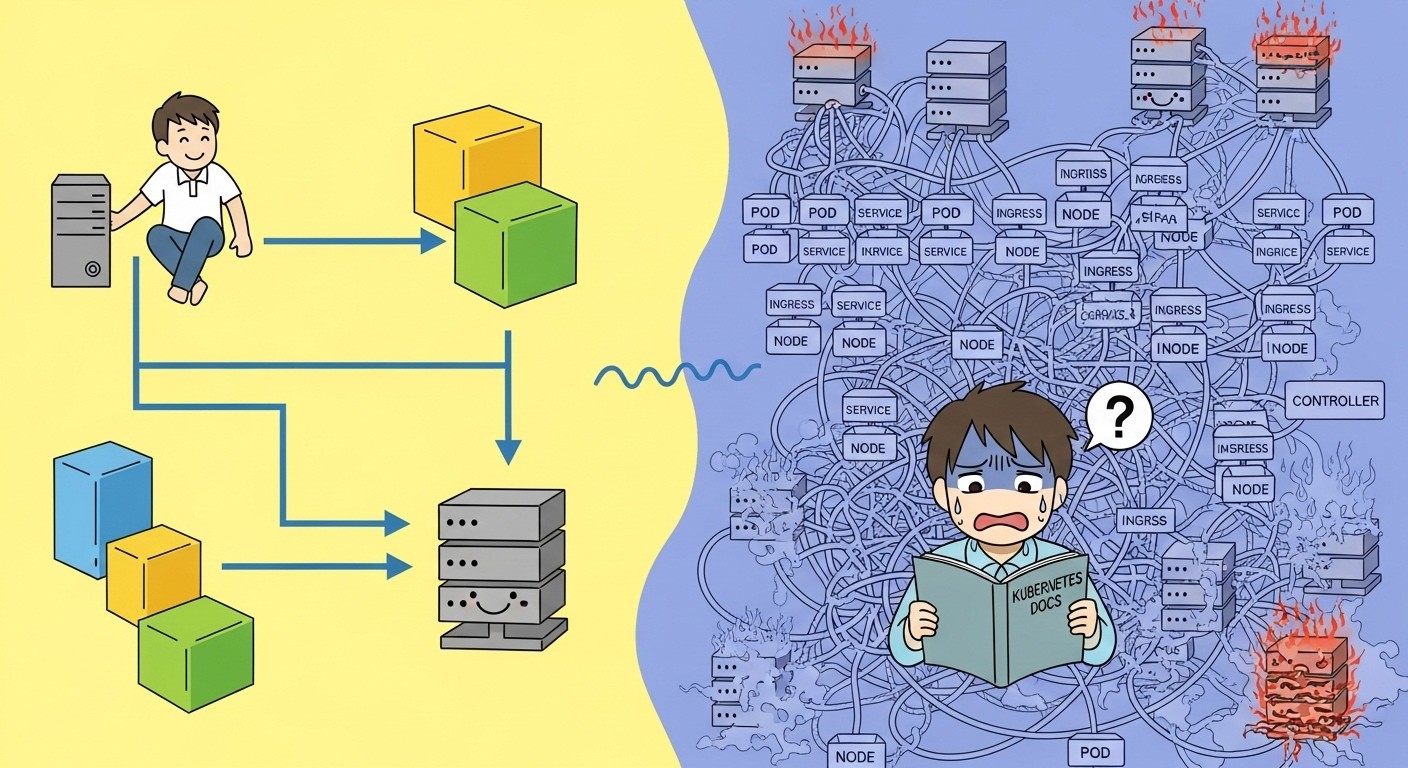

The container orchestration landscape is experiencing a seismic shift in 2025. While Kubernetes dominated the past decade, many tech companies are abandoning its complexity for simpler, more focused alternatives. This migration isn't driven by Kubernetes' technical limitations, but by its operational overhead that often exceeds project requirements.

Recent surveys indicate that 68% of engineering teams spend more time managing Kubernetes clusters than developing applications[20]. The total cost of ownership for a typical Kubernetes deployment includes not just infrastructure costs, but significant engineering hours dedicated to cluster management, security patches, and troubleshooting networking issues.

This comprehensive comparison examines six major container orchestration platforms, evaluating them across performance, cost, scalability, usability, adoption rates, and ecosystem support. Each platform targets different use cases, from simple Docker deployments to complex microservices architectures.

The Kubernetes Complexity Problem

Kubernetes requires substantial expertise to operate effectively. A standard production setup involves configuring etcd clusters, managing certificate rotation, setting up ingress controllers, implementing network policies, and maintaining persistent volume claims. The learning curve often takes 6-12 months for experienced developers to become productive.

Consider a typical Kubernetes deployment manifest for a simple web application:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp
spec:
  replicas: 3
  selector:
    matchLabels:
      app: webapp
  template:
    metadata:
      labels:
        app: webapp
    spec:
      containers:
        - name: webapp
          image: nginx:1.21
          ports:
            - containerPort: 80
          resources:
            requests:
              memory: "64Mi"
              cpu: "250m"
            limits:
              memory: "128Mi"
              cpu: "500m"
---
apiVersion: v1
kind: Service
metadata:
  name: webapp-service
spec:
  selector:
    app: webapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: LoadBalancer
```

This seemingly simple deployment requires understanding Kubernetes concepts like Deployments, Services, resource limits, and label selectors. Add persistent storage, secrets management, and horizontal pod autoscaling, and complexity multiplies exponentially.
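Of the concepts listed, label selectors are what tie the Service to the Deployment's pods: a Service routes to every pod whose labels contain all of the selector's key/value pairs. The Python sketch below illustrates that matching rule with plain dictionaries. The function name `selector_matches`, the pod names, and the extra `pod-template-hash` value are illustrative assumptions, not part of any Kubernetes client library.

```python
def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Return True when every selector key/value pair is present in the labels.

    Models equality-based matching only (the Service `selector` and
    `matchLabels` form); set-based expressions are not covered.
    """
    return all(labels.get(key) == value for key, value in selector.items())


# Selector taken from the Service in the manifest; pods are hypothetical.
service_selector = {"app": "webapp"}
pods = {
    "webapp-1": {"app": "webapp", "pod-template-hash": "abc123"},
    "webapp-2": {"app": "webapp", "pod-template-hash": "abc123"},
    "worker-1": {"app": "worker"},
}

# Names of the pods the Service would route traffic to.
selected = sorted(
    name for name, labels in pods.items()
    if selector_matches(service_selector, labels)
)
print(selected)
```

Extra labels on a pod do not prevent a match, which is why a typo in either the selector or the pod template labels silently leaves a Service with no endpoints.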

The operational burden includes cluster upgrades (requiring careful planning and potential downtime), monitoring multiple control plane components, and debugging networking issues across nodes. Many organizations discover they need dedicated Platform Engineering teams just to maintain their Kubernetes infrastructure.

HashiCorp Nomad: Simplicity Without Compromise

Nomad positions itself as the straightforward alternative to Kubernetes complexity. Released by HashiCorp, it uses a single binary architecture that eliminates the need for separate control plane components. Installation requires downloading one executable file and starting the agent.

Performance and Architecture

Nomad's architecture centers on a gossip protocol for cluster membership and the Raft consensus algorithm for leader election and state replication among servers. This design delivers impressive performance metrics: deployments complete 3-5x faster than equivalent Kubernetes operations, and resource overhead stays below 50MB per node compared to Kubernetes' 500MB+ footprint[20].
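Because Raft only makes progress while a majority of servers can reach each other, the number of Nomad servers determines how many failures the control plane survives. The following sketch works through that arithmetic; the function names are illustrative and the code models only the majority rule, not Nomad itself.

```python
def raft_quorum(servers: int) -> int:
    """Smallest majority of a Raft server set."""
    if servers < 1:
        raise ValueError("a cluster needs at least one server")
    return servers // 2 + 1


def failures_tolerated(servers: int) -> int:
    """Servers that can be lost while a majority still remains."""
    return servers - raft_quorum(servers)


# Compare common server counts: size, quorum, tolerated failures.
for size in (1, 3, 5, 7):
    print(size, raft_quorum(size), failures_tolerated(size))
```

An even server count adds cost without adding tolerance (four servers tolerate one failure, the same as three), which is why odd sizes such as three or five are the usual choice.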

The platform handles heterogeneous workloads naturally, supporting Docker containers, raw executables, Java applications, and virtual machines within the same cluster. This flexibility proves valuable for organizations with diverse technology stacks.

Real-World Implementation

A typical Nomad job specification demonstrates its simplicity:

```hcl
job "webapp" {
  datacenters = ["dc1"]
  type        = "service"

  group "web" {
    count = 3

    # Group-level networking replaces the task-level `network` block and
    # `port_map`, which were deprecated in Nomad 0.12.
    network {
      port "http" {
        to = 80 # container port; the host port is assigned dynamically
      }
    }

    task "nginx" {
      driver = "docker"

      config {
        image = "nginx:1.21"
        ports = ["http"]
      }

      resources {
        cpu    = 500 # MHz
        memory = 256 # MB
      }

      service {
        name     = "webapp"
        port     = "http"
        provider = "nomad" # native service discovery (Nomad 1.3+); omit to register with Consul

        check {
          type     = "http"
          path     = "/"
          interval = "10s"
          timeout  = "2s"
        }
      }
    }
  }
}
```

This job file accomplishes the same deployment as the Kubernetes example above, but with more readable syntax and fewer abstraction layers. Nomad's built-in service discovery and health checking eliminate the need for additional components.

Cost Considerations

Nomad significantly reduces operational costs through simplified management. Organizations report 40-60% lower total cost of ownership compared to Kubernetes deployments, primarily due to reduced engineering overhead[20]. The single binary deployment model means fewer moving parts to monitor and maintain.

However, Nomad lacks some advanced features that Kubernetes provides out-of-box, such as network policies and custom resource definitions. Organizations requiring these capabilities must implement them through HashiCorp's broader ecosystem (Consul for service mesh, Vault for secrets management).

Docker Swarm: Native Docker Integration

Docker Swarm represents the path of least resistance for teams already committed to Docker. Built directly into Docker Engine, it requires no additional software installation or configuration files. Activation happens with a single command: `docker swarm init`.

Technical Implementation

Swarm Mode transforms standalone Docker hosts into a cluster with automatic load balancing and service discovery. The overlay network driver handles multi-host networking without external dependencies. Services deploy using familiar Docker Compose syntax:

```yaml
version: '3.8'

services:
  webapp:
    image: nginx:1.21
    ports:
      - "80:80"
    deploy:
      replicas: 3 # the replica count belongs under `deploy` in the v3 file format
      resources:
        limits:
          cpus: '0.5'
          memory: 128M
        reservations:
          cpus: '0.25'
          memory: 64M
      restart_policy:
        condition: on-failure
        delay: 5s
        max_attempts: 3
    networks:
      - webnet

networks:
  webnet:
    driver: overlay
```

Deployment executes with `docker stack deploy -c docker-compose.yml webapp`, and scaling happens through `docker service scale webapp_webapp=5`. This simplicity appeals to development teams wanting orchestration without operational complexity.

Performance Characteristics

Docker Swarm delivers excellent performance for small to medium-scale deployments. Service discovery operates through Docker's internal DNS resolver, providing sub-millisecond lookup times. The integrated load balancer distributes traffic using a round-robin algorithm with connection tracking.
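Round-robin distribution simply hands each new connection to the next task in a fixed rotation. The sketch below shows the idea in a few lines of Python; it is a conceptual illustration only and does not reproduce Swarm's actual implementation. The class name `RoundRobinBalancer` and the task addresses are made up for the example.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backends in a fixed, repeating order."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        # Copy the list so later changes by the caller do not affect rotation.
        self._rotation = cycle(list(backends))

    def pick(self) -> str:
        """Return the backend that should receive the next connection."""
        return next(self._rotation)


# Three hypothetical task addresses behind one service.
balancer = RoundRobinBalancer(["10.0.0.2", "10.0.0.3", "10.0.0.4"])
assignments = [balancer.pick() for _ in range(5)]
print(assignments)
```

Plain rotation ignores how busy each task is, which is one reason uneven workloads can cause contention even when replicas are spread evenly.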

However, Swarm Mode shows limitations at scale. Clusters become unstable beyond 100-150 nodes, and the built-in scheduler lacks sophisticated placement strategies. Organizations often hit resource contention issues when running diverse workloads on the same cluster.

Ecosystem Limitations

Swarm's biggest drawback involves ecosystem support. The broader container ecosystem focuses on Kubernetes, leaving Swarm with limited third-party integrations. Monitoring solutions, CI/CD pipelines, and security scanning tools often provide first-class Kubernetes support while treating Swarm as an afterthought.

Despite these limitations, Swarm excels for organizations with straightforward containerization needs. E-commerce platforms, content management systems, and internal tools benefit from its operational simplicity without requiring advanced orchestration features.

AWS App Runner: Serverless Container Deployment

AWS App Runner eliminates infrastructure management entirely by providing a fully managed container service. Developers push container images to ECR, and App Runner handles deployment, scaling, load balancing, and health monitoring automatically.

Implementation Model

App Runner services deploy through the AWS Console, CLI, or CloudFormation templates. A typical configuration requires minimal parameters:

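```yaml
# apprunner-service.yaml
# Logical IDs (WebappAutoScaling, WebappService) and the scaling
# configuration name are placeholders chosen for this example.
Resources:
  # Scaling limits live in their own resource and are attached by ARN.
  WebappAutoScaling:
    Type: AWS::AppRunner::AutoScalingConfiguration
    Properties:
      AutoScalingConfigurationName: webapp-scaling
      MinSize: 1
      MaxSize: 10

  WebappService:
    Type: AWS::AppRunner::Service
    Properties:
      ServiceName: webapp-service
      SourceConfiguration:
        # Private ECR images also need an AuthenticationConfiguration
        # with an access role, omitted here.
        AutoDeploymentsEnabled: true
        ImageRepository:
          ImageIdentifier: 123456789012.dkr.ecr.us-east-1.amazonaws.com/webapp:latest
          ImageRepositoryType: ECR
          ImageConfiguration:
            Port: '80'
            RuntimeEnvironmentVariables:
              - Name: ENV
                Value: production
            StartCommand: nginx -g 'daemon off;'
      InstanceConfiguration:
        Cpu: 0.25 vCPU
        Memory: 0.5 GB
      AutoScalingConfigurationArn: !GetAtt WebappAutoScaling.AutoScalingConfigurationArn
```

This configuration automatically scales based on incoming traffic, handling everything from TLS certificate provisioning to health checks. The service maintains a 99.9% availability SLA without requiring any infrastructure management.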

Cost Structure and Performance

App Runner uses a pay-per-use pricing model: $0.000025 per vCPU-second and $0.0000125 per GB-second, plus $0.10 per GB for outbound data transfer. For applications with variable traffic patterns, this often proves more economical than maintaining dedicated infrastructure.
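To see how per-second pricing translates into a monthly bill, the sketch below applies the rates quoted in this section to the 0.25 vCPU / 0.5 GB instance size used in the configuration. The rates are copied from the text, not checked against current AWS pricing, and the 50 GB egress figure and the eight-hours-a-day scenario are assumptions chosen for illustration.

```python
VCPU_RATE_PER_SECOND = 0.000025     # USD per vCPU-second, as quoted above
MEMORY_RATE_PER_SECOND = 0.0000125  # USD per GB-second, as quoted above
EGRESS_RATE_PER_GB = 0.10           # USD per GB of outbound transfer

SECONDS_PER_30_DAYS = 30 * 24 * 60 * 60


def monthly_cost(vcpus: float, memory_gb: float,
                 active_seconds: int, egress_gb: float = 0.0) -> float:
    """Estimate a monthly bill for one instance at the quoted rates."""
    compute = vcpus * active_seconds * VCPU_RATE_PER_SECOND
    memory = memory_gb * active_seconds * MEMORY_RATE_PER_SECOND
    egress = egress_gb * EGRESS_RATE_PER_GB
    return round(compute + memory + egress, 2)


# One instance running all month with an assumed 50 GB of egress.
always_on = monthly_cost(0.25, 0.5, SECONDS_PER_30_DAYS, egress_gb=50)

# The same instance billed for only eight hours a day, no egress.
business_hours = monthly_cost(0.25, 0.5, 8 * 60 * 60 * 30)

print(always_on, business_hours)
```

Under these assumptions a single small instance costs roughly $37 a month when always on and about $11 when billed for a third of the time, which is the gap the variable-traffic argument relies on.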

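Performance characteristics include automatic scaling to handle traffic spikes, with new instances launching in 30-60 seconds. App Runner keeps at least one provisioned instance rather than scaling to zero, which is why the minimum size in the configuration is 1. The service integrates with AWS Application Load Balancer for advanced routing and AWS WAF for security filtering.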

However, App Runner introduces vendor lock-in concerns and limited customization options. Applications must conform to App Runner's runtime constraints, and advanced networking features require additional AWS services. Organizations prioritizing portability should consider these limitations carefully.

Use Case Scenarios

App Runner excels for web APIs, microservices, and background processing applications with predictable scaling patterns. Startups and development teams benefit from zero operational overhead, while enterprises use it for internal tools and proof-of-concept deployments.

The service integrates seamlessly with AWS's broader ecosystem, including CloudWatch for monitoring, Systems Manager for configuration management, and IAM for access control. This tight integration simplifies compliance and security implementations for AWS-centric organizations.

Fly.io: Edge-First Container Platform

Fly.io takes a fundamentally different approach by deploying containers across a global edge network. Instead of managing traditional clusters, applications run on Firecracker microVMs distributed across 35+ regions worldwide, providing sub-50ms latency for global users.

Architecture and Deployment

Fly.io applications deploy through a simple CLI tool and configuration file:

```toml
# fly.toml
app = "webapp"
primary_region = "iad"

[build]
  dockerfile = "Dockerfile"

[http_service]
  internal_port = 80
  force_https = true
  auto_stop_machines = true
  auto_start_machines = true
  min_machines_running = 0
  processes = ["app"]

  [[http_service.checks]]
    grace_period = "10s"
    interval = "30s"
    method = "GET"
    timeout = "5s"
    path = "/health"

# Machine sizing is declared as an array of tables.
[[vm]]
  cpu_kind = "shared"
  cpus = 1
  memory_mb = 256
```

Deployment happens through `flyctl deploy`, which builds the container image, distributes it globally, and starts instances based on user proximity. The platform automatically handles routing, load balancing, and geographic failover.

Performance and Global Distribution

Fly.io's edge-first architecture delivers exceptional performance for globally distributed applications. The Anycast network routes requests to the nearest available instance, typically reducing latency by 60-80% compared to centralized deployments.

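The platform handles data persistence through Fly Volumes, which are local to a single machine in a single region. Fly.io does not replicate volumes automatically, so applications that need data in several regions must handle replication themselves, typically at the database layer. This lets applications keep local data stores close to users while serving global traffic.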

Scaling and Resource Management

Fly.io implements automatic scaling based on request volume and geographic demand. Machines start in under 1 second when traffic arrives and automatically stop during idle periods, minimizing costs. The platform charges only for active CPU time: $0.000001667 per vCPU-ms and $0.000000234 per GB-ms.

This pricing model proves extremely cost-effective for applications with sporadic traffic or geographic usage patterns. However, applications requiring consistent background processing or large-scale data processing may find traditional cluster approaches more economical.

OpenShift Serverless: Enterprise Kubernetes Abstraction

Red Hat's OpenShift Serverless builds on Kubernetes and Knative to provide enterprise-grade serverless containers. It combines Kubernetes' power with simplified deployment models, targeting organizations that need enterprise features without operational complexity.

Implementation Framework

OpenShift Serverless uses Knative Serving for request-driven scaling and Knative Eventing for event-driven architectures. Services deploy through standard Kubernetes manifests with Knative annotations:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: webapp
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/minScale: "1"
        autoscaling.knative.dev/maxScale: "100"
        autoscaling.knative.dev/target: "50"
    spec:
      containers:
        - image: registry.access.redhat.com/ubi8/nginx-118
          ports:
            - containerPort: 8080
          resources:
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              cpu: 1000m
              memory: 512Mi
```

This configuration automatically scales containers based on incoming requests, targeting 50 concurrent requests per instance and scaling up rapidly during traffic spikes. With `minScale` set to "1", one instance always stays warm; setting it to "0" lets the service scale to zero during idle periods at the cost of a cold start. The platform handles routing, SSL termination, and health checking automatically.
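The three autoscaling annotations interact in a simple way: the autoscaler divides observed concurrency by the per-instance target and clamps the result between the minimum and maximum scale. The Python sketch below works through that calculation with the values from the manifest. It is a simplified model under stated assumptions: it ignores refinements such as target utilization, averaging windows, and panic mode, and the function name `desired_replicas` is illustrative.

```python
import math


def desired_replicas(observed_concurrency: float, target_per_instance: float,
                     min_scale: int, max_scale: int) -> int:
    """Instances needed to keep per-instance concurrency at the target."""
    if target_per_instance <= 0:
        raise ValueError("target must be positive")
    wanted = math.ceil(observed_concurrency / target_per_instance)
    # Clamp to the configured bounds (minScale / maxScale annotations).
    return max(min_scale, min(wanted, max_scale))


# Values from the manifest: target 50, minScale 1, maxScale 100.
idle = desired_replicas(0, 50, min_scale=1, max_scale=100)
moderate = desired_replicas(120, 50, min_scale=1, max_scale=100)
spike = desired_replicas(10_000, 50, min_scale=1, max_scale=100)
print(idle, moderate, spike)
```

With no traffic the floor of one instance applies, 120 concurrent requests call for three instances, and a spike of 10,000 would need 200 but is capped at the maximum of 100.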

Enterprise Features and Integration

OpenShift Serverless provides enterprise-grade security through integrated Red Hat Single Sign-On, role-based access control, and network policies. The platform includes advanced monitoring through Prometheus and Grafana, with distributed tracing capabilities for debugging microservices architectures.

Integration with Red Hat's ecosystem includes support for CodeReady Workspaces for development, Quay for container registry management, and Advanced Cluster Management for multi-cluster deployments. These integrations prove valuable for large enterprises with complex compliance requirements.

Cost and Licensing Considerations

OpenShift Serverless requires Red Hat OpenShift licensing, which starts at $50 per core per month for self-managed deployments. While expensive compared to open-source alternatives, the cost includes enterprise support, security patches, and compliance certifications.

Organizations already invested in Red Hat's ecosystem find OpenShift Serverless provides familiar operational models while adding serverless capabilities. However, companies seeking cost optimization may find the licensing costs prohibitive for smaller workloads.

Comparative Analysis: Performance Benchmarks

Real-world performance varies significantly across platforms depending on workload characteristics, scaling requirements, and operational priorities. Independent benchmarks from Cloud Native Computing Foundation studies reveal distinct performance profiles for each platform.

| Metric | Kubernetes | Nomad | Docker Swarm | AWS App Runner | OpenShift Serverless | Fly.io |
|---|---|---|---|---|---|---|
| Deployment Time | 45-90s | 10-15s | 5-10s | 60-120s | 30-45s | 15-30s |
| Cold Start | N/A | <1s | <1s | 30-60s | 5-15s | <1s |
| Resource Overhead | 500-1000MB | 50MB | 100MB | 0MB | 200MB | 0MB |
| Max Cluster Size | 5000+ nodes | 1000 nodes | 150 nodes | N/A | 1000+ nodes | Global |
| Scaling Speed | 30-60s | 5-10s | 10-20s | 30-60s | 1-5s | <1s |
| Network Latency | 1-2ms | 0.5-1ms | 1-2ms | 5-10ms | 1-3ms | 20-50ms |

These benchmarks highlight each platform's strengths and trade-offs. Kubernetes excels at large-scale deployments but requires significant resources. Nomad provides excellent performance with minimal overhead, while Docker Swarm offers the fastest deployment times for smaller clusters.

AWS App Runner and OpenShift Serverless sacrifice some control for operational simplicity, with App Runner providing zero infrastructure management at the cost of vendor lock-in. Fly.io's global edge deployment offers unique advantages for latency-sensitive applications but may introduce complexity for traditional cluster workloads.

Cost Analysis: Total Cost of Ownership

Understanding true costs requires examining both infrastructure expenses and operational overhead. A typical three-tier web application serving 10,000 daily active users reveals significant cost differences across platforms.

Kubernetes on AWS EKS: Monthly infrastructure costs of $800-1200 for worker nodes, plus $72 for managed control plane, plus 40-60 hours of engineering time for maintenance and troubleshooting. Total monthly cost: $2000-3500.

Nomad on AWS EC2: Monthly infrastructure costs of $600-900 for worker nodes, no control plane fees, plus 10-20 hours of engineering time monthly. Total monthly cost: $1200-2100.

Docker Swarm on AWS EC2: Monthly infrastructure costs of $500-800 for nodes, no additional fees, plus 5-15 hours of engineering time. Total monthly cost: $1000-1800.

AWS App Runner: Monthly costs of $200-600 based on usage, zero engineering overhead for infrastructure. Total monthly cost: $200-600.

OpenShift Serverless: Monthly infrastructure costs of $1200-1800 including Red Hat licensing, plus 20-30 hours of engineering time. Total monthly cost: $2800-4200.

Fly.io: Monthly costs of $150-400 based on usage and geographic distribution, minimal engineering overhead. Total monthly cost: $200-500.

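These calculations assume $150/hour fully-loaded engineering costs and include only infrastructure management, not application development time. At that rate the listed engineering hours alone exceed several of the quoted totals (40-60 hours is $6,000-9,000), so the totals are best read as relative rankings rather than exact figures. Organizations with existing Kubernetes expertise may reduce operational costs significantly, while teams new to containers often underestimate learning curves and ongoing maintenance requirements.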
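To make the arithmetic explicit, the sketch below recomputes a monthly total for each self-managed platform from the infrastructure ranges, engineering hours, and hourly rate given in this section. It uses only those stated inputs; the dictionary layout and function name are illustrative. The results come out higher than the totals quoted in the list, which suggests those totals assume a lower effective rate or fewer hours.

```python
HOURLY_RATE = 150  # USD, fully-loaded engineering cost stated in the text

# (low, high) monthly infrastructure cost in USD and engineering hours.
# Kubernetes infrastructure includes the $72 managed control plane fee.
PLATFORMS = {
    "Kubernetes on EKS": {"infra": (800 + 72, 1200 + 72), "hours": (40, 60)},
    "Nomad on EC2": {"infra": (600, 900), "hours": (10, 20)},
    "Docker Swarm on EC2": {"infra": (500, 800), "hours": (5, 15)},
    "OpenShift Serverless": {"infra": (1200, 1800), "hours": (20, 30)},
}


def monthly_total(infra: tuple[int, int], hours: tuple[int, int],
                  rate: int = HOURLY_RATE) -> tuple[int, int]:
    """Return the (low, high) monthly cost including engineering time."""
    low = infra[0] + hours[0] * rate
    high = infra[1] + hours[1] * rate
    return low, high


totals = {
    name: monthly_total(inputs["infra"], inputs["hours"])
    for name, inputs in PLATFORMS.items()
}

for name, (low, high) in totals.items():
    print(f"{name}: ${low:,}-${high:,}")
```

Engineering time dominates every self-managed option at this rate, so the ranking between platforms is driven far more by hours of maintenance than by node pricing.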

Decision Framework: Choosing the Right Platform

Selecting appropriate container orchestration requires evaluating technical requirements against organizational constraints. This decision framework helps teams identify optimal platforms based on specific criteria.

Choose Kubernetes if: Your organization requires advanced features like custom resource definitions, network policies, or multi-tenancy. You have dedicated platform engineering resources and need to support complex, large-scale microservices architectures. Vendor portability is critical, and you can invest in the learning curve and operational overhead.

Choose Nomad if: You want simplicity without sacrificing power, need to run diverse workload types (containers, VMs, binaries), and prefer HashiCorp's ecosystem. Your team values operational simplicity but requires more control than serverless platforms provide. You're building new platforms and can standardize on HashiCorp tools.

Choose Docker Swarm if: Your team already uses Docker extensively, you need quick time-to-market for containerization, and your scale requirements are modest (under 100 nodes). Operational simplicity outweighs advanced features, and you prefer integrated solutions over complex toolchains.

Choose AWS App Runner if: You want zero infrastructure management, your applications fit standard web service patterns, and vendor lock-in isn't a concern. Cost optimization for variable workloads is important, and you're building on AWS with integrated services.

Choose OpenShift Serverless if: You require enterprise features and support, need Kubernetes compatibility with serverless benefits, and have budget for Red Hat licensing. Compliance requirements are strict, and you value vendor support for production issues.

Choose Fly.io if: Global performance is critical, you want edge deployment capabilities, and you're building latency-sensitive applications. Cost optimization for geographic distribution matters, and you prefer developer-centric platforms over infrastructure management.

The container orchestration landscape in 2025 offers unprecedented choice, with each platform optimizing for different priorities. While Kubernetes remains powerful for complex scenarios, simpler alternatives often provide better developer experience and lower operational costs for focused use cases.

Success depends on honest assessment of requirements, team capabilities, and long-term strategic goals. The platforms examined here represent mature alternatives to Kubernetes complexity, each offering distinct advantages for specific organizational contexts. As the ecosystem continues evolving, the trend toward specialized, purpose-built solutions appears likely to accelerate, giving development teams more options than ever for deploying containerized applications effectively.

The key insight for 2025 is that choosing an orchestration platform extends beyond technical capabilities to include operational fit, team expertise, and organizational priorities. The most successful deployments align platform capabilities with real-world constraints, avoiding both over-engineering and under-powered solutions that limit growth potential.